GTC '25: Nvidia showcases Blackwell Ultra, DGX Spark, RTX Pro, Dynamo and reasoning models

Key Points

- At its GTC 2025 conference, Nvidia unveiled the Blackwell Ultra platform, which it claims will deliver 1.5 times the AI performance of its predecessor and will be available in the second half of 2025. The company also announced its new Dynamo inference software, designed specifically for AI reasoning models.

- The company also introduced the Llama Nemotron model family with improved reasoning capabilities that are said to be up to 20 percent more accurate in benchmarks than the underlying Llama models. For networking, Nvidia introduced Spectrum-X Photonics and Quantum-X Photonics, which integrate optical communications directly into switches.

- For developers, Nvidia introduced personal AI computers: DGX Spark (the "world's smallest AI supercomputer") and DGX Station with the GB300 Grace Blackwell Ultra Desktop Superchip. In robotics, Isaac GR00T N1 was announced as a customizable foundation model for humanoid robots, while the new Cosmos world foundation models are designed for physical AI applications.

Nvidia introduced a comprehensive range of new products and technologies at its GTC 2025 conference, positioning itself for a new era of AI reasoning. The centerpiece of the announcements was the Blackwell Ultra platform.

The new Nvidia Blackwell Ultra platform is an advancement of the Blackwell architecture introduced just last year. It includes the Nvidia GB300 NVL72 rack-scale solution and the Nvidia HGX B300 NVL16 system. With this release, Nvidia moves to its planned annual cycle for AI accelerators for the first time. According to the company, the GB300 NVL72 delivers 1.5 times the AI performance of the GB200 NVL72 at FP4 precision.

Partners including Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro will offer the new Blackwell Ultra products starting in the second half of 2025. Cloud service providers like AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure, along with GPU cloud providers such as CoreWeave, will be among the first to offer Blackwell Ultra-based instances.

Nvidia also announced the DGX SuperPOD with Blackwell Ultra GPUs. The DGX GB300 system features Nvidia Grace Blackwell Ultra Superchips – with 36 Nvidia Grace CPUs and 72 Nvidia Blackwell Ultra GPUs – and a liquid-cooled rack-scale architecture. Each DGX GB300 system also includes 72 Nvidia ConnectX-8 SuperNICs, delivering network speeds up to 800 Gb/s.

As an alternative, Nvidia offers the air-cooled DGX B300 system based on the Nvidia B300 NVL16 architecture, designed for existing data centers that haven't yet adopted liquid cooling.

Nvidia Dynamo: Optimized inference software for AI reasoning

Alongside the new hardware, Nvidia introduced Dynamo, a new open-source inference software designed to accelerate and scale AI reasoning models.

Dynamo succeeds the Triton Inference Server and was specifically developed to maximize token throughput for AI services. In practice, this means more requests can be processed while the cost per request decreases – a crucial factor for the economic viability of AI services.

The software orchestrates and accelerates inference communication across thousands of GPUs using a method called "disaggregated serving." This separates the processing (prefill) and generation (decode) phases of large language models (LLMs) onto different GPUs, allowing each phase to be optimized independently for its specific requirements.
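The split described above can be illustrated with a minimal Python sketch. It is not Dynamo's API: the `PrefillWorker`, `DecodeWorker`, `KVCache`, and `serve` names are hypothetical, the "model" is a placeholder, and the round-robin assignment is only an assumption chosen for simplicity. The point is that the prompt is processed on one pool of workers, and the resulting state is handed to a separate pool that generates tokens.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class KVCache:
    """Stand-in for the state that prompt processing hands to generation."""
    prompt_tokens: List[str]


@dataclass
class PrefillWorker:
    """Hypothetical worker that only processes prompts."""
    name: str

    def prefill(self, prompt: str) -> KVCache:
        # A real system would run the model over the whole prompt here.
        return KVCache(prompt_tokens=prompt.split())


@dataclass
class DecodeWorker:
    """Hypothetical worker that only generates tokens from a prepared cache."""
    name: str

    def decode(self, cache: KVCache, max_new_tokens: int) -> List[str]:
        # Placeholder generation: emit dummy tokens one at a time.
        return [f"tok{i}" for i in range(max_new_tokens)]


def serve(prompt: str,
          prefill_pool: List[PrefillWorker],
          decode_pool: List[DecodeWorker],
          request_id: int,
          max_new_tokens: int = 3) -> Dict[str, object]:
    """Run the two phases on separate pools (round-robin is an assumption)."""
    prefill_worker = prefill_pool[request_id % len(prefill_pool)]
    decode_worker = decode_pool[request_id % len(decode_pool)]
    cache = prefill_worker.prefill(prompt)                 # phase 1: processing
    tokens = decode_worker.decode(cache, max_new_tokens)   # phase 2: generation
    return {
        "prefill_worker": prefill_worker.name,
        "decode_worker": decode_worker.name,
        "prompt_len": len(cache.prompt_tokens),
        "tokens": tokens,
    }


result = serve(
    "why is the sky blue",
    prefill_pool=[PrefillWorker("p0"), PrefillWorker("p1")],
    decode_pool=[DecodeWorker("d0")],
    request_id=1,
)
```

Because the two pools are separate lists, their sizes can be changed independently, which is the property the article attributes to the approach.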

According to Nvidia, Dynamo doubles the performance (and potential revenue) of "AI factories" running Llama models on the current Hopper platform with the same number of GPUs. When running the DeepSeek-R1 model on a large cluster of GB200 NVL72 racks, the software reportedly increases the number of tokens generated per GPU by more than 30 times.
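The link between throughput and cost can be made concrete with a short calculation. The figures below are purely hypothetical (a GPU-hour price of 2.0 and 1,000 tokens per second per GPU are illustrative assumptions, not numbers from Nvidia); the sketch only shows that doubling tokens per GPU at a fixed hardware cost halves the cost per token.

```python
def cost_per_million_tokens(gpu_hour_cost: float,
                            tokens_per_second_per_gpu: float) -> float:
    """Cost of generating one million tokens on a single GPU."""
    tokens_per_hour = tokens_per_second_per_gpu * 3600
    return gpu_hour_cost / tokens_per_hour * 1_000_000


# Hypothetical inputs: same GPU price, throughput doubled by software.
baseline = cost_per_million_tokens(gpu_hour_cost=2.0,
                                   tokens_per_second_per_gpu=1000.0)
doubled = cost_per_million_tokens(gpu_hour_cost=2.0,
                                  tokens_per_second_per_gpu=2000.0)

print(f"baseline: {baseline:.4f} per million tokens")
print(f"doubled throughput: {doubled:.4f} per million tokens")
```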

To achieve these performance improvements, Dynamo integrates several features designed to increase throughput and reduce costs. The software can dynamically add, remove, and reassign GPUs in response to fluctuating request volumes and types. It can also identify which GPUs in a large cluster can answer a request with the least computation and route the request to them.
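One way to picture the routing idea is a scheduler that prefers the GPU that has already processed the longest matching prompt prefix, so less work has to be repeated. The sketch below rests on that assumption; it is not a description of Dynamo's actual router, and `shared_prefix_len`, `pick_worker`, and the worker names are hypothetical.

```python
from typing import Dict, List, Sequence


def shared_prefix_len(a: Sequence[str], b: Sequence[str]) -> int:
    """Number of leading tokens that two token sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def pick_worker(request_tokens: Sequence[str],
                cached: Dict[str, List[List[str]]],
                load: Dict[str, int]) -> str:
    """Choose the worker with the longest cached prefix; break ties by lower load."""
    def score(worker: str):
        best = max(
            (shared_prefix_len(request_tokens, c) for c in cached.get(worker, [])),
            default=0,
        )
        return (best, -load[worker])

    return max(load, key=score)


# Hypothetical cluster state: prompts each GPU has seen, and its current queue length.
cached = {
    "gpu0": [["you", "are", "a", "helpful", "bot"]],
    "gpu1": [["translate", "this"]],
}
load = {"gpu0": 3, "gpu1": 1, "gpu2": 0}

reuse_choice = pick_worker(["you", "are", "a", "pirate"], cached, load)
cold_choice = pick_worker(["hello"], cached, load)
```

In this toy state, the first request goes to the busier `gpu0` because three prompt tokens are already cached there, while the second request, which matches nothing, falls back to the idle `gpu2`.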

Several companies are already planning to deploy Dynamo, including Perplexity AI.

Llama Nemotron: AI models for reasoning and agentic AI

The company also announced a new Llama Nemotron model family with reasoning capabilities, intended to provide developers and businesses with a foundation for advanced AI agents. The accuracy in various benchmarks is reportedly up to 20 percent higher than the underlying Llama models. The models are available as Nvidia NIM Microservices in Nano, Super, and Ultra sizes. Truly meaningful comparisons with other reasoning models are not yet available. However, through optimization for Nvidia chips and FP4 precision, the models are said to run up to five times faster than comparable open reasoning models – which should also make them significantly less expensive to operate.

According to a press release, companies including Accenture, Amdocs, Atlassian, Box, Cadence, CrowdStrike, Deloitte, IQVIA, Microsoft, SAP, and ServiceNow are already working with Nvidia on the new reasoning models and associated software. Nvidia is also introducing AI-Q, a LangGraph-based blueprint for agent systems.

Silicon photonics for revolutionary network technology

With Nvidia Spectrum-X Photonics and Nvidia Quantum-X Photonics, the company also introduced new network technology that integrates optical communication directly into switches. These switches are designed to drastically reduce the energy consumption and operating costs of communication in data centers.

The new switches offer 1.6 terabits per second per port and, according to Nvidia, deliver 3.5 times better energy efficiency, 63 times greater signal integrity, and 10 times better network resilience than conventional methods. Nvidia Quantum-X Photonics InfiniBand switches are expected to be available later this year, while Nvidia Spectrum-X Photonics Ethernet switches are scheduled for release in 2026.

According to Nvidia CEO Jensen Huang, this move breaks some of the limitations of existing networks and could open the door to clusters of millions of GPUs.

Personal AI supercomputers with DGX Spark and DGX Station

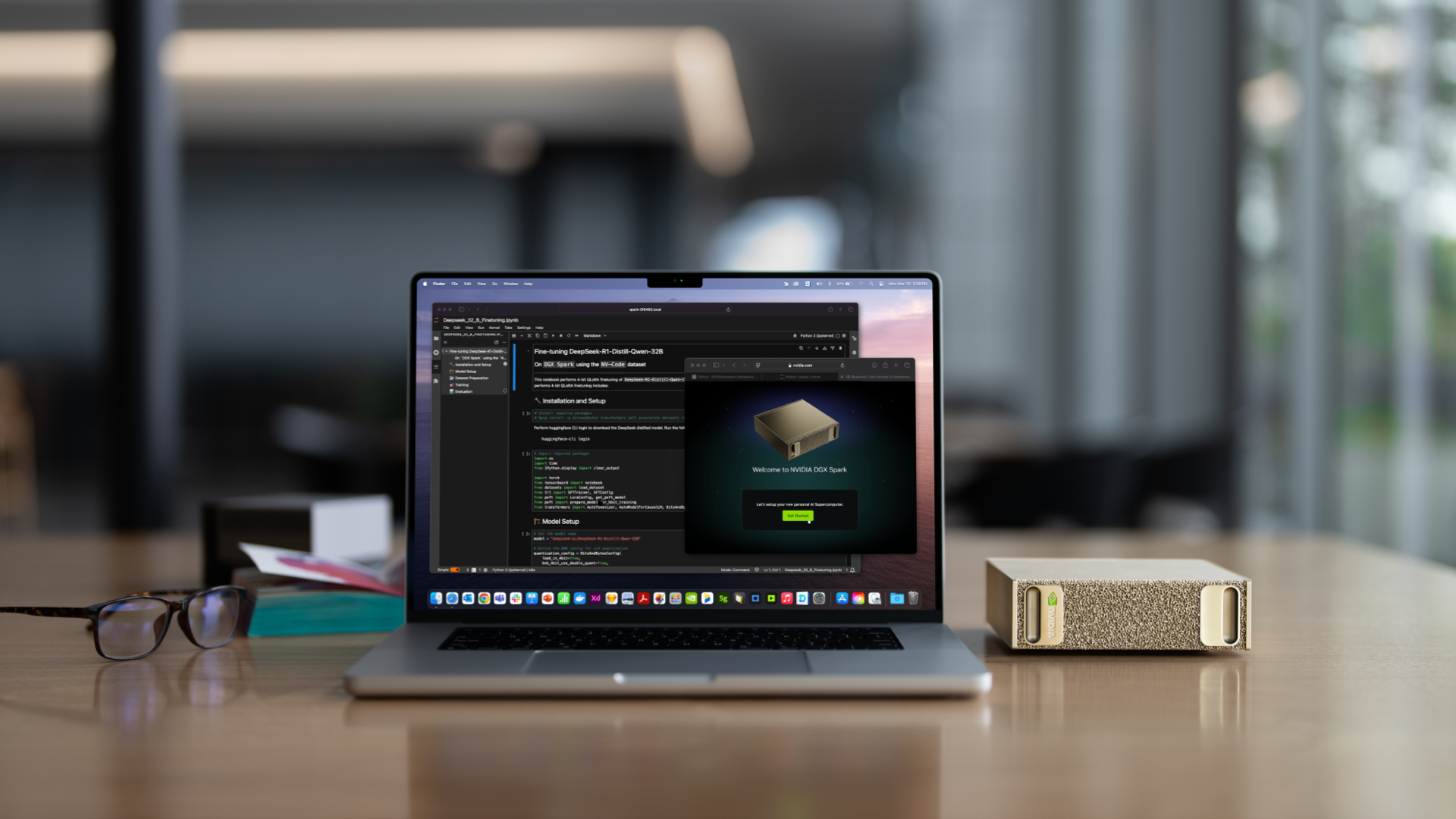

For developers, researchers, and data scientists, Nvidia introduced the personal AI computers DGX Spark and DGX Station.

DGX Spark (formerly Digits) is described by Nvidia as the world's smallest AI supercomputer and is powered by the Nvidia GB10 Grace Blackwell Superchip. DGX Station, on the other hand, is the first desktop system with the Nvidia GB300 Grace Blackwell Ultra Desktop Superchip and features 784 GB of coherent memory for large training and inference workloads. According to Huang, this represents a new class of computers, designed for AI-native developers and for running AI-native applications.

For designers, developers, data scientists, and creatives, Nvidia also introduced the expected Nvidia RTX PRO Blackwell series. The new GPUs offer improved performance with the Nvidia Streaming Multiprocessor, fourth-generation RT Cores, and fifth-generation Tensor Cores. They also support larger and faster GDDR7 memory, offering up to 96 GB for workstations and servers and up to 24 GB for laptops.

Isaac GR00T N1: Foundation model for humanoid robots

In robotics, Nvidia presented Isaac GR00T N1, which the company describes as the first open, fully customizable foundation model for generalized humanoid reasoning and skill capabilities. The model had been introduced earlier, but is now reportedly available. In a demonstration, the company showed how robotics manufacturers can use the model to control their humanoid robots.

Nvidia also announced a collaboration with Google DeepMind and Disney Research to develop Newton, an open-source physics engine that enables robots to accomplish complex tasks with greater precision. Google DeepMind plans to integrate a version of Newton into the MuJoCo training platform.

Cosmos: World foundation models for physical AI

For Nvidia Cosmos, the company introduced new World Foundation Models (WFMs): Cosmos Transfer converts structured data such as segmentation maps and lidar scans into photorealistic videos – ideal for synthetic training data in robotics applications. Cosmos Predict generates virtual world states from multimodal inputs and, with the new models, can also predict intermediate actions or motion trajectories. On Grace Blackwell systems, this enables real-time world generation, according to Nvidia.

Cosmos Reason complements the portfolio as an open reasoning model with spatiotemporal awareness that interprets video data and predicts interaction outcomes. Companies such as Agility Robotics, 1X, and Skild AI are already using these technologies to improve their autonomous systems, according to Nvidia.

Nvidia's Vera Rubin GPU aims for 50 petaflops

Nvidia CEO Jensen Huang announced the Vera Rubin GPU, set for release in mid-2026. The GPU combines two chips and Nvidia's new "Vera" CPU, promising up to 50 petaflops in AI calculations – more than double the performance of current Blackwell chips. The CPU is also said to offer twice the speed of its predecessor. Huang also revealed plans for the multi-GPU Vera Rubin Ultra and the four-GPU Rubin Next, both slated for late 2027. Additionally, the Feynman GPU, which will also use the Vera CPU, is slated for 2028, though further details were not provided.

Nvidia also announced a Quantum Computing Research Center in Boston that will integrate leading quantum hardware with AI supercomputers. The center will collaborate with Quantinuum, Quantum Machines, and QuEra Computing and is expected to begin operations later this year.

With the Nvidia AI Data Platform, the company introduced a customizable reference architecture. In climate technology, Nvidia presented the Omniverse Blueprint for Earth-2 weather analytics, which is intended to accelerate the development of more accurate weather forecasting solutions.
