Well-known AI researcher quits: Potential for abuse too great

Renowned AI researcher Joe Redmon developed a widely used image analysis AI. Now he is stopping work on it because he fears abuse by the military and a surveillance machine.

Since the atomic bomb at the latest, it has been clear how quickly a powerful and potentially useful technology can become a threat. It's a similar story with artificial intelligence: image analysis AIs can recognize cats - or monitor people. The same AI technology that unlocks millions of smartphones every day is used by China for mass surveillance of its own population.

With its successes in recent years, AI research has increasingly become the target of criticism, both from the public and from within the industry. The AI equivalent of the atomic bomb has not yet been invented, but the possibilities for image manipulation and mass surveillance have alarmed critics worldwide.

At the important AI conference NeurIPS, for example, all submitted papers must in future include a section on the possible consequences of the research.

AI researcher fears misuse of his research

When the NeurIPS conference announced this new rule, it made American AI researcher Joe Redmon prick up his ears. Redmon is the maker of the widely used "You only look once" (YOLO) image analysis AI.

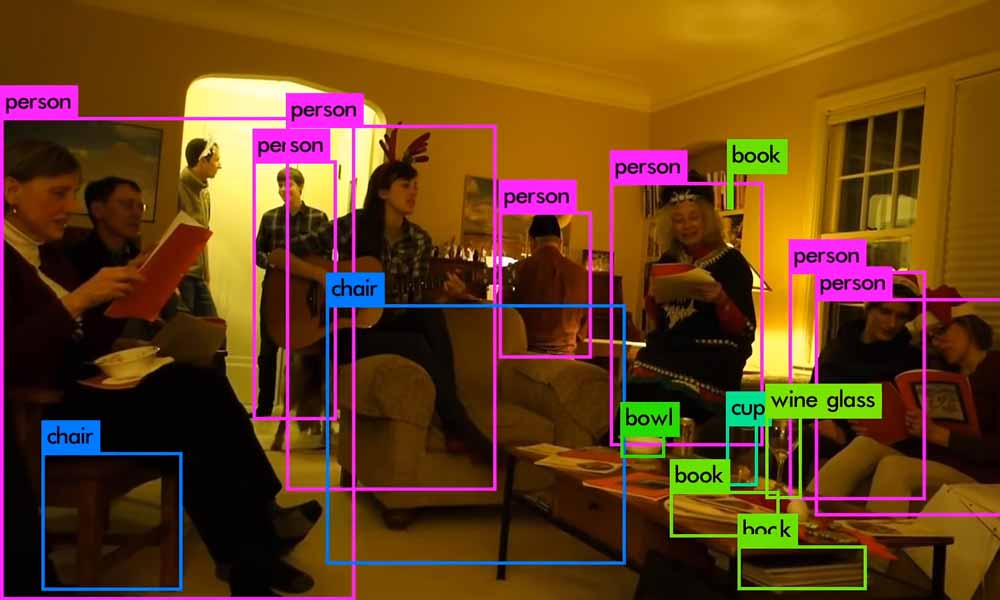

YOLO is an open-source image analysis AI that recognizes objects in real time. It is accurate and does not need a supercomputer: the current version, YOLOv3, can analyze 30 images per second on an Nvidia Titan X.

Redmon released the first version of YOLO back in 2016, and version 3 was then released in spring 2018.

As early as a 2018 scientific publication, Redmon struck an unusually critical tone: "What are we going to do with these detectors now that we have them? A lot of the people doing this research are at Google and Facebook. I guess at least we know the technology is in good hands and definitely not being used to collect your personal data and sell it... wait, are you saying that's exactly what it's being used for? Oh."

Another funder is the military, which "has never done bad things," Redmon continues sarcastically. Researchers, he says, have a responsibility to consider and mitigate potential harm from their work. "We owe the world that much," Redmon writes.

Redmon quits AI research over ethical concerns

About a year later, Redmon is now drawing drastic conclusions from the ongoing trend of using image analysis AIs for surveillance: he is halting his research on AI technology. There will be no fourth version of YOLO - at least not from Redmon directly.

He loved working on his image analysis AI, but he can no longer ignore the military applications and invasions of privacy, Redmon writes on Twitter.

I stopped doing CV research because I saw the impact my work was having. I loved the work but the military applications and privacy concerns eventually became impossible to ignore. https://t.co/DMa6evaQZr

- Joe Redmon (@pjreddie) February 20, 2020

Among experts, his decision has met with understanding, but also with criticism. Other AI researchers see him as the perfect candidate for further research, because the AI industry could do with a scientist with an ethical compass. At the same time, Redmon's retirement will certainly not hold back AI research.
