Gemini 2.5 Deep Think achieves gold at the world’s leading student programming competition

A new version of Gemini 2.5 Deep Think has reached gold-medal performance at this year's International Collegiate Programming Contest (ICPC) World Finals, and it even solved a problem that no human team managed to solve.

The model, an advanced variant within Google's Gemini family, competed at the ICPC World Finals 2025, the most prestigious university-level programming competition.

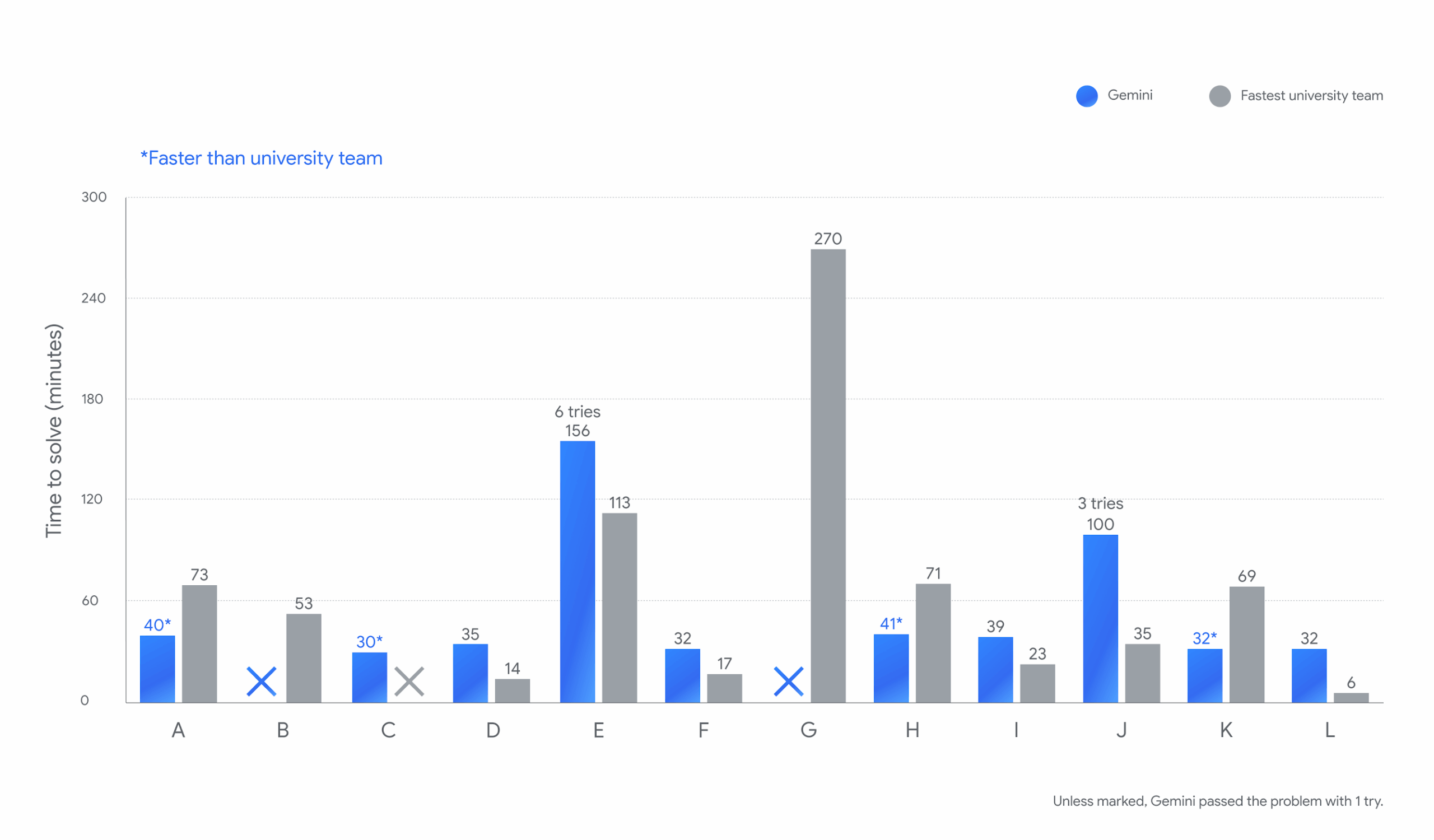

The final took place on September 4, 2025, in Baku, Azerbaijan. Out of nearly 3,000 universities across more than 100 countries, 139 teams qualified. They were given five hours to solve 12 algorithmic problems as quickly and precisely as possible. Only error-free submissions counted, and time penalties determined rankings.

AI solves 10 out of 12 challenges

Gemini Deep Think competed under official ICPC rules in an online environment, starting 10 minutes after the human teams began. It solved 8 problems within 45 minutes and 2 more within 3 hours. Overall, it logged a combined solution time of 677 minutes for 10 problems - a result that would have placed second among the human competitors.
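To make the ranking logic concrete: under standard ICPC rules, teams are ordered first by the number of problems solved and then by total time, where each solved problem contributes the minute of its accepted submission plus 20 penalty minutes for every rejected attempt that preceded it. The following is a minimal sketch of that ordering. The team names and submission data are hypothetical and are not taken from the actual 2025 scoreboard.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

PENALTY_PER_REJECTED = 20  # minutes added per rejected attempt on a solved problem


@dataclass
class Team:
    name: str
    # problem id -> (minute of accepted submission, rejected attempts before it)
    solved: Dict[str, Tuple[int, int]] = field(default_factory=dict)

    @property
    def problems_solved(self) -> int:
        return len(self.solved)

    @property
    def total_time(self) -> int:
        # Only solved problems contribute time and penalties.
        return sum(
            minute + PENALTY_PER_REJECTED * rejected
            for minute, rejected in self.solved.values()
        )


def rank(teams: List[Team]) -> List[Team]:
    # More problems solved ranks higher; ties are broken by lower total time.
    return sorted(teams, key=lambda t: (-t.problems_solved, t.total_time))


# Hypothetical example data, for illustration only.
team_a = Team("A", {"P1": (30, 0), "P2": (100, 1)})  # 30 + 100 + 20 = 150
team_b = Team("B", {"P1": (20, 0)})                  # one problem, 20 minutes
team_c = Team("C", {"P1": (40, 0), "P2": (90, 0)})   # 40 + 90 = 130

standings = rank([team_a, team_b, team_c])
print([t.name for t in standings])  # ['C', 'A', 'B']
```

Team B has the lowest total time but ranks last because it solved fewer problems, which is why a 10-problem result with 677 minutes can sit ahead of faster teams that solved fewer tasks.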

Its most striking result came on Problem C, which no human team managed to solve. The task involved maximizing the efficiency of a liquid distribution system through a configurable network of pipes and reservoirs, requiring the system to find the fastest filling strategy among virtually endless possibilities.

However, the model failed to crack Problem B and Problem G.

Advances in abstract reasoning

According to Google DeepMind, the system's success stems from progress in pre-training, post-training, advanced reinforcement learning methods, multi-step logical reasoning, and parallel problem-solving. During reinforcement learning, the model was trained on extremely difficult programming tasks. Multiple Gemini agents generated different solution candidates, tested them in virtual terminals, and improved them iteratively.
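The generate, test, and refine pattern described above can be illustrated with a toy loop. This is only a sketch of the general idea and not Google's implementation: the "agents" here are hypothetical stand-in functions, the task is a trivial addition problem, and the agents run one after another rather than in parallel.

```python
from typing import Callable, List, Optional, Tuple

TestCase = Tuple[tuple, object]              # (arguments, expected result)
Candidate = Callable[..., object]            # a proposed solution
Agent = Callable[[List[TestCase]], Candidate]  # maps failed tests to a new candidate


def failures(candidate: Candidate, tests: List[TestCase]) -> List[TestCase]:
    """Run a candidate on every test and return the cases it gets wrong."""
    failed = []
    for args, expected in tests:
        try:
            if candidate(*args) != expected:
                failed.append((args, expected))
        except Exception:
            failed.append((args, expected))
    return failed


def solve(agents: List[Agent], tests: List[TestCase],
          max_rounds: int = 3) -> Optional[Candidate]:
    """Each round, every agent proposes a candidate informed by its last failures."""
    feedback: List[List[TestCase]] = [[] for _ in agents]
    for _ in range(max_rounds):
        for i, agent in enumerate(agents):
            candidate = agent(feedback[i])
            failed = failures(candidate, tests)
            if not failed:
                return candidate  # first candidate that passes every test
            feedback[i] = failed
    return None


# Hypothetical stand-in agents for the toy task "return a + b".
def careless_agent(failed: List[TestCase]) -> Candidate:
    return lambda a, b: a - b  # never learns from feedback


def learning_agent(failed: List[TestCase]) -> Candidate:
    if not failed:
        return lambda a, b: max(a, b)  # flawed first attempt
    return lambda a, b: a + b          # revised attempt after seeing failures


tests = [((1, 2), 3), ((0, 0), 0), ((5, -5), 0)]
solution = solve([careless_agent, learning_agent], tests)
print(solution is not None and solution(2, 3) == 5)  # True
```

In this toy run, both first-round candidates fail, and the second agent's revised candidate passes in round two. A real system would replace the stand-in agents with model calls and the test list with actual compilation and execution in a sandbox.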

Just weeks earlier, a version of Gemini 2.5 Deep Think won gold at the International Mathematical Olympiad (IMO). Google says the new system builds directly on that variant.

A lighter version of Gemini 2.5 Deep Think is already available through the Gemini app to users with a Google AI Ultra plan. The IMO version has been shared with select testers. Looking ahead, Google expects future releases could evolve into far more powerful coding assistants for areas like software development, logistics, and scientific research.

A symbol of Google's ambitions

Dr. Bill Poucher, Executive Director of the ICPC, called this a turning point: "Gemini successfully joining this arena, and achieving gold-level results, marks a key moment in defining the AI tools and academic standards needed for the next generation."

He said the ability to break down complex problems, design multi-step strategies, and implement them correctly matters not only in programming but also in fields like drug discovery, chip design, and research more broadly.

Google DeepMind adds that Gemini could act as a collaborative partner for developers. In theory, a joint effort combining the best human and AI solutions would have solved all 12 competition problems.

AI Olympiads heat up

Google's breakthrough comes shortly after a major success by OpenAI. In August 2025, an OpenAI system earned gold at the International Olympiad in Informatics (IOI), the top global programming contest for high school students. Its performance was topped by only 5 out of 330 human participants.

OpenAI researcher Noam Brown emphasized that, like Gemini, this result came from a general-purpose reasoning model rather than a highly customized system. That marked rapid progress from 2024, when OpenAI narrowly missed a medal using a heavily specialized model.

Google's gold-level performance at ICPC - a university-level competition - raises the stakes yet again. It's likely that OpenAI also entered one of its systems in the contest.
