BioGPT is a Microsoft language model trained for biomedical tasks

Key Points

- BioGPT is a transformer language model with 347 million parameters, trained on biomedical literature. It is based on GPT-2 medium.

- In benchmarks, the small, domain-specific model outperforms much larger general and scientific language models on biomedical questions.

- According to Microsoft Research, BioGPT performs at the level of human experts on the tasks tested.

BioGPT is a transformer language model developed by Microsoft researchers and optimized for answering biomedical questions. According to Microsoft Research, the model performs at the level of human experts.

The Microsoft research team trained BioGPT using only domain-specific data. They collected all articles updated before 2021 from PubMed, an English-language, text-based meta-database of biomedical articles. This yielded a total of 15 million items with both titles and abstracts, which the team used to train BioGPT.
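The selection step described above can be sketched in a few lines of Python. This is only an illustration of the filtering idea, not the team's actual pipeline: the record layout (`title`, `abstract`, `updated`) and the function name are hypothetical.

```python
from datetime import date

def select_training_items(records, cutoff=date(2021, 1, 1)):
    """Keep records with a non-empty title and abstract, updated before the cutoff.

    The dict keys used here are hypothetical, not PubMed's real schema.
    """
    selected = []
    for rec in records:
        title = (rec.get("title") or "").strip()
        abstract = (rec.get("abstract") or "").strip()
        updated = rec.get("updated")
        if title and abstract and updated is not None and updated < cutoff:
            selected.append({"title": title, "abstract": abstract})
    return selected

# Toy records: only the first one passes both conditions.
sample = [
    {"title": "A", "abstract": "Some text", "updated": date(2020, 5, 1)},
    {"title": "B", "abstract": "", "updated": date(2020, 5, 1)},
    {"title": "C", "abstract": "Some text", "updated": date(2021, 3, 1)},
]
kept = select_training_items(sample)
print(len(kept))
```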

For pre-training, the research team used eight Nvidia V100 GPUs for 200,000 steps, while fine-tuning was done with a single Nvidia V100 GPU for 32 steps.

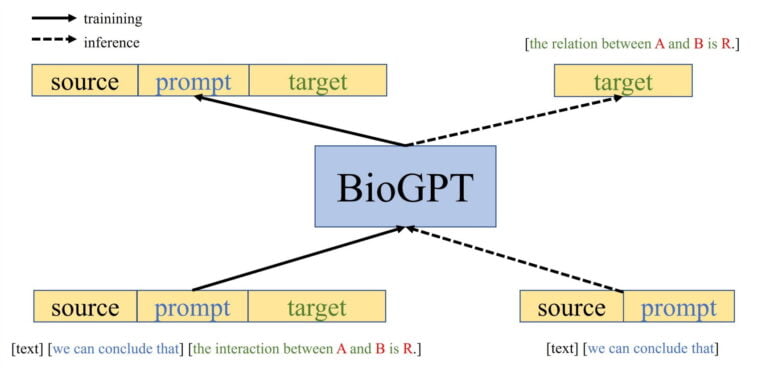

The team then fine-tuned the pre-trained, GPT-2-based model with 347 million parameters for downstream tasks: end-to-end relation extraction, text generation, question answering, and document classification.
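For a rough sense of where a parameter count in this range comes from, the size of a GPT-2 medium-style model can be estimated from its architecture: 24 layers, a hidden size of 1,024, and 1,024 positions. The sketch below assumes tied input and output embeddings. The vocabulary size of 42,384 used for the biomedical model is an assumption that does not come from this article.

```python
def gpt2_style_param_count(vocab_size, n_layers=24, d_model=1024, n_positions=1024):
    """Approximate parameter count of a GPT-2 style decoder with tied embeddings."""
    token_emb = vocab_size * d_model
    pos_emb = n_positions * d_model
    # Per block: attention (4*d^2 + 4*d), MLP (8*d^2 + 5*d), two layer norms (4*d).
    per_block = 12 * d_model**2 + 13 * d_model
    final_layer_norm = 2 * d_model
    return token_emb + pos_emb + n_layers * per_block + final_layer_norm

gpt2_medium = gpt2_style_param_count(vocab_size=50257)   # original GPT-2 vocabulary
biomedical = gpt2_style_param_count(vocab_size=42384)    # assumed in-domain vocabulary

print(f"GPT-2 medium: {gpt2_medium / 1e6:.0f}M")
print(f"Smaller in-domain vocabulary: {biomedical / 1e6:.0f}M")
```

Under these assumptions, the only difference between the two counts is the size of the token embedding table. The transformer blocks themselves are identical.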

BioGPT outperforms larger, more general language models in biomedical language benchmarks

According to the research team, BioGPT outperforms comparable models based on Google BERT on biomedical question answering and end-to-end relation extraction benchmarks. BioGPT also demonstrated superior text generation capabilities for biomedical content compared to a generally trained GPT-2.

Input:

The treatment of COVID-19

Output GPT-2:

The treatment of COVID-19 is similar to that of COVID-20 and COVID-22, in which COVID-19 is treated with a variety of ionic, dopaminergic and serotonergic agents. In addition to its effects on COVID-19, the use of COVID-19 has been suggested to exert effects on the function of other dopamine neurons, including dopamine D2 receptors, D3 receptors and serotonin transporters. These effects include attenuation of the increase in dopamine release induced by the central nervous system stimulant drug amphetamine and its metabolites.

Output BioGPT:

The treatment of COVID-19 with remdesivir (remdesivir for COVID-19) has been approved by the US Food and Drug Administration (FDA) for the treatment of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) infection in patients with confirmed SARS-CoV-2 infection and is currently being evaluated in clinical trials for the treatment of COVID-19 in patients with confirmed SARS-CoV-2 infection, as well as in patients with suspected or confirmed SARS-CoV-2 infection.

Sample prompt from the paper

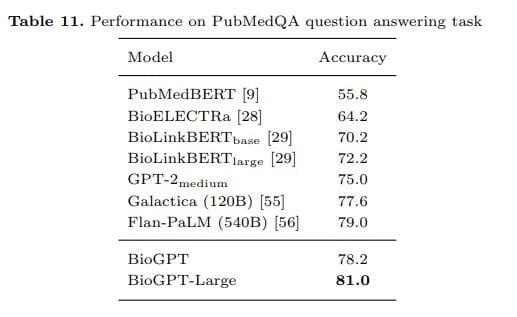

The researchers also scaled their GPT-2 medium-based model up to GPT-2 XL, the largest GPT-2 architecture available. The fine-tuned BioGPT-Large, with a still comparatively small 1.5 billion parameters, achieved 81 percent accuracy on the PubMedQA benchmark (BioGPT: 78.2 percent), outperforming much larger language models such as Flan-PaLM (540 billion parameters, 79.0 percent) and Meta's Galactica (120 billion parameters, 77.6 percent).
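To put these figures in proportion, the following sketch computes how much larger each competing model is than the 1.5-billion-parameter model, and how far it trails on PubMedQA. It uses only the numbers quoted above; the variable names are illustrative.

```python
# (model, parameters in billions, PubMedQA accuracy in percent), as quoted in the text
baseline = ("BioGPT-Large", 1.5, 81.0)
competitors = [
    ("Flan-PaLM", 540.0, 79.0),
    ("Galactica", 120.0, 77.6),
]

size_ratio = {}
accuracy_gap = {}
for name, params_b, accuracy in competitors:
    # How many times larger the competitor is than the baseline.
    size_ratio[name] = params_b / baseline[1]
    # Percentage points by which the baseline leads.
    accuracy_gap[name] = round(baseline[2] - accuracy, 1)

for name in size_ratio:
    print(f"{name}: {size_ratio[name]:.0f}x the parameters, "
          f"{accuracy_gap[name]} points behind {baseline[0]}")
```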

BioGPT shows that small but domain-specific language models can compete with much larger, general language models within their domain. One advantage of smaller models is that they require less data and training.

The opposite approach is to fine-tune large language models like PaLM for specific domains. Google recently demonstrated with Med-PaLM that a large language model can be efficiently optimized for specific domains with specialized prompts and high-quality data. Med-PaLM can answer lay medical questions at the level of human experts.

BioGPT for human expert-level biomedical content generation

According to Microsoft Research, BioGPT performs at the level of human experts on the tasks tested in the benchmarks and outperforms other general and scientific language models. BioGPT can help researchers gain new insights, for example, in drug development or clinical therapies, Microsoft said.

In the future, the team plans to experiment with further scaling to train an even larger version of BioGPT, optimized with even more biomedical data and for even more tasks. The code for the BioGPT model presented here is available on GitHub.
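For readers who want to try the model, here is a minimal sketch of one possible way to run it. It assumes the `microsoft/biogpt` checkpoint on the Hugging Face Hub and the `transformers` library (with `torch` and `sacremoses` installed), none of which are mentioned in this article. The helper name `complete` is hypothetical.

```python
def complete(prompt, generator=None, max_new_tokens=60):
    """Return a text continuation for a biomedical prompt.

    `generator` can be any callable with the text-generation pipeline
    interface. If omitted, a pipeline for the assumed checkpoint is built.
    """
    if generator is None:
        # Imported lazily so this file loads without transformers installed.
        from transformers import pipeline
        generator = pipeline("text-generation", model="microsoft/biogpt")
    outputs = generator(prompt, max_new_tokens=max_new_tokens, do_sample=False)
    return outputs[0]["generated_text"]

# Example call (downloads the model on first use):
# print(complete("The treatment of COVID-19"))
```

Passing the generator in as an argument keeps the helper easy to test with a stand-in and lets a loaded pipeline be reused across calls.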
