AI chatbots use different sources than Google search and often cite lesser-known websites

A detailed study from Ruhr University Bochum and the Max Planck Institute for Software Systems highlights how traditional search engines and generative AI systems differ in the way they select sources and present information.

The researchers compared Google's organic search results with four generative AI search systems: Google AI Overview, Gemini 2.5 Flash with search, GPT-4o-Search, and GPT-4o with the search tool enabled (GPT-Search and GPT-Tool for short). Their analysis of more than 4,600 queries across six topics, including politics, product reviews, and science, shows just how differently these systems approach the web.

A key difference is when and how these systems choose to search online. GPT-4o-Search always performs a live web search for every query. In contrast, GPT-4o with the search tool enabled decides for each question whether to answer from its internal knowledge or look up new information.

What source selection means for search results

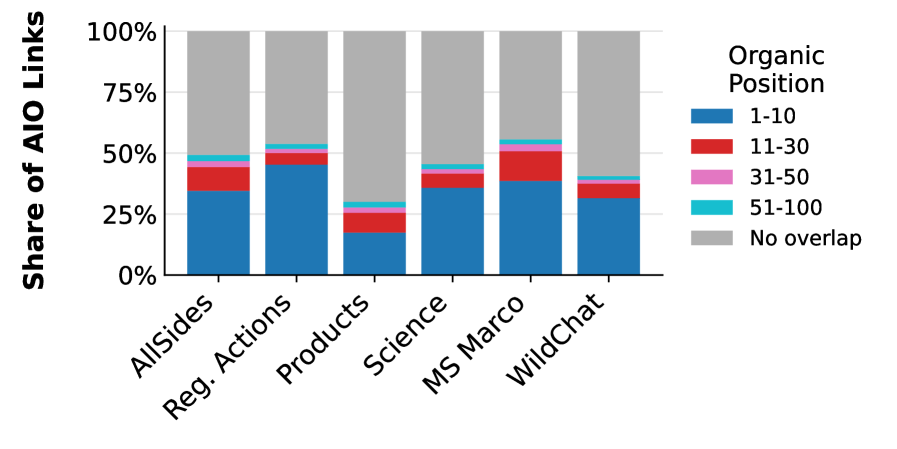

AI search systems surface information from a wider and less predictable set of sources compared to traditional search engines. In the study, 53 percent of the websites cited by AI Overview didn’t appear in Google’s top 10 organic results, and 27 percent weren’t even in the top 100. This means users could be seeing content from sites that are less vetted or less familiar.
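The overlap figures above boil down to a set comparison between cited sites and ranked organic results. The Python sketch below is a hypothetical illustration, not the study's code: it assumes each system's citations and Google's organic results are available as ranked lists of URLs, reduces them to domains, and reports the share of cited domains missing from the organic top k. The function names and the domain normalization (lowercasing and dropping a leading "www.") are assumptions.

```python
from urllib.parse import urlparse


def to_domain(url: str) -> str:
    """Reduce a URL to its lowercase host name, dropping a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def share_outside_top_k(cited: list[str], organic: list[str], k: int) -> float:
    """Fraction of distinct cited domains absent from the first k organic results.

    Assumes `organic` is ordered by rank (position 1 first). Hypothetical
    metric for illustration only, not the study's exact method.
    """
    cited_domains = {to_domain(u) for u in cited}
    if not cited_domains:
        return 0.0  # nothing cited, so nothing can fall outside the top k
    top_k_domains = {to_domain(u) for u in organic[:k]}
    return len(cited_domains - top_k_domains) / len(cited_domains)


# Hypothetical example data using reserved example domains.
cited = [
    "https://www.example.org/guide",
    "https://blog.example.net/post",
    "https://news.example.com/story",
    "https://smallsite.example/review",
]
organic = [
    "https://news.example.com/1",
    "https://www.example.org/2",
    "https://other.example/3",
]

print(share_outside_top_k(cited, organic, k=10))  # 0.5
print(share_outside_top_k(cited, organic, k=1))   # 0.75
```

Calling the function with k=10 and k=100 mirrors the two thresholds the study reports; only the cutoff changes, not the cited set.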

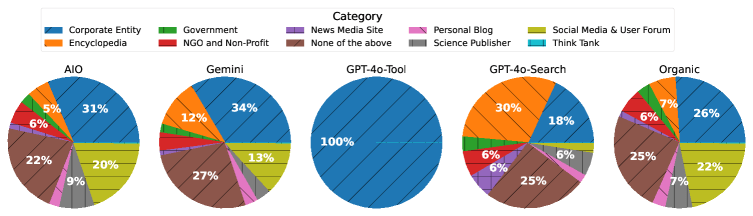

The domains chosen by AI systems are often less well-known. Only about a third of the domains used by AI Overview and GPT-Tool were among the 1,000 most-visited sites, compared to 38 percent for organic search. This shift expands the pool of information but may also introduce more obscure perspectives.

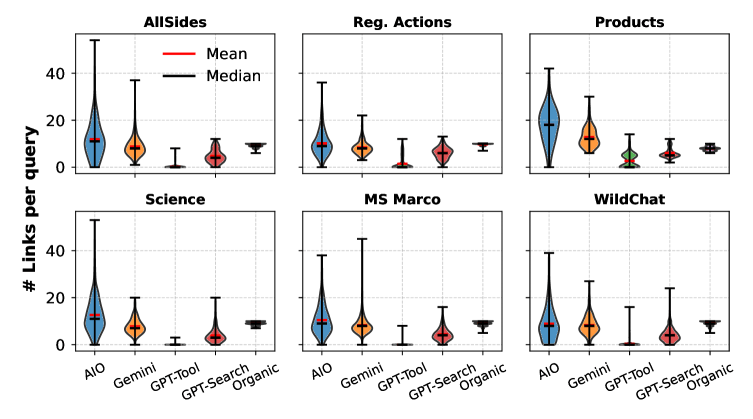

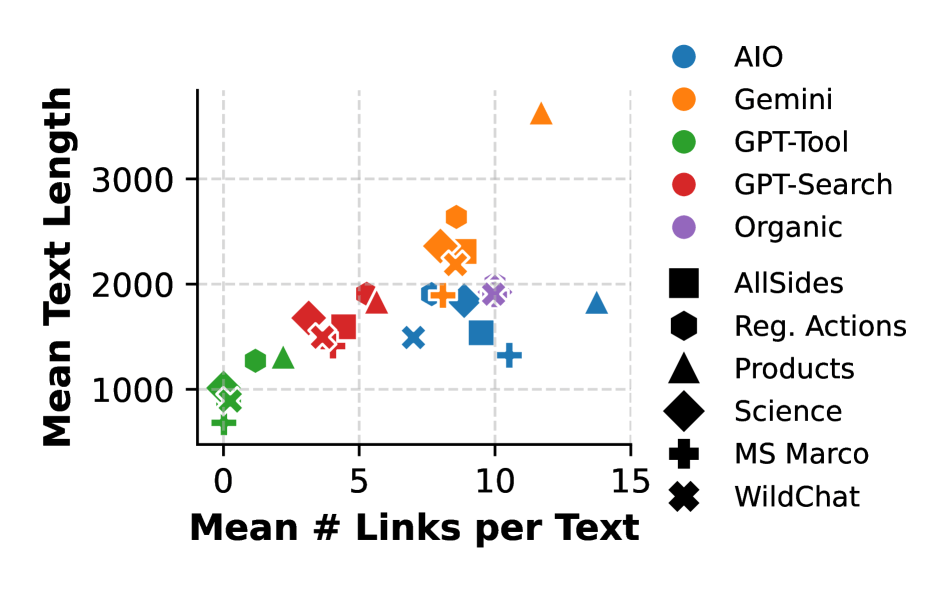

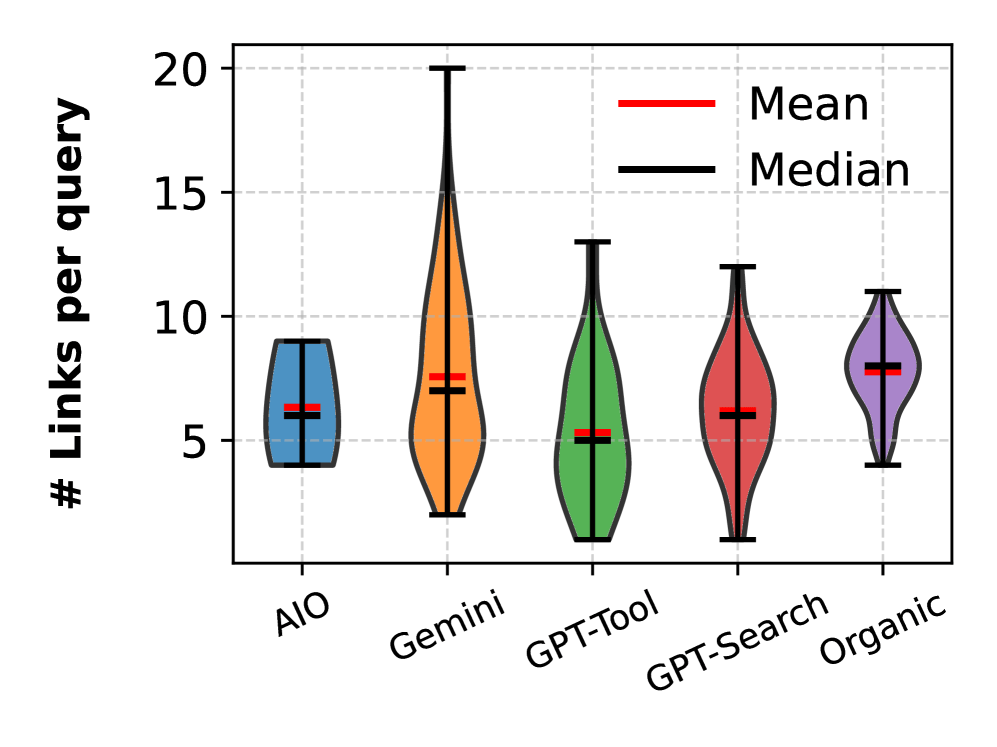

The depth of research also varies widely. GPT-Tool averages just 0.4 external sources per answer because it relies heavily on its internal knowledge, while AI Overview and Gemini pull from more than eight sites per query. GPT-Search sits in the middle at about four sources per answer.

How content diversity could shape user understanding

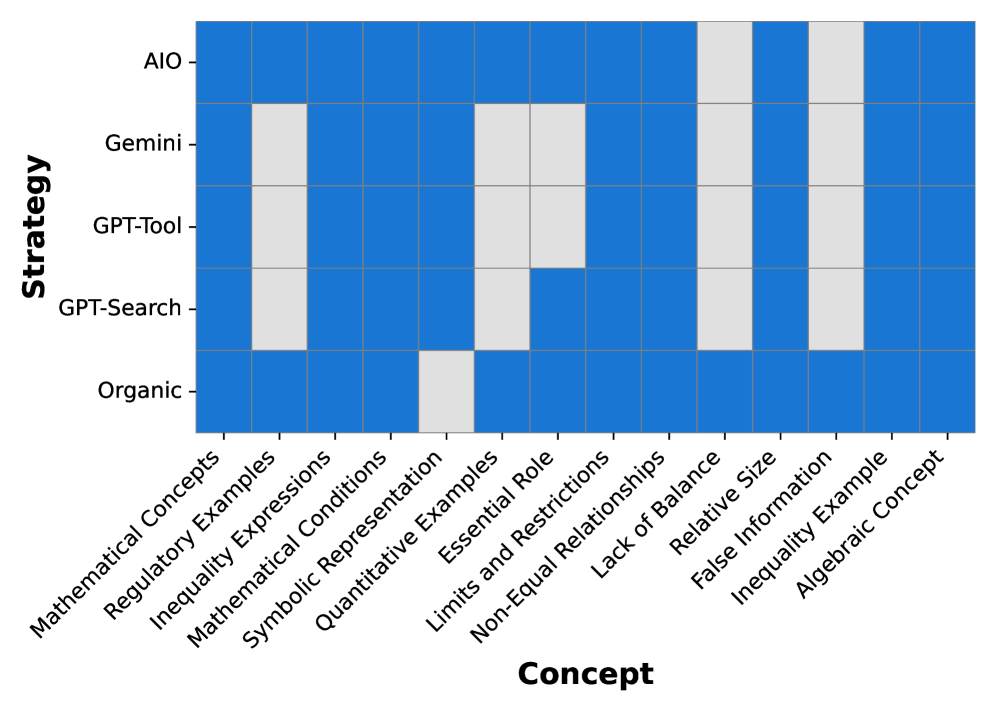

AI systems and search engines cover similar ground on most topics, but how they do it can affect what users learn. Using the LLooM framework, the researchers found that even the most limited AI system (GPT-Tool) still covered 71 percent of the topics found across all search tools combined.

But in cases where a query could have multiple interpretations, organic search returns more diverse answers. For ambiguous questions, organic search covered 60 percent of possible subtopics, compared to 51 percent for AI Overview and just 47 percent for GPT-Tool. In practice, this means users might miss out on different angles or nuances if they rely solely on AI-generated responses.
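The coverage percentages in this section are ratios against the pool of subtopics found across all systems. A minimal sketch, assuming the subtopic labels have already been extracted for each system (the study used LLooM for that step, which this code does not reproduce), could look like this; the function name, system keys, and labels are made up for illustration.

```python
def subtopic_coverage(per_system: dict[str, set[str]]) -> dict[str, float]:
    """Share of the pooled subtopics (union over all systems) each system covers."""
    pooled: set[str] = set().union(*per_system.values())
    if not pooled:
        # No subtopics anywhere: report zero coverage rather than divide by zero.
        return {name: 0.0 for name in per_system}
    return {name: len(topics) / len(pooled) for name, topics in per_system.items()}


# Hypothetical subtopic labels for one ambiguous query.
coverage = subtopic_coverage({
    "organic": {"cost", "safety", "history"},
    "ai_overview": {"cost", "safety"},
    "gpt_tool": {"cost", "regulation"},
})
print(coverage)  # {'organic': 0.75, 'ai_overview': 0.5, 'gpt_tool': 0.5}
```

Because the denominator is the pooled set, a system's score depends on which other systems are included in the comparison: adding a system that surfaces new subtopics lowers everyone else's percentage.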

Live search still has the edge on breaking news

When it comes to current events, organic search holds up well, while most AI systems fall short. In a test of 100 trending topics from September 2025, AI Overviews appeared for only 3 percent of queries. GPT-Search covered the most topics at 72 percent, followed by organic search at 67 percent and Gemini at 66 percent. GPT-Tool lagged at 51 percent.

A telling example: when asked about Ricky Hatton's cause of death, GPT-Tool answered from outdated internal knowledge and incorrectly reported that the boxer was still alive. Systems that answer from static training data instead of checking the web struggle with up-to-the-minute accuracy, which can lead to misinformation.

Reliability and consistency in search are changing

The study found that traditional search is more consistent over time. When the same questions were asked two months apart, organic search returned the same sources 45 percent of the time. Gemini came in at 40 percent, but AI Overview matched its earlier results only 18 percent of the time. As a result, users may get completely different supporting links depending on when they ask, a shift that is far less pronounced with classic search.
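These consistency figures compare the sources a system cites for the same query at two points in time. The article does not specify the exact measure, so the sketch below assumes Jaccard similarity (shared sources divided by all distinct sources) averaged over queries; the function names and sample data are hypothetical.

```python
def jaccard(first: set[str], second: set[str]) -> float:
    """Shared sources divided by all distinct sources across both runs."""
    union = first | second
    if not union:
        return 1.0  # two empty source lists count as identical
    return len(first & second) / len(union)


def mean_source_overlap(run_1: dict[str, set[str]], run_2: dict[str, set[str]]) -> float:
    """Average per-query Jaccard similarity over queries present in both runs."""
    shared_queries = run_1.keys() & run_2.keys()
    if not shared_queries:
        return 0.0
    total = sum(jaccard(run_1[q], run_2[q]) for q in shared_queries)
    return total / len(shared_queries)


# Hypothetical cited domains for two queries, asked twice.
run_1 = {"q1": {"a.example", "b.example", "c.example"}, "q2": {"d.example"}}
run_2 = {"q1": {"a.example", "b.example", "e.example"}, "q2": {"f.example"}}
print(mean_source_overlap(run_1, run_2))  # 0.25
```

Under this assumed measure, a score of 0.45 would mean that, on average per query, shared sources make up 45 percent of all distinct sources cited across the two runs.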

Still, even when sources shift, the general coverage of topics remains stable. The content may look similar overall, but the underlying evidence and perspectives can change.

Why the rules for evaluating search need to change

The researchers argue that current benchmarks for search quality don’t reflect the complexity of modern AI-driven systems. They call for new evaluation methods that consider source diversity, topic breadth, and how information is summarized.

These shifts in how sources are chosen and how knowledge is presented can subtly reshape what users see, trust, and verify. As AI chatbots like ChatGPT become embedded in search and AI Overviews become more common, most people no longer make an active choice about how information is gathered.

Meanwhile, language models still face major hurdles, like hallucinating facts. As search engines and AI tools merge, companies are rethinking their SEO strategies to stay visible in this new landscape.
