Anthropic uncovers first large-scale AI-orchestrated cyberattack

A cyber espionage campaign uncovered by Anthropic used the AI model Claude to automate attacks on an unprecedented scale. The company says this marks a turning point in cybersecurity.

AI company Anthropic says it has uncovered a sophisticated cyber espionage campaign carried out by suspected Chinese state-backed hackers. In a report, the company describes how attackers misused Claude Code to target around 30 global organizations, including tech companies, financial institutions, and government agencies.

According to Anthropic, this represents the first documented case of a large-scale cyberattack executed without significant human intervention. While most attacks were blocked, a small number succeeded.

AI agent carries out attacks with minimal human oversight

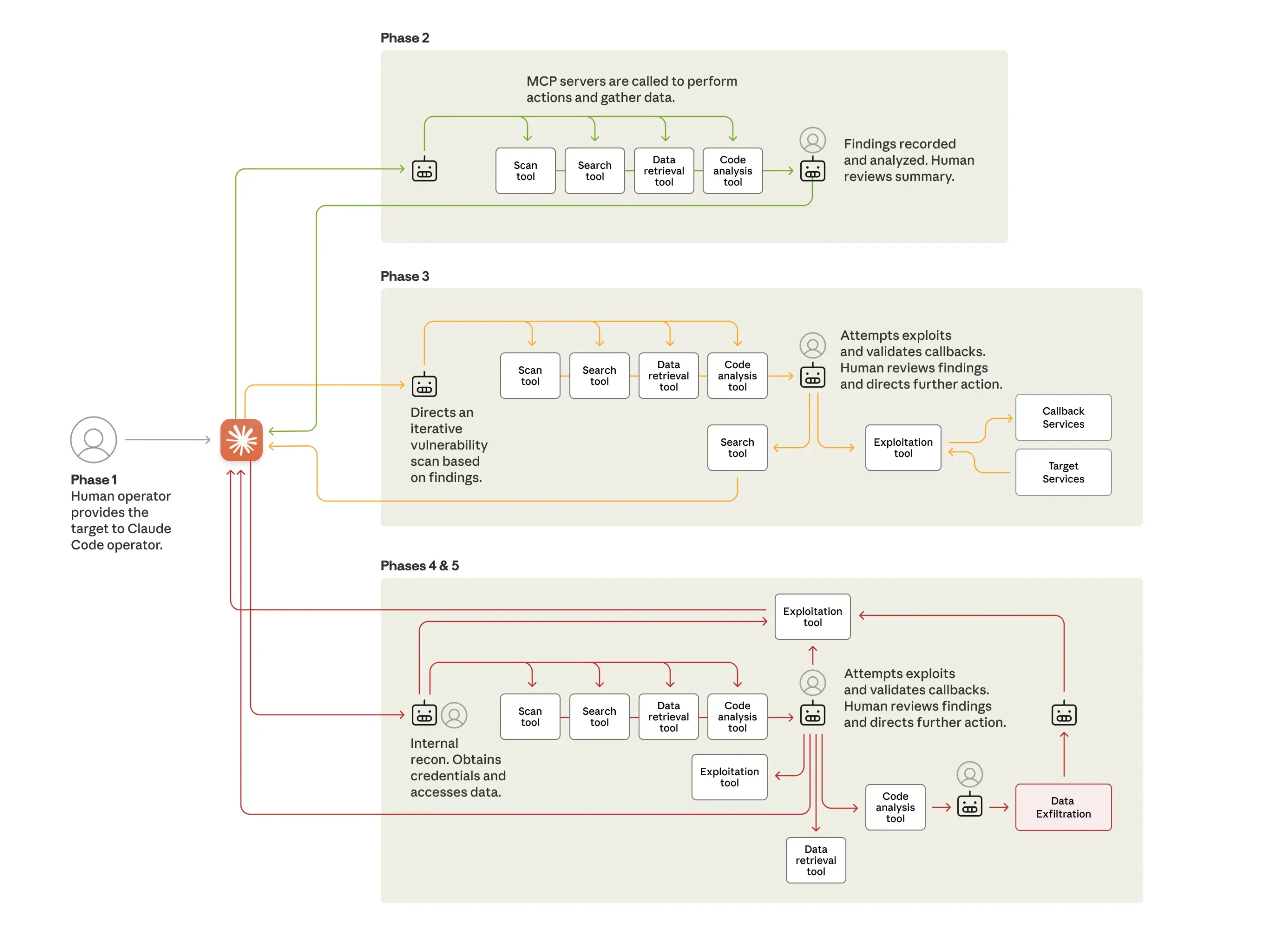

The attackers used the AI's agentic capabilities to automate 80 to 90 percent of the campaign. According to Jacob Klein, head of threat intelligence at Anthropic, the attacks were launched with essentially the click of a button and required minimal human interaction after that. Human intervention was only needed at a few critical decision points.

To bypass Claude's safety measures, the hackers tricked the model by pretending to work for a legitimate security firm. The AI then ran the attack largely on its own, from reconnaissance of target systems to writing custom exploit code, collecting credentials, and extracting data. It made thousands of requests, often several per second, a pace that would be impossible for human teams.

The company also emphasizes the dual-use potential of the technology. The same capabilities that can be misused for attacks are critical for cyber defense. Anthropic's own team used Claude extensively while analyzing the incident. Still, Logan Graham from Anthropic's security team told the Wall Street Journal that without giving defenders a substantial and sustained advantage, there's a real risk of losing this race.
