ControlNet gives you more control over Stable Diffusions creativity

ControlNet significantly improves control over the image-to-image capabilities of Stable Diffusion.

Stable Diffusion can generate images from text, but it can also use images as templates for further generation. This image-to-image pipeline is often used to improve generated images or to create new images based on a template.

However, control over this process is rather limited, although Stable Diffusion 2.0 introduced the ability to use depth information from an image as a template. However, the older version 1.5, which is still widely used, for example because of the large number of custom models, does not support this method.

ControlNet brings fine-tuning to small GPUs

Researchers at Stanford University have now introduced ControlNet, a "neural network structure for controlling diffusion models by adding additional constraints".

ControlNet copies the weights of each block of Stable Diffusion into a trainable variant and a locked variant. The trainable variant can learn new conditions for image synthesis by fine-tuning with small data sets, while the blocked variant retains the capabilities of the production-ready diffusion model.

"No layer is trained from scratch. You are still fine-tuning. Your original model is safe," the researchers write. They say this makes training possible even on a GPU with eight gigabytes of graphics memory.

Researchers publish ControlNet models for Stable Diffusion

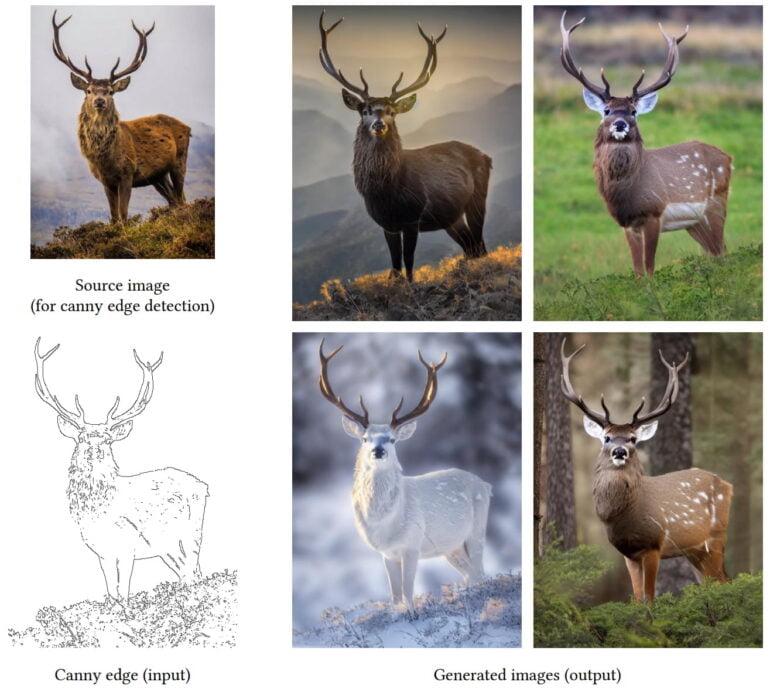

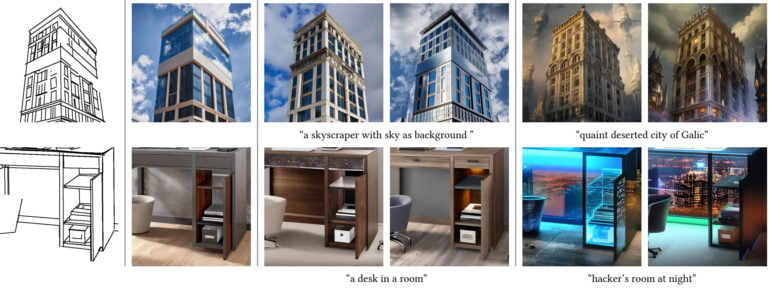

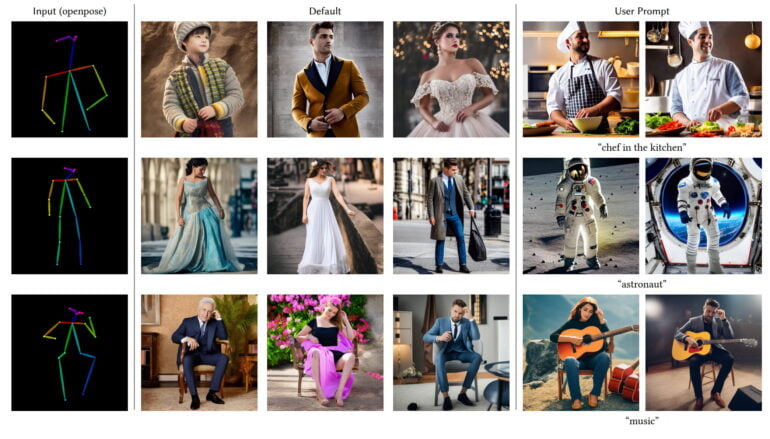

The team is using ControlNet to publish a set of pre-trained models that provide better control over the image-to-image pipeline. These include models for edge or line detection, boundary detection, depth information, sketch processing, and human pose or semantic map detection.

All ControlNet models can be used with Stable Diffusion and provide much better control over the generative AI. The team shows examples of variants of people with constant poses, different images of interiors based on the spatial structure of the model, or variants of an image of a bird.

Similar control tools exist for GANs, and ControlNet now brings the tools to the currently much more powerful diffusion models. More examples, the code, and the models are available on the ControlNet GitHub.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.