New method generates better prompts from images

A new method learns prompts from an image, which then can be used to reproduce similar concepts in Stable Diffusion.

Whether DALL-E 2, Midjourney or Stable Diffusion: All current generative image models are controlled by text input, so-called prompts. Since the outcome of generative AI models depends heavily on the formulation of these prompts, "prompt engineering" has become a discipline in its own right in the AI community. The goal of prompt engineering is to find prompts that produce repeatable results, that can be mixed with other prompts, and that ideally work for other models as well.

In addition to such text prompts, the AI models can also be controlled by so-called "soft prompts". These are text embeddings automatically derived from the network, i.e. numerical values that do not directly correspond to human terms. Because soft prompts are derived directly from the network, they produce very precise results for certain synthesis tasks, but cannot be applied to other models.

"Learned hard prompts" require far fewer tokens

In a new paper titled "Hard Prompts Made Easy" (PEZ), researchers at the University of Maryland and New York University show how to combine the precision of soft prompts with the portability and mixability of text prompts-or hard prompts, as the paper calls them.

Finding hard prompts is a "special alchemy" that requires a high degree of intuition or a lot of trial and error, the paper says. Soft prompts, on the other hand, are not human-readable and are a mathematical science.

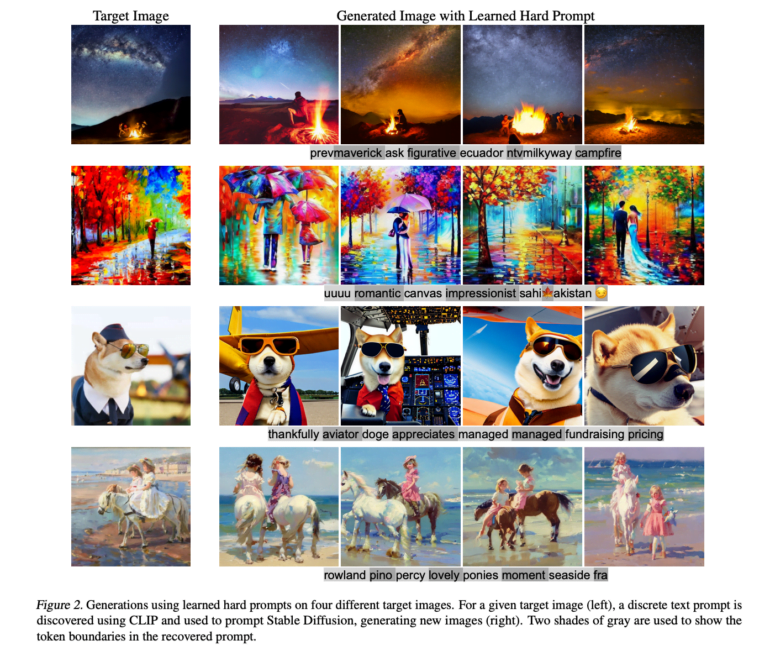

With PEZ, on the other hand, the team presents a method that automatically learns hard prompts from an input image. PEZ optimizes the accuracy of the learned prompts during the learning process using CLIP. "Learned hard prompts combine the ease and automation of soft prompts with the portability, flexibility, and simplicity of hard prompts," the article states.

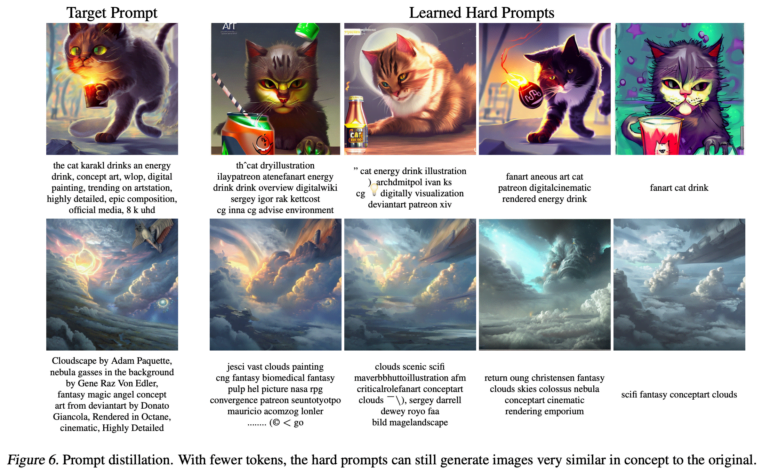

The result: PEZ is a tool for generating text prompts that reliably produces specific image styles, objects, and appearances without the need for complex "alchemy," and is on a par with highly specialized soft-prompt generation tools, they state. The team also uses "prompt distillation" to reduce the number of tokens required.

According to the researchers, the hard prompts learned can be applied well to other models.

Method tested on multiple datasets

The team shows examples of prompts in four training datasets, namely LAION-5B (mixed), Celeb-A (celebrity portraits), MS COCO (photography), and Lexica.art (AI images). To generate the AI images, they used Stable Diffusion.

Although there are differences between the original and generated images, the learned hard prompts produce clearly discernible variations of the original objects, compositions, or styles. In the future, the researchers expect to make further improvements in automated prompt discovery and control of generative AI models such as Stable Diffusion.

Although our work makes progress toward prompt optimization, the community’s understanding of language model embedding space is still in its infancy, and a deeper understanding of the geometry of the embedding space will likely enable even stronger prompt optimization in the future.

From the paper

However, the search for such efficient prompts could be used in the future to reproduce images in AI models. Recent efforts on AI reproduction of training images have already shown that diffusion models have a reproduction problem.

The team also shows that PEZ can be used to discover text prompts for large language models, for example, to make them more suitable for classification tasks.

More information and the code is available at GitHub.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.