Luma AI turns videos into 3D models for almost no money

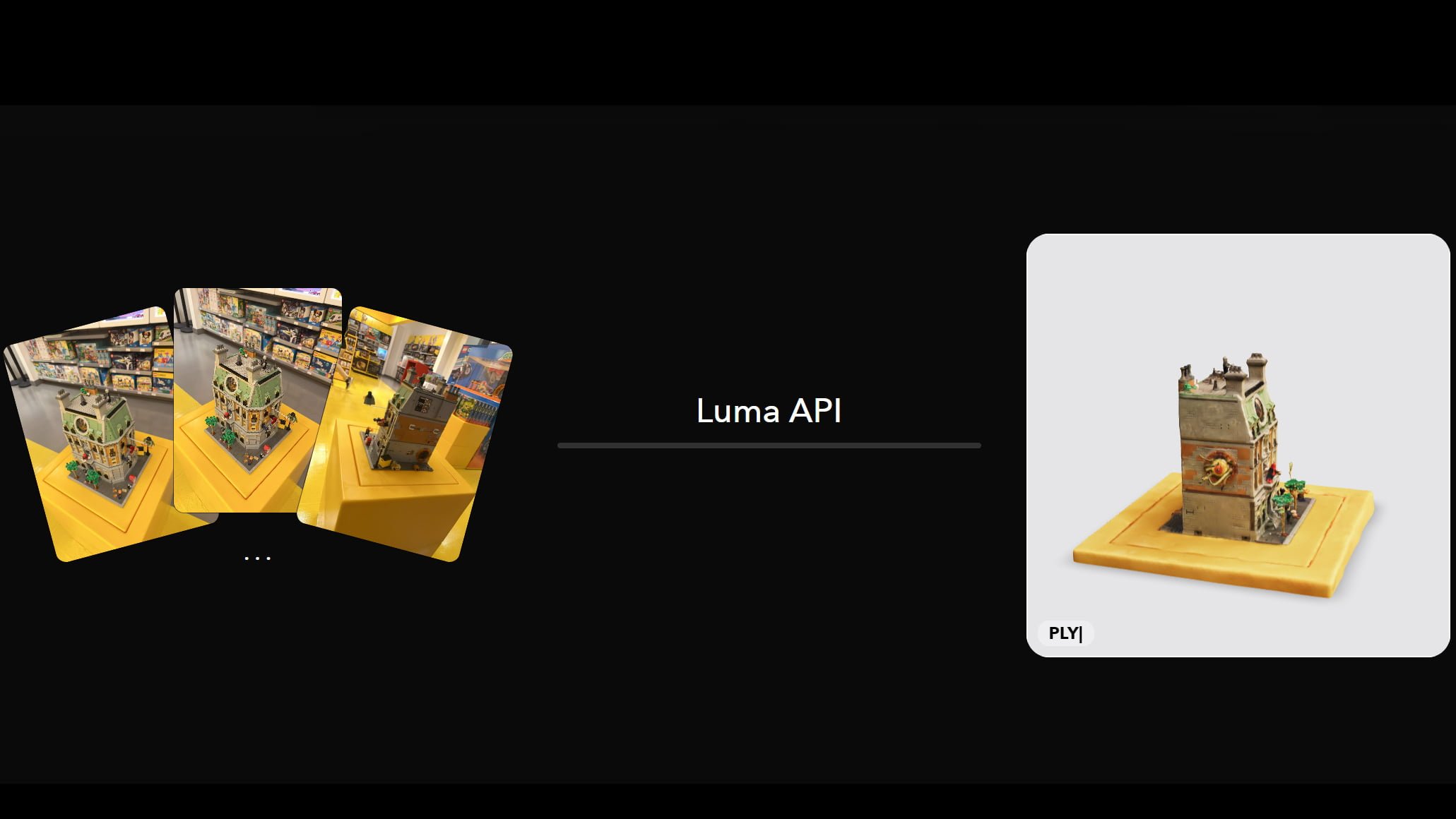

California-based Luma AI aims to democratize 3D scenes. Following the release of a text-to-3D tool, the startup has now introduced an API for converting images and videos into 3D models.

According to Luma AI, creating a 3D model currently costs between $60 and $1,500 and takes two to ten weeks. The startup wants to make the process much faster and cheaper: down to 30 minutes and as little as $1 per model.

To that end, the company, which recently raised $20 million in a Series A funding round, has unveiled a video-to-3D API. "Our mission is to democratize 3D. Hollywood quality, photorealistic 3D for everyone," says the 14-employee startup, based in Palo Alto, California.

> We live in a three dimensional world. Our perception and memories are of three dimensional beings. Yet there is no way to capture and revisit those moments like you are there. All we have to show for it are these 2D slices we call photos. It's time for that to change.
>
> Luma AI

Developers can use the new API to create a 3D model from a video or a set of images, and end users can do the same through a web interface. Luma provides some guidelines for getting the best possible result.

Luma AI's tips for creating the perfect 3D scene

The scene or object should be filmed while walking around it in a circle, as slowly as possible to avoid motion blur, with HDR mode turned off.

It is best to shoot from three heights: at chest height looking straight ahead, above the head looking slightly down toward the center of the scene, and at knee height looking slightly up. Optionally, the scene can be shot with a wide-angle or fisheye (ultra-wide-angle) lens; in that case, you need to specify the lens type when uploading.

Uploads are limited to 5 GB, whether a single video file or a zip archive of images; see the Luma AI documentation for details. From the video or images, Luma creates a NeRF (neural radiance field), a 3D scene representation learned by a neural network.
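For anyone preparing uploads with a script, the size limit can be checked locally before uploading. The following is a minimal Python sketch under stated assumptions, not part of Luma's API or tooling: the helper names `zip_images` and `fits_upload_limit` are hypothetical, the accepted image extensions are a guess, and treating "5 GB" as 5 × 10^9 bytes is an assumption (Luma's documentation may define the limit differently).

```python
import zipfile
from pathlib import Path

# Assumption: "5 GB" is read as 5 * 10**9 bytes; Luma may define it differently.
MAX_UPLOAD_BYTES = 5 * 10**9

# Assumption: which image formats Luma accepts is not stated in the article.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def zip_images(image_dir: Path, archive_path: Path) -> Path:
    """Pack the images directly inside image_dir into one zip archive.

    Hypothetical helper for illustration only; not part of any Luma tooling.
    """
    # Collect the file list first, so the new archive is never packed into itself.
    images = sorted(
        p for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    if not images:
        raise ValueError(f"no images found in {image_dir}")
    # JPEG and PNG are already compressed, so storing them avoids wasted CPU time.
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_STORED) as zf:
        for image in images:
            zf.write(image, arcname=image.name)
    return archive_path


def fits_upload_limit(path: Path, limit: int = MAX_UPLOAD_BYTES) -> bool:
    """Return True if the file (video or zip archive) is within the size limit."""
    return path.stat().st_size <= limit
```

For example, `fits_upload_limit(zip_images(Path("photos"), Path("photos.zip")))` returns `False` when the packed photos would exceed the assumed limit, which signals that the capture should be trimmed before uploading.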

In my short test, Luma's video-to-3D function proved usable, although the central object came out somewhat low-resolution. That said, the bouquet of flowers in the transparent glass is a relatively complex object, and additional shots would probably improve the quality.

After processing on Luma's servers, the 3D scene can be downloaded as an object (glTF, USDZ, OBJ) or as a mesh scene (PLY), shared via a link, or embedded as an iframe. The example of a chair below shows how this works with a simple object.

Get started with some free credits

To use the new 3D tool, you need to register (registration is free). Ten credits, equivalent to ten 3D scenes, are available for testing. Luma AI promises improvements in quality and processing time in the near future.

In December 2022, Luma AI launched a text-to-3D interface called Imagine 3D, with similarly impressive results. That tool is currently available only via a waiting list.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
