Google CEO Sundar Pichai sidesteps chatbot search question

Key Points

- According to CEO Sundar Pichai, Google is continuing to integrate large language models into its search engine.

- Pichai doesn't signal a clear commitment to disruptive chatbot search like Microsoft's Bing Chat or OpenAI's ChatGPT plugins.

- Instead, Google will likely try to create a smooth transition to search with more AI content. There are good reasons for this.

Google CEO Sundar Pichai says his company is integrating large language models into Google Search. Is this news?

Whether chatbots could become the new form of internet search is one of the most heated debates about large language models. It is heated because the criticisms still weigh heavily: chatbot answers can be outdated and inaccurate, they are random and hard to cite, and they lack clear ownership.

And a chatbot search would really shake up the content ecosystem on the web. Who will create content if it ends up in a chatbot with no return in terms of clicks and visibility?

Language models in Google search aren't new

In an interview with the Wall Street Journal, Google CEO Sundar Pichai now confirms that the search company is continuing to work on integrating large language models into its search product.

"Will people be able to ask questions to Google and engage with LLMs in the context of search? Absolutely," says Pichai.

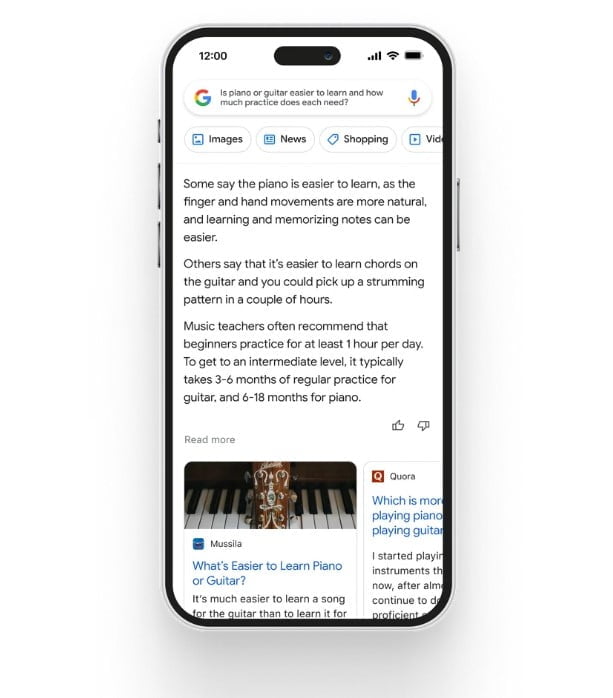

In addition to its Bard chatbot, which, like ChatGPT, answers knowledge questions and points to Google Search and other sources, Google has also announced "AI features" for search: AI-generated direct answers to a search query that appear before the typical list of web pages.

Pichai's careful choice of words seems relevant: Users should engage with language models in the "context of search," not for search or as a search replacement. As an example, Pichai cites a search interface Google is working on that lets users ask follow-up questions to their original question.

At first, this doesn't sound new: Google has been using language models in the "context of search" for years, such as autocomplete in the search box or automatically generated follow-up questions that are answered with content from web pages directly in the search results. So what can we take away from Pichai's statement?

Google likely wants to make the transition to AI search as smooth as possible

Pichai's statement does not sound like a clear commitment to disruptive chatbot search of the kind that OpenAI is pushing with its browser plugin for ChatGPT, Microsoft with Bing's "Sydney" chatbot, or Perplexity.ai.

Rather, Google may be trying to avoid disrupting the existing search paradigm and instead provide a smooth transition to new search models that rely more heavily on AI-generated content or AI methods.

The "AI features" mentioned above are an example of this, and the issues mentioned at the beginning are a good reason why: Google repeatedly emphasizes that it also has an ethical responsibility.

But Google's business model is a much weightier argument for my "go slow" thesis: Current Google search is a cash cow, while monetizing chatbot search is a big unknown. Direct AI answers also consume more computing power, making them more expensive.

So Google will keep trying to respond to innovative products like ChatGPT so that it is not left behind, but without disrupting its own business model. That is a difficult balancing act. Google is rumored to be working with DeepMind on a GPT-4 competitor in the "Gemini" project.
