This is a multimodal AI wearable coded by GPT-4

Project Ring combines language and image models in an AI wearable that looks at the world through a camera and comments on it with an AI-generated voice.

The simplest way to describe Project Ring is as a wearable Google Lens with voice controls. According to developer Mina Fahmi, the project aims to "demonstrate low-friction interactions which blend physical & digital information between humans & AI."

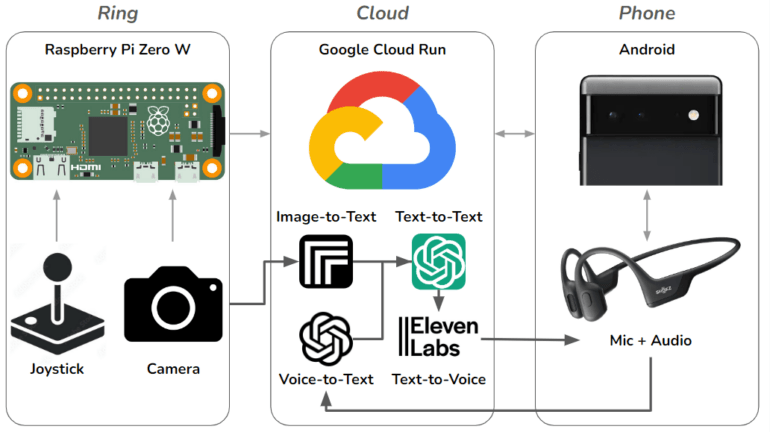

To that end, Fahmi built a wrist-worn minicomputer with a camera and joystick that can visually analyze the environment in real time using an image-to-text model hosted on Replicate, describe it in text, and comment on it via ChatGPT.

https://www.youtube.com/watch?v=g34I8JRRzDE

The text is converted to speech using Eleven Labs' text-to-speech service, and the resulting audio is transmitted to bone-conduction headphones via an Android smartphone. The headphones have a built-in microphone that lets the user speak back to the wearable, for example to ask questions about the environment. The user's voice is converted to text using OpenAI's Whisper so that ChatGPT can chime in with some more or less intelligent remarks. All data is processed in Google Cloud.
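The flow described above can be sketched roughly as follows. This is a minimal illustration, not Fahmi's code: the class `RingPipeline`, its method names, and the stub functions are all hypothetical, and the real project calls hosted services (Replicate, ChatGPT, Eleven Labs, Whisper) where this sketch uses local stand-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class RingPipeline:
    """Hypothetical wiring of the stages named in the article."""
    caption_image: Callable[[bytes], str]        # image-to-text (Replicate in the article)
    chat: Callable[[List[Dict[str, str]]], str]  # language model (ChatGPT in the article)
    synthesize: Callable[[str], bytes]           # text-to-speech (Eleven Labs in the article)
    transcribe: Callable[[bytes], str]           # speech-to-text (Whisper in the article)
    history: List[Dict[str, str]] = field(default_factory=list)

    def _reply(self, user_text: str) -> bytes:
        # Keep a running conversation so follow-up questions have context.
        self.history.append({"role": "user", "content": user_text})
        answer = self.chat(self.history)
        self.history.append({"role": "assistant", "content": answer})
        # The audio would be sent to the headphones via the phone.
        return self.synthesize(answer)

    def on_camera_frame(self, image: bytes) -> bytes:
        # Camera path: image -> caption -> comment -> speech.
        caption = self.caption_image(image)
        return self._reply(f"The camera sees: {caption}")

    def on_user_speech(self, audio: bytes) -> bytes:
        # Microphone path: speech -> text -> comment -> speech.
        return self._reply(self.transcribe(audio))


# Local stand-ins for the hosted services (assumptions for illustration only).
def fake_caption(image: bytes) -> str:
    return "a red bicycle leaning against a wall"


def fake_chat(history: List[Dict[str, str]]) -> str:
    return f"Reply to: {history[-1]['content']}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


pipeline = RingPipeline(fake_caption, fake_chat, fake_tts, fake_stt)
```

Passing each stage in as a callable keeps the sketch independent of any particular provider; swapping a stub for a real API client would not change the two entry points.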

"Project Ring feels like having a curious friend on your shoulder - one who sees the world as you do and unobtrusively whispers thoughts in your ear," Fahmi writes.

GPT-4 writes code for the wearable, but "it wasn't easy"

Fahmi says he did all the code generation for Project Ring with GPT-4. In total, the language model generated about 750 lines of code. That includes a Python script for the Raspberry Pi, a cloud application, a website, and an Android application.

Fahmi has a background in coding, but he says that he hasn't written any code in years. He believes his project shows that it is possible, though not easy, to use GPT-4 to program complete software prototypes.

His coding background helped him get GPT-4 to make corrections in the right places or to assemble the code correctly by copying and pasting. According to Fahmi, GPT-4 occasionally lost context and needed to be realigned. The code was also unstable and neither performant nor production-ready, he said.

Despite these shortcomings, AI "may be capable of automating a large majority of coding tasks in a relatively short time period," Fahmi speculates.

Fahmi works on AI and human-computer interfaces at Meta, and previously worked at CTRL-Labs, the startup Meta acquired in 2019. Meta is developing a wristband based on CTRL-Labs' technology, which reads electrical nerve signals at the wrist and translates them into precise computer commands in real time.
