Meta takes control of its AI future

A new AI chip from Meta is designed to speed up the execution of neural networks, and an upgraded supercomputer is designed to speed up the company's own AI research.

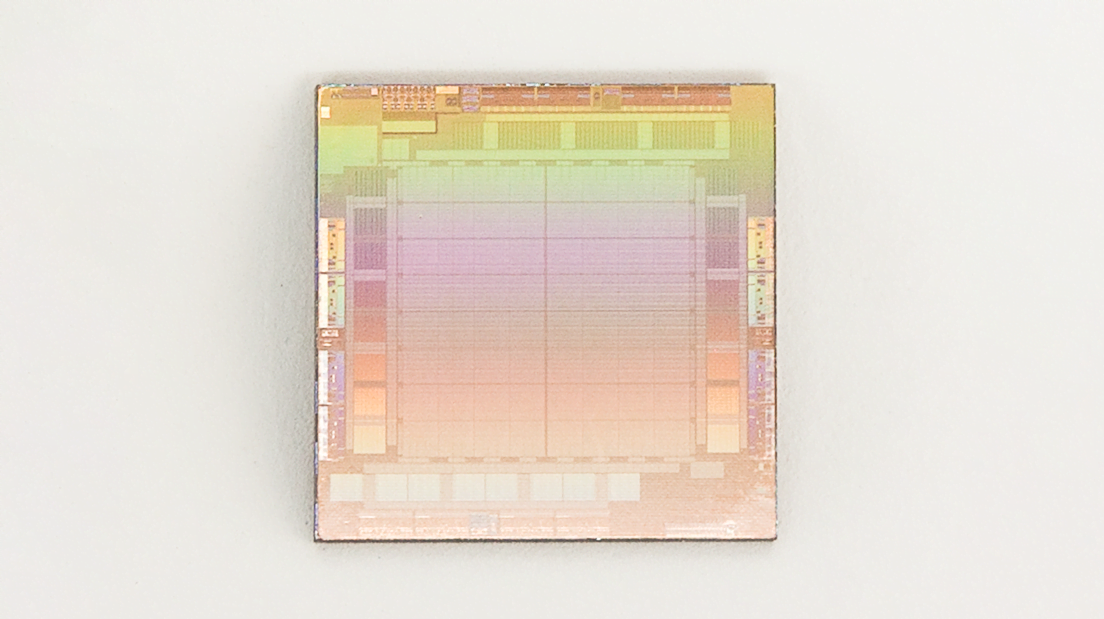

The "Meta Training and Inference Accelerator" (MTIA) is a new family of chips designed to make running neural networks, a process called inference, faster and cheaper. The chip is expected to be in use by 2025. For now, Meta still relies on Nvidia graphics cards in its data centers.

Like Google's Tensor Processing Units (TPUs), the MTIA is an application-specific integrated circuit (ASIC) optimized for the matrix multiplications and activation functions found in neural networks. According to Meta, the chip handles low- and medium-complexity AI models more efficiently than a GPU.
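To make "matrix multiplications and activation functions" concrete, here is a minimal Python sketch of a single fully connected layer, the kind of operation an inference accelerator is built to run quickly. It uses plain lists for clarity; the function names and the choice of ReLU as the activation are illustrative assumptions, not details of Meta's chip or software stack.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(weights: Matrix, x: Vector) -> Vector:
    """Matrix-vector product: the multiply-accumulate work that dominates inference."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU activation: negative values are clipped to zero."""
    return [max(0.0, value) for value in v]


def dense_layer(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """One fully connected layer: activation(W @ x + b)."""
    pre_activation = [z + b for z, b in zip(matvec(weights, x), bias)]
    return relu(pre_activation)


# Hypothetical toy weights and input, chosen only for illustration.
W = [[1.0, -2.0], [0.5, 0.5]]
b = [0.0, -1.0]
x = [1.0, 2.0]
print(dense_layer(W, b, x))  # [0.0, 0.5]
```

A real model stacks many such layers with far larger matrices, which is why chips like MTIA and TPUs dedicate most of their silicon to fast matrix arithmetic.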


Amazon also offers cloud access to its own AI chips: Trainium for training and Inferentia for inference. Microsoft is reportedly working with AMD on AI chips.

Research SuperCluster: Meta's RSC AI supercomputer reaches phase two

In January 2022, Meta unveiled the RSC AI supercomputer, which it said at the time would lay the groundwork for the Metaverse. When fully developed, it is supposed to be the fastest supercomputer specializing in AI calculations. The company has been building this infrastructure since 2020.

According to Meta, the RSC has now reached its second stage with 2,000 Nvidia DGX A100 systems containing a total of 16,000 Nvidia A100 GPUs. Meta puts the peak performance at five exaflops. The RSC will be used for AI research in a variety of areas, including generative AI.
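A quick back-of-the-envelope check shows how these figures fit together. Each DGX A100 system houses eight A100 GPUs. The sketch below assumes that the five-exaflop figure counts each A100 at Nvidia's published dense FP16/BF16 Tensor Core peak of 312 teraflops; the article does not say which precision Meta used.

```python
# Back-of-the-envelope check of the RSC phase-two figures quoted by Meta.
DGX_SYSTEMS = 2_000      # Nvidia DGX A100 systems (from the article)
GPUS_PER_DGX = 8         # each DGX A100 system contains eight A100 GPUs
A100_PEAK_TFLOPS = 312   # assumption: dense FP16/BF16 Tensor Core peak per A100

total_gpus = DGX_SYSTEMS * GPUS_PER_DGX
# 1 exaflop = 1,000,000 teraflops
peak_exaflops = total_gpus * A100_PEAK_TFLOPS / 1_000_000

print(total_gpus)     # 16000
print(peak_exaflops)  # 4.992
```

Under that assumption, the theoretical peak comes to just under five exaflops, matching Meta's figure.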

A unique feature of the RSC supercomputer is its ability to use data from Meta's production systems for AI training. Until now, Meta has relied primarily on open-source and publicly available datasets, although the company sits on a huge treasure trove of data.


RSC has already made its mark: Meta trained the LLaMA language model on it. LLaMA, partly published and partly leaked, became the engine of the open-source language model movement. According to Meta, training the largest LLaMA model took 21 days on 2,048 Nvidia A100 GPUs.
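The training figures above translate into a rough compute budget. This sketch assumes all 2,048 GPUs ran around the clock for the full 21 days, ignoring restarts and idle time, and compares that allocation with the 16,000 GPUs of RSC's second stage.

```python
# Rough compute budget implied by Meta's LLaMA training figures.
TRAINING_DAYS = 21      # from the article
TRAINING_GPUS = 2_048   # from the article
RSC_GPUS = 16_000       # RSC phase two, from the article

# Assumption: every GPU was busy 24 hours a day for the whole run.
gpu_hours = TRAINING_DAYS * 24 * TRAINING_GPUS
share_of_rsc = TRAINING_GPUS / RSC_GPUS

print(gpu_hours)              # 1032192
print(f"{share_of_rsc:.1%}")  # 12.8%
```

That is roughly one million A100 GPU-hours, using only about one eighth of the cluster's GPUs.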

Get the latest AI news from The Decoder.