Self-interviewing AI lets Reddit user talk to a ghostly version of themselves

Usually no one answers when you talk to yourself. AI can fill that gap.

In a thread on the r/singularity subreddit, a user presented his latest project: a large language model that he trained on 100 hours of self-conducted interviews. User UsedRow2531 can now interview a "locally run ghost" of himself.

He explained that the project involved a lot of tinkering and "many late nights talking to myself". He emphasized that the success of the project depended less on the method than on the quality of the interview corpus.

Method doesn't matter, corpus is critical

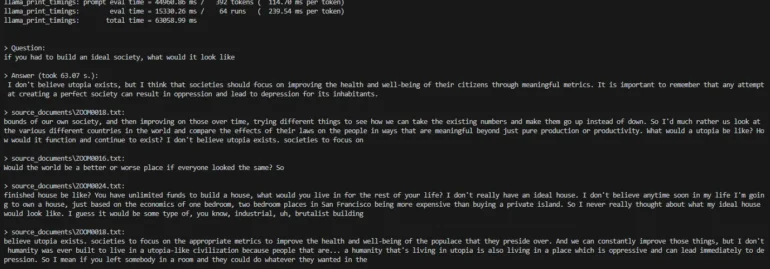

He chose Vicuna as the model after getting "strange results" with Meta's LLaMA 2 (llama-2-70b-hf). He was not sure whether this was due to his own faulty modifications or whether the model itself was not behaving as intended.

Conveniently, his model can also indicate the source, so it's possible to track which time period the answer came from.
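
The post does not explain how the source tracking works. One simple way such a feature could be built is to label every interview excerpt with the period it was recorded in and return that label together with the best-matching excerpt. The following is only a minimal sketch under that assumption; the `Chunk` class, the `best_source` function, the word-overlap matching, and the sample excerpts are all hypothetical and not taken from the project.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    period: str  # hypothetical label: when the interview excerpt was recorded
    text: str    # the excerpt itself


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def best_source(question, chunks):
    """Return the chunk that shares the most words with the question."""
    q = tokenize(question)
    return max(chunks, key=lambda c: len(q & tokenize(c.text)))


# Made-up excerpts, purely for illustration.
chunks = [
    Chunk("2015", "I spent most weekends repairing old motorcycles in the garage."),
    Chunk("2018", "I moved to a new city and started learning to cook."),
]

hit = best_source("What did you do with motorcycles?", chunks)
print(f"[{hit.period}] {hit.text}")
```

A real setup would more likely match excerpts with embeddings than with raw word overlap, but the idea of carrying a period label alongside each excerpt stays the same.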

Google, engineering, and many late nights talking to myself like an insane person. The method doesn't matter. The quality of the interview corpus does matter. With a massive data set, the how is meaningless. This was trained on me up until 2019. I have yet to feed it from 2019 to 2023. Purely a POC to prove to myself I am on the right path.

u/UsedRow2531 on Reddit

The post received a positive response from the Reddit community. Many users were impressed and asked for specific instructions on how to create a digital twin of themselves. Others asked about possible applications for similar projects, including the possibility of training an AI model on personal emails.

New tools for historians and investigators, too?

The discussion around UsedRow2531's post also led to philosophical musings about the future of autobiographies and personal records.

User u/Pelumo_64 speculated that a person could collect their thoughts in a digital diary, with text generated from audio recordings as needed. This text would then be converted into an interactive bot that could answer questions.

Questions could be about daily life or beliefs the person once held. According to u/Pelumo_64, such a bot could be a valuable tool for anthropologists, historians, and criminal investigators to delve deeper into people's thoughts and daily lives.

The "ghost" also hallucinates

However, the project reproduces the well-known shortcomings of large language models. These may not matter much in private use, but they suggest that professional biographers are unlikely to become obsolete any time soon.

Despite the carefully curated corpus, the AI model exhibited some unexpected behavior. Among other things, it repeatedly claimed that it was a genius and that it believed in the existence of aliens. u/UsedRow2531 had never said either of these things in his original interviews.

I never said that. I checked all of it. It gets bent out of shape when I correct it. The LLM somehow looks at what I said and decided it was a genius. It is not a genius, nor am I a genius. All very strange.

u/UsedRow2531 on Reddit

User u/More_Grocery-1858 offered a plausible explanation: the model works with statistical probabilities and has therefore learned which words and statements tend to occur together.

If people who say things similar to the original poster also tend to call themselves geniuses, the model will reproduce that association. This leads to the effect described, even though the thread starter never called himself a genius.
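
The commenter's explanation can be illustrated with a toy word-pair counter. This is a deliberately simplified sketch and not how Vicuna works internally; the `bigram_counts` function and both tiny corpora are invented for this example. The "background" sentences stand in for general training text, the "personal" sentences for the interview corpus.

```python
from collections import Counter, defaultdict


def bigram_counts(sentences):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


# Made-up stand-in for general training text.
background = [
    "i built it myself because i am a genius",
    "people say i am a genius",
    "i am a genius and i built it myself",
]

# Made-up stand-in for the personal interviews: the word "genius" never appears.
personal = [
    "i built it myself",
    "i am a tinkerer",
]

model = bigram_counts(background + personal)
following_a = model["a"]
print(following_a.most_common())
```

In this toy setup, "genius" follows "a" more often than "tinkerer" does, although only "tinkerer" comes from the personal sentences. The statistics of the background text outweigh what the person actually said.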

The concept is not really new. In late 2022, an artist had the idea to feed GPT-3 with diary entries from her childhood in order to get to know herself better. What does the inventor u/UsedRow2531 himself say about his project? "The future is going to be weird."
