Meta shows learning algorithm for multitasking AI

Meta introduces a learning algorithm that enables self-supervised learning for different modalities and tasks.

Most AI systems still learn in a supervised fashion from labeled data. But the successes of self-supervised learning in large-scale language models such as GPT-3 and, more recently, in image analysis systems such as Meta's SEER and Google's Vision Transformer show that AI systems that learn the structures of languages or images on their own are more flexible and powerful.

Until now, however, researchers have needed different training regimes for different modalities, and these regimes are incompatible with each other: GPT-3 completes sentences during training, a vision transformer segments images, and a speech recognition system predicts missing sounds.

These systems therefore work with different types of data: sometimes pixels, sometimes words, sometimes audio waveforms. This discrepancy means, for example, that research advances for one type of algorithm do not automatically transfer to another.

Meta's data2vec processes different modalities

Researchers at Meta AI Research are now introducing a single learning algorithm that can be used to train an AI system with images, text, or spoken language. The algorithm is called "data2vec," a reference to the word2vec algorithm, which is a foundation for developing large-scale language models. Data2vec uses one training process for all three modalities and, in benchmarks, matches the performance of existing alternatives built for individual modalities.

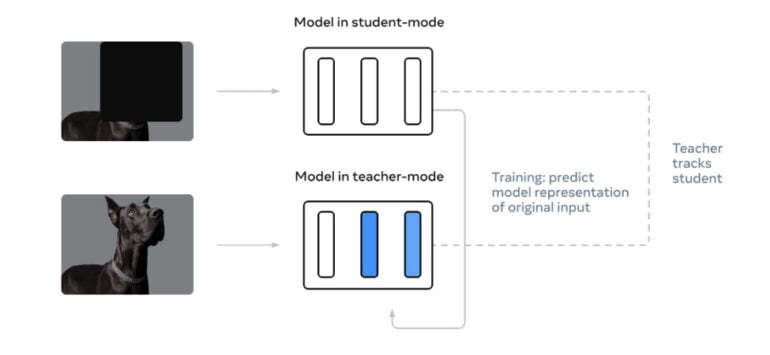

Data2vec circumvents the need for different training regimes for different modalities with two networks that work together. The so-called Teacher network first computes an internal representation of, say, a dog image. Such a representation is made up of the values the network's layers compute for that input. Then, the researchers mask a part of the dog image and let the Student network compute an internal representation of the masked image as well.

The Student network, however, must predict the representation of the full image. Rather than learning with more images, as the Vision Transformer does, the Student network learns to predict the representations of the Teacher network.

Because the Teacher network was able to process the complete image, the Student network gets better and better at predicting the Teacher's representations, and thus the complete images, over numerous further training passes.

Because the Student network does not directly predict the pixels in the image, but instead the representations of the Teacher network, from which pixels can then be reconstructed, the same method works for other data such as speech or text. This intermediate step of predicting representations makes data2vec suitable for all modalities.
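The Teacher and Student interplay can be illustrated with a deliberately tiny sketch. It is not Meta's implementation: the linear "encoder", the zeroed-out mask, the function names, and the rule that the Teacher slowly tracks the Student's weights are all assumptions made for illustration. The input is simply a list of numeric "patches", which could stand for image regions, audio frames, or word embeddings.

```python
import random


def mask(patches, masked_idx):
    """Zero out the masked patches (a stand-in for a learned mask token)."""
    return [[0.0] * len(p) if i in masked_idx else list(p)
            for i, p in enumerate(patches)]


def encode(patches, weights):
    """Toy encoder: add the mean patch as context, then apply a linear map.

    Returns the per-patch representations and the mixed inputs they came from.
    """
    dim = len(patches[0])
    context = [sum(p[j] for p in patches) / len(patches) for j in range(dim)]
    mixed = [[p[j] + context[j] for j in range(dim)] for p in patches]
    reps = [[sum(w * z for w, z in zip(row, m)) for row in weights] for m in mixed]
    return reps, mixed


def student_loss(student_reps, teacher_reps, masked_idx):
    """Mean squared error between the two networks at the masked positions."""
    errors = [(s - t) ** 2
              for i in masked_idx
              for s, t in zip(student_reps[i], teacher_reps[i])]
    return sum(errors) / len(errors)


def train_step(patches, masked_idx, student_w, teacher_w, lr=0.05, tau=0.99):
    """One update: the Student regresses onto the Teacher's representations."""
    teacher_reps, _ = encode(patches, teacher_w)  # Teacher sees the full input
    student_reps, mixed = encode(mask(patches, masked_idx), student_w)
    loss = student_loss(student_reps, teacher_reps, masked_idx)

    # Gradient descent on the squared error with respect to the Student weights.
    n = len(masked_idx) * len(student_w)
    for i in masked_idx:
        for r, row in enumerate(student_w):
            grad_scale = 2.0 * (student_reps[i][r] - teacher_reps[i][r]) / n
            for j in range(len(row)):
                row[j] -= lr * grad_scale * mixed[i][j]

    # Assumption of this sketch: the Teacher slowly tracks the Student.
    for t_row, s_row in zip(teacher_w, student_w):
        for j in range(len(t_row)):
            t_row[j] = tau * t_row[j] + (1.0 - tau) * s_row[j]
    return loss


if __name__ == "__main__":
    random.seed(0)
    patches = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(6)]
    student_w = [[random.uniform(-0.5, 0.5) for _ in range(4)] for _ in range(3)]
    teacher_w = [[random.uniform(-0.5, 0.5) for _ in range(4)] for _ in range(3)]
    for step in range(5):
        print(step, train_step(patches, {1, 4}, student_w, teacher_w))
```

Nothing in the loss refers to pixels, words, or waveforms: the target is always the Teacher's representation, which is why the same loop could in principle be reused across modalities. A real encoder would be far larger and would also need some way to tell masked positions apart, which this toy version omits.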

Data2vec aims to help AI learn more generally

At its core, the researchers are interested in learning more generally: "AI should be able to learn many different tasks, even those that are completely foreign to it. We want a machine to not only recognize the animals shown in its training data but also to be able to adapt to new creatures if we tell it what they look like," Meta's team writes. The researchers are following the vision of Meta's AI chief Yann LeCun, who in spring 2021 called self-supervised learning the "dark matter of intelligence."

Meta is not alone in its efforts to enable self-supervised learning for multiple modalities. In March 2021, DeepMind released Perceiver, a Transformer model that can process images, audio, video, and point-cloud data. However, it was trained in a supervised fashion.

Then, in August 2021, DeepMind introduced Perceiver IO, an improved variant that generates a variety of outputs from different input data, making it suitable for use in speech processing, image analysis, or understanding multimodal data such as video. However, Perceiver IO still uses different training regimes for different modalities.

Meta's researchers are now planning further improvements and may look to combine the data2vec learning method with DeepMind's Perceiver IO. Pre-trained data2vec models are available on Meta's GitHub.
