Researchers identify potential indicators of consciousness in AI systems

A new report explores consciousness in AI, drawing on scientific theories of consciousness to derive indicator properties that could suggest whether an AI system is likely to be conscious.

Artificial intelligence has made many advances in recent years and in some cases, justifiably or not, has raised questions about whether AI systems could become conscious. A famous recent example was Google employee Blake Lemoine and his claim that LaMDA was sentient. One obvious problem with his attribution of sentience was that it was based solely on the behavior of the model.

Now an interdisciplinary team of researchers has published a new report developing a "rigorous and empirically grounded approach to AI consciousness". Regarding cases such as Lemoine's, the team argues: "Our view is that, although this may not be so in other cases, a theory-heavy approach is necessary for AI. A theory-heavy approach is one that focuses on how systems work, rather than on whether they display forms of outward behavior that might be taken to be characteristic of conscious beings."

In the report, the team argues that assessing consciousness in AI is scientifically tractable and provides a list of "indicator properties".

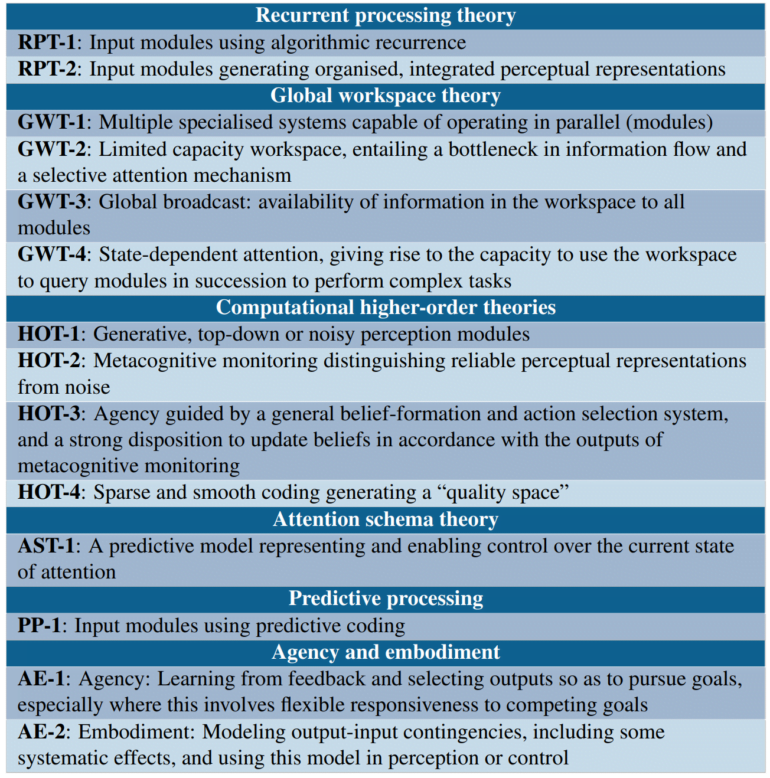

Neuroscientific theories of consciousness inform indicator properties

The researchers adopt a "computational functionalist" perspective, assuming consciousness depends on an AI system implementing certain computations. They then draw on neuroscientific theories that aim to identify the specific computational functions associated with consciousness in humans. These include recurrent processing theory, global workspace theory, higher-order theories such as perceptual reality monitoring, attention schema theory, predictive processing, and theories of agency and embodiment.

From these theories, the researchers derive a list of indicator properties, the presence of which makes an AI system more likely to be conscious. For example, the global workspace theory suggests indicators such as having multiple specialized subsystems and a limited-capacity workspace that allows information sharing between subsystems.

Evaluating existing systems according to indicator properties

The researchers use the indicator properties to evaluate some existing systems. They argue that large language models such as GPT-3 lack most of the features described by global workspace theory (GWT), while the Perceiver architecture comes closer but still does not satisfy all of the GWT indicators.

In terms of embodied AI agents, they examine Google's PaLM-E, a "virtual rodent", and Google DeepMind's AdA. According to the team, such AI agents satisfy the agency and embodiment conditions if their training leads them to learn appropriate models that relate actions to perceptions and rewards. The researchers see AdA as the most likely of the three systems to be embodied by their standards.

The team calls for robust methods for assessing the likelihood of consciousness in planned systems before they are built, and plans to continue and encourage further research in this area.
