Google Deepmind might have a solution to the AI image problem

Google Deepmind introduces SynthID, a way to reliably tag AI images and make them recognizable as such.

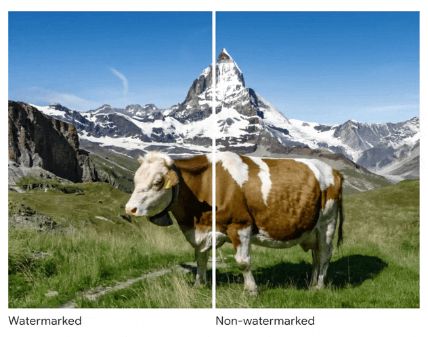

SynthID is a tool that adds an invisible watermark to AI-generated images. Machines can detect the watermark, but humans cannot see it.

To demonstrate this, Deepmind's blog shows images in which the watermarked half looks identical to the unwatermarked half.

According to Deepmind, the watermark is embedded "directly into the pixels" of the AI-generated image. This should make it robust to cropping, filtering, color changes, heavy compression, and other modifications typical of images shared on the web.

SynthID uses two deep learning models: one embeds the watermark and the other detects it. Deepmind is withholding details of how SynthID works to make it harder for attackers to develop methods that circumvent it.
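SynthID itself relies on two trained deep learning models whose design Deepmind has not published, so the following is not SynthID. It is a minimal sketch of a much older, non-learned idea, a keyed spread-spectrum watermark, meant only to illustrate what "visible to machines but not to humans" can mean. Each pixel is nudged up or down by a few brightness levels following a secret pseudo-random pattern, and a detector that knows the key finds the pattern by correlation. The function names, the key values, and the strength parameter are hypothetical. Unlike what Deepmind claims for SynthID, this toy is not robust to cropping or rotation, because both misalign the pattern.

```python
import random

# Toy keyed spread-spectrum watermark. NOT SynthID: SynthID uses two
# undisclosed deep learning models. All names and parameters are hypothetical.


def key_pattern(key: int, n: int) -> list[int]:
    """Pseudo-random +1/-1 pattern derived from a secret key."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(pixels: list[int], key: int, strength: int = 3) -> list[int]:
    """Shift each 8-bit pixel up or down by `strength`, clipped to 0..255."""
    pattern = key_pattern(key, len(pixels))
    return [min(255, max(0, p + strength * s)) for p, s in zip(pixels, pattern)]


def detection_score(pixels: list[int], key: int) -> float:
    """Correlate mean-centered pixels with the key pattern.

    Roughly equal to `strength` for a marked image and close to 0 otherwise.
    """
    pattern = key_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * s for p, s in zip(pixels, pattern)) / len(pixels)


if __name__ == "__main__":
    # Stand-in "image": 200,000 random 8-bit grayscale values.
    rng = random.Random(0)
    image = [rng.randrange(256) for _ in range(200_000)]
    marked = embed(image, key=42)

    # No pixel changes by more than `strength` (3 of 255 levels).
    print(max(abs(a - b) for a, b in zip(image, marked)))
    # Keyed detector: close to 0 for the original, close to 3 for the marked copy.
    print(round(detection_score(image, key=42), 2))
    print(round(detection_score(marked, key=42), 2))
    # A detector holding the wrong key sees only noise.
    print(round(detection_score(marked, key=7), 2))
```

The detector works because the key pattern is uncorrelated with ordinary image content, so only an image carrying that exact pattern produces a large score.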

SynthID launches exclusively with Google's Imagen

SynthID reports one of three confidence levels: the watermark was detected, it was not detected, or it was possibly detected. The last result comes with a warning that the image may have been generated by AI.
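How SynthID arrives at its three outcomes is not public. As a hypothetical sketch, a detector that outputs a confidence score between 0 and 1 could be mapped to the three results with two thresholds, leaving a middle band for the cautious "possibly detected" verdict. The score scale, the threshold values, and all names below are assumptions made for illustration.

```python
from enum import Enum


class Verdict(Enum):
    """The three outcomes described for SynthID; the wording is illustrative."""
    DETECTED = "watermark detected"
    POSSIBLY_DETECTED = "watermark possibly detected: image may be AI-generated"
    NOT_DETECTED = "watermark not detected"


def classify(score: float, low: float = 0.3, high: float = 0.7) -> Verdict:
    """Map a hypothetical detector confidence in [0, 1] to a verdict.

    Both thresholds are invented for this sketch; scores strictly between
    them fall into the cautious middle band instead of a hard yes or no.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if not 0.0 <= low < high <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= low < high <= 1")
    if score >= high:
        return Verdict.DETECTED
    if score <= low:
        return Verdict.NOT_DETECTED
    return Verdict.POSSIBLY_DETECTED


if __name__ == "__main__":
    for s in (0.1, 0.5, 0.9):
        print(s, classify(s).value)
```

Where the thresholds sit is a trade-off: a wider middle band means fewer confident but wrong verdicts, at the cost of more inconclusive results.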

Deepmind plans to roll out SynthID first for Google's Imagen image AI, which is currently available in beta. If this first deployment proves successful, SynthID could be made available for other systems "in the near future." In addition, SynthID is compatible with authentication methods that rely on image metadata.

"We hope our SynthID technology can work together with a broad range of solutions for creators and users across society," Deepmind writes.

A research team from the Computer Science and Artificial Intelligence Laboratory (CSAIL) at the Massachusetts Institute of Technology (MIT) recently unveiled "PhotoGuard," another pixel-level technique, which adds imperceptible perturbations to images to protect them against AI-based editing. However, the researchers acknowledged that transformations such as cropping, adding noise, or rotating the image could undermine this protection.

Detecting AI-generated images has become more pressing since the first attempts to use them for political manipulation. Recent models such as Midjourney can generate realistic photos of famous people; fake AI images of Donald Trump in handcuffs, for example, went viral on Twitter.


- Get the latest AI news from The Decoder.