Boston Dynamics integrates GPT-4 with Spot and discovers emergent capabilities

Key Points

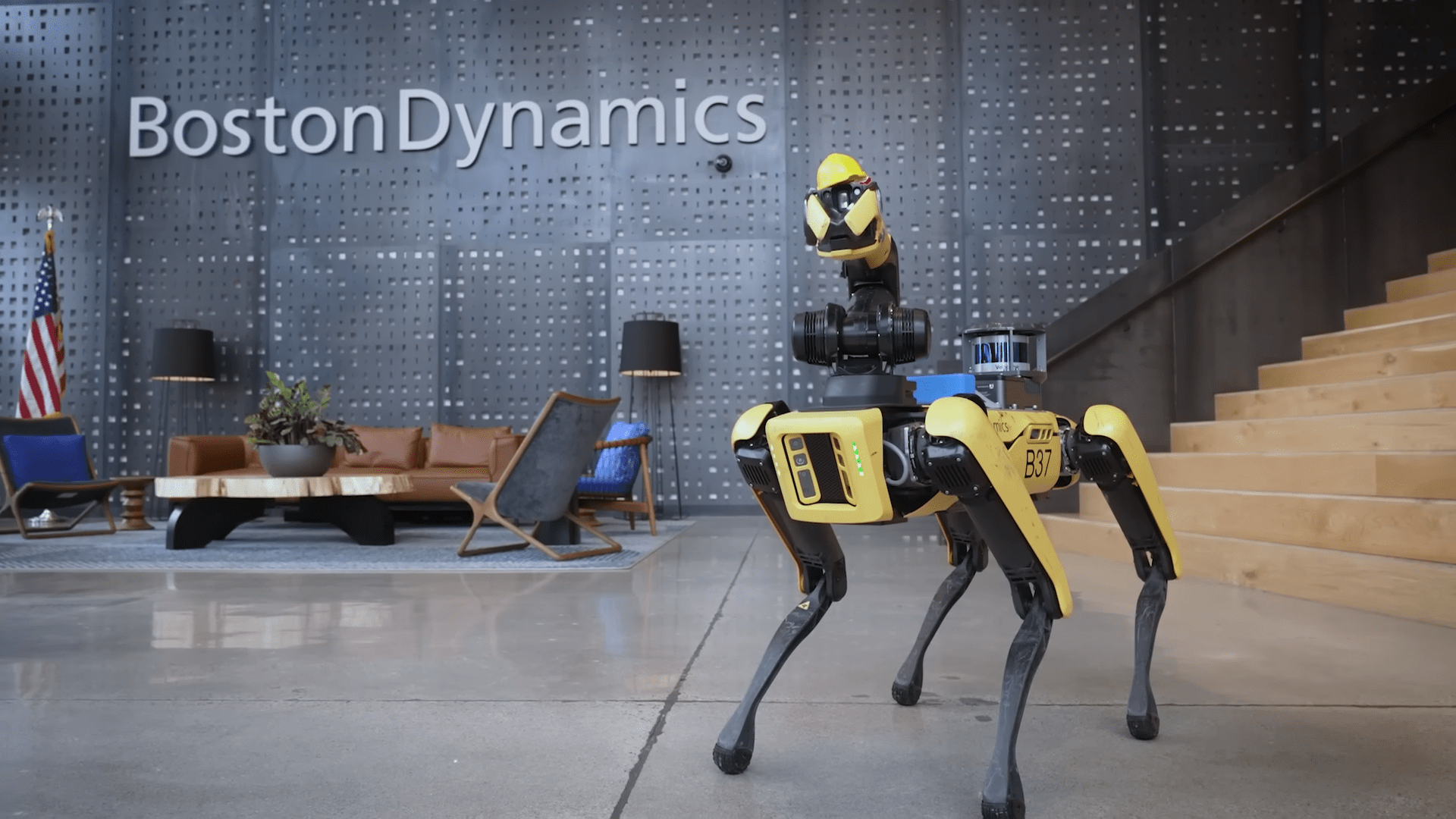

- Boston Dynamics is integrating OpenAI's GPT-4 into the Spot robot dog, adding a Bluetooth speaker, microphone, and camera to enable realistic conversations with humans.

- The talking Spot can answer questions and act as a tour guide, responding to the wake phrase "Hey, Spot!" and turning to face the person it is speaking with.

- Although Spot shows impressive emergent capabilities, the company also points out many shortcomings and does not mention any specific future plans for the improved robot dog.

Robotics company Boston Dynamics has integrated OpenAI's GPT-4 into its Spot robot dog and is showcasing the emergent capabilities that result.

To build the talking and interactive robot dog, Boston Dynamics added a Bluetooth speaker and microphone to Spot's body, in addition to a camera-equipped arm that serves as its neck and head. Spot's grasping hand mimics a talking mouth by opening and closing. This gives the robot a form of body language.

For language and image processing, the upgraded Spot uses OpenAI's latest GPT-4 model, along with Visual Question Answering (VQA) models and OpenAI's Whisper speech recognition software, to enable realistic conversations with humans.

For example, when addressed with the wake phrase "Hey, Spot!", the robot dog answers questions while performing its tour guide duties at Boston Dynamics' headquarters. Spot can also recognize the person next to it and turn to that person during a conversation.
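The flow described above (speech is transcribed, the wake phrase is detected, a scene description and the question go to the language model, and the answer is spoken) can be sketched roughly as follows. This is a minimal illustration and not Boston Dynamics' code: `extract_question`, `handle_utterance`, and the four callables passed in are hypothetical names that stand in for the speech recognition, VQA, language model, and speaker components, and no real Whisper, OpenAI, or Spot API is called.

```python
import re
from typing import Callable, Optional

# Hypothetical wake-phrase pattern: tolerates "Hey Spot" and "Hey, Spot!"
# because a speech-to-text system may or may not emit the punctuation.
WAKE_PATTERN = re.compile(r"\bhey,?\s+spot\b[\s,.!?]*", re.IGNORECASE)


def extract_question(transcript: str) -> Optional[str]:
    """Return the text following the wake phrase, or None if there is none."""
    match = WAKE_PATTERN.search(transcript)
    if match is None:
        return None
    question = transcript[match.end():].strip()
    return question or None


def handle_utterance(
    audio: bytes,
    transcribe: Callable[[bytes], str],   # stand-in for speech recognition
    describe_scene: Callable[[], str],    # stand-in for a VQA/captioning model
    ask_llm: Callable[[str], str],        # stand-in for the language model
    speak: Callable[[str], None],         # stand-in for the Bluetooth speaker
) -> Optional[str]:
    """Run one listen-think-speak cycle; return the answer if one was given."""
    question = extract_question(transcribe(audio))
    if question is None:
        return None  # not addressed to the robot, so stay silent
    # Assumed prompt layout, chosen only for this illustration.
    prompt = f"Scene: {describe_scene()}\nVisitor asks: {question}"
    answer = ask_llm(prompt)
    speak(answer)
    return answer
```

Passing the components in as plain functions keeps the sketch independent of any particular vendor API. In a real system each stage adds its own latency, which is one plausible reason a full question-to-answer cycle can take several seconds.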

Spot with ChatGPT demonstrates emergent capabilities

In experiments with the robot, Boston Dynamics found emergent capabilities: skills the robot exhibits, to the developers' surprise, even though they were never explicitly specified or programmed.

When asked about the founder of Boston Dynamics, Marc Raibert, Spot replied that it did not know the person, but that support could be requested from the IT help desk. This request for help was not an explicit instruction in the prompt.

When asked about its parents, Spot referred to its predecessors "Spot V1" and "BigDog" in the lab, describing them as its elders.

In addition, the ChatGPT robot is excellent at staying in a predefined character, for example by constantly making snarky remarks, Boston Dynamics writes.

Boston Dynamics based its prompting approach on Microsoft's design principles for using ChatGPT in robotics. A detailed description of the prompts can be found on the company blog.

Spot thinks up a yoga robot

But Boston Dynamics also points out the many shortcomings of its LLM-enabled Spot: The ChatGPT dog often says the wrong things, such as that the logistics robot "Stretch" is for yoga. It also takes about six seconds from question to answer, so it's not exactly a real-time conversation.

To be clear, these anecdotes don’t suggest the LLM is conscious or even intelligent in a human sense—they just show the power of statistical association between the concepts of “help desk” and “asking a question,” and “parents” with “old.” But the smoke and mirrors the LLM puts up to seem intelligent can be quite convincing.

Boston Dynamics

Boston Dynamics does not outline specific plans for its modified Spot. But robots in general are a promising way to embed language models in the real world, the company says. The possibilities are many, from tourist guides to customer service to personal companions.

Allowing a robot to learn new tasks simply by talking to it would also shorten the systems' learning curve. "These two technologies are a great match," Boston Dynamics writes.

Boston Dynamics' experiment with Spot is another example of combining the capabilities of large language models with the presence of robots in the real world, as Google is exploring in its RT-2 and SayCan projects. The world knowledge of large language models helps robots move intuitively in the real world and perform complex actions on demand, without the need for additional programming.
