Google DeepMind and YouTube unveil new Lyria AI model for music generation

Google DeepMind has introduced Lyria, an AI model for music generation designed to enhance the creative process for musicians and artists.

In collaboration with YouTube, Google DeepMind has launched two AI experiments for music: Dream Track, an experiment in YouTube Shorts, and Music AI Tools, a set of tools for artists, songwriters, and producers.

Lyria model aims to create longer, more cohesive pieces with vocals

The Lyria model is designed to create high-quality music with instrumental accompaniment and vocals. According to Google DeepMind, Lyria supports many genres, from heavy metal to techno to opera. The company says the model can maintain the complexity of rhythms, melodies, and vocals over phrases, verses, or longer passages.

Google DeepMind is testing Lyria with YouTube as part of the Dream Track project. The goal of the experiment is to explore new ways for artists to make music. Users enter a theme and style into the model's interface, select an artist from the carousel, and create a 30-second soundtrack for a YouTube Short.

The Lyria model generates the lyrics, background music, and AI-generated voice in the style of the selected participating artist. Featured artists include Alec Benjamin, Charlie Puth, Charli XCX, Demi Lovato, John Legend, Sia, T-Pain, Troye Sivan and Papoose.

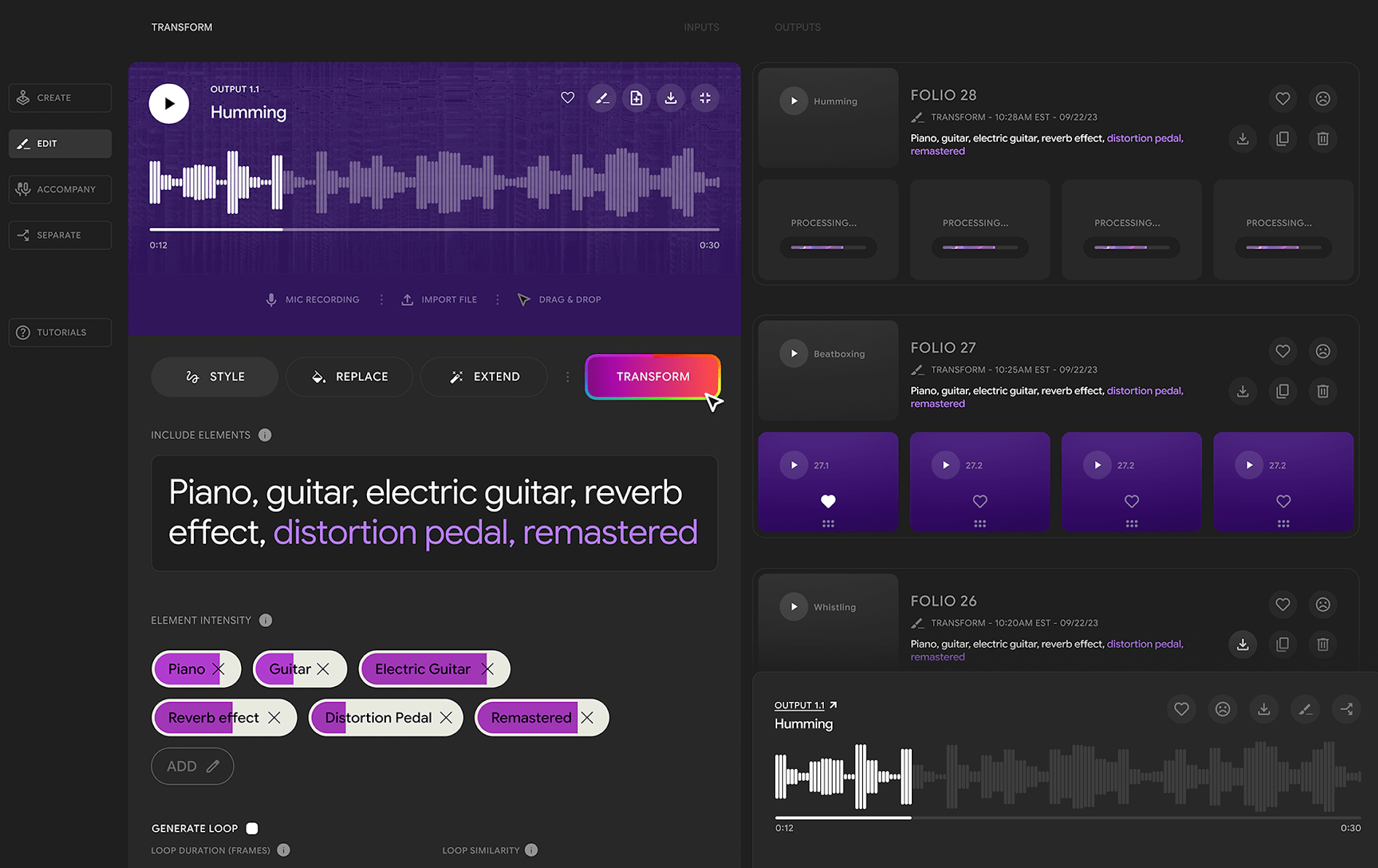

Google DeepMind researchers are also working with artists, songwriters, and producers from the YouTube Music AI Incubator to explore how generative AI can aid the creative process.

Together, they are developing a range of AI tools for music that can transform audio from one musical style or instrument to another, create instrumental and vocal accompaniments, and generate new music or instrumental passages from scratch. The tools are designed to make it easier for artists to turn their ideas into music, such as creating guitar riffs from hums.

DeepMind's SynthID labels AI audio

All content generated with Lyria is tagged with SynthID, the same technology toolkit used to identify AI images generated by Imagen on Google Cloud's Vertex AI. The watermark is visible to machines, but not to humans.

Similarly, SynthID watermarks AI-generated audio in a way that is inaudible to the human ear and does not affect the listening experience. It does this by transforming the audio wave into a two-dimensional visualization of how a sound's frequency spectrum changes over time.
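The two-dimensional view described above is commonly called a spectrogram. The following is a minimal sketch of how one can be computed, assuming a naive short-time Fourier transform in plain Python. It only illustrates the time-frequency representation and is not SynthID's actual watermarking method, which the article does not detail. All function names, the frame size, the hop size, and the test tones are illustrative assumptions.

```python
import cmath
import math


def hann_window(size):
    """Hann window coefficients used to taper each frame before the DFT."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / (size - 1)) for n in range(size)]


def dft_magnitudes(frame):
    """Magnitudes of the non-negative frequency bins of one frame (naive O(N^2) DFT)."""
    size = len(frame)
    mags = []
    for k in range(size // 2 + 1):
        acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / size) for n in range(size))
        mags.append(abs(acc))
    return mags


def spectrogram(samples, frame_size=64, hop=32):
    """Return a list of time frames; each frame is a list of frequency-bin magnitudes."""
    window = hann_window(frame_size)
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        frames.append(dft_magnitudes(frame))
    return frames


def tone(freq_hz, sample_rate, num_samples):
    """Generate a pure sine tone (hypothetical test signal)."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate) for n in range(num_samples)]


def peak_bin(frame):
    """Index of the strongest frequency bin in one spectrogram frame."""
    return max(range(len(frame)), key=lambda k: frame[k])


SAMPLE_RATE = 8000
FRAME_SIZE = 64

# A 1000 Hz tone followed by a 2000 Hz tone: the frequency content changes over time.
signal = tone(1000, SAMPLE_RATE, 2000) + tone(2000, SAMPLE_RATE, 2000)
spec = spectrogram(signal, frame_size=FRAME_SIZE, hop=32)

bin_width_hz = SAMPLE_RATE / FRAME_SIZE  # each bin spans 125 Hz
first_peak_hz = peak_bin(spec[0]) * bin_width_hz
last_peak_hz = peak_bin(spec[-1]) * bin_width_hz
print(first_peak_hz, last_peak_hz)  # prints 1000.0 2000.0
```

Each row of `spec` is a moment in time and each column a frequency band, so the dominant bin shifts from 1000 Hz to 2000 Hz as the signal changes. A real system would use an FFT library rather than this naive DFT.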

The watermark should remain detectable even if the audio material is modified, for example by adding noise, MP3 compression, or speeding up or slowing down the track, DeepMind writes.

SynthID is also meant to help determine whether only parts of a song were created by Lyria. According to Google DeepMind, this "novel method" is unlike anything that has been done before, especially in the field of audio.

A few days ago, YouTube published new rules for dealing with AI-generated audiovisual content on the platform. Among other things, the video platform is focusing on increased labeling requirements and wants AI content to be identified by both humans and AI.

For YouTube, the music generator could also be a strategic tool for gaining more control over AI-generated music on its platform: if users rely on YouTube's own tools, the company has precise control over the generated content, including labeling.
