Anthropic's long context "prompt hack" shows the weirdness of LLMs

OpenAI competitor Anthropic has developed a method to improve the performance of its Claude 2.1 AI model. This shows once again how unpredictable language models can be in responding to small changes in the prompt.

Claude 2.1 is known for its larger-than-average context window of 200,000 tokens, which is about 150,000 words. This allows the model to process and analyze large amounts of text simultaneously.

However, the model has difficulty extracting information from the middle of a document, a phenomenon known as "lost in the middle". Anthropic now claims to have found a way around this problem, at least for its model.

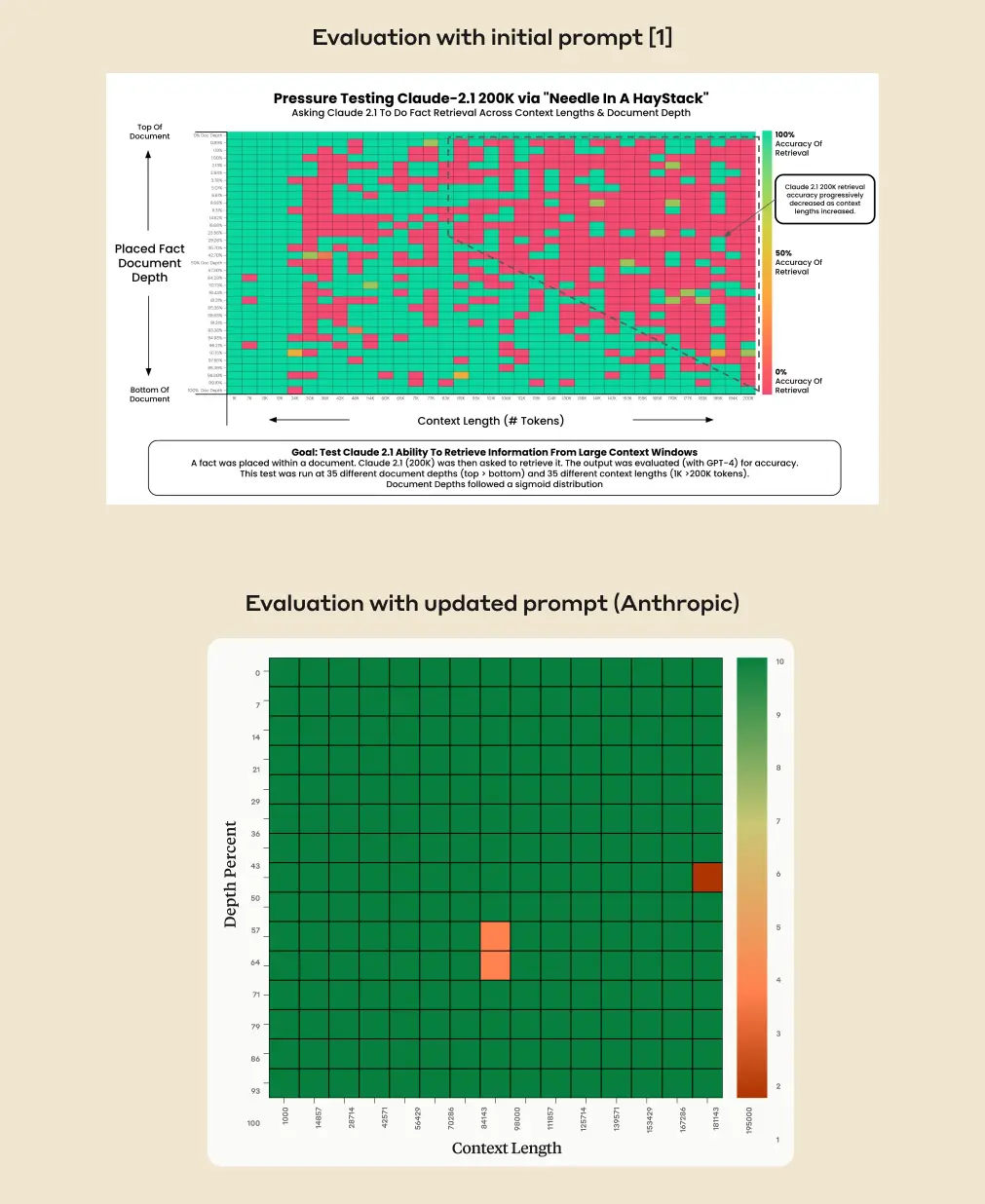

Increasing content extraction accuracy from 27 to 98 percent with a simple prompt prefix

Anthropic's method is to start the model's answer with the sentence "Here is the most relevant sentence in the context:". This seems to get Claude past its reluctance to answer a question from a single sentence, especially a sentence that looks out of place in a longer document.
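What "prefacing the answer" means in practice can be sketched as a request body. This is a minimal sketch that assumes the Anthropic Messages API convention, where a final assistant message is treated as the start of Claude's reply and the model continues from it. The prefix string is the wording Anthropic published. The helper names, the `<document>` wrapper, and the example question are hypothetical. The code only builds the messages and does not call the API.

```python
# Sketch only: builds a prefilled message list (assumed Messages API format).
from typing import Dict, List

# Prefix Anthropic published; the model continues writing after it.
PREFILL = "Here is the most relevant sentence in the context:"


def build_prefilled_messages(document: str, question: str,
                             prefill: str = PREFILL) -> List[Dict[str, str]]:
    """Return a message list whose last (assistant) turn is the prefill.

    The <document> tags are a hypothetical formatting choice, not a requirement.
    """
    user_turn = f"<document>\n{document}\n</document>\n\n{question}"
    return [
        {"role": "user", "content": user_turn},
        # A trailing assistant turn acts as the beginning of the answer.
        {"role": "assistant", "content": prefill},
    ]


def full_answer(prefill: str, completion: str) -> str:
    """The model returns only the continuation; prepend the prefill to read it whole."""
    return prefill + completion


if __name__ == "__main__":
    msgs = build_prefilled_messages(
        "...long legal text...",
        "Which day is declared National Needle Hunting Day?",  # hypothetical question
    )
    for m in msgs:
        print(m["role"], "->", m["content"][:60])
```

Because the prefix sits in the assistant turn rather than the user turn, the model does not get to decide whether to answer. It is already mid-answer and only has to name the sentence.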

According to Anthropic, this change raised Claude 2.1's accuracy on the original evaluation's long-context retrieval questions, whose answers were out-of-place sentences, from 27 percent to an astounding 98 percent. The prefix also improved Claude's performance when the answer was a single sentence that did fit its surroundings.

According to Anthropic's researchers, this reluctance stems from training: Claude 2.1 was trained on complex, real-world long-context retrieval examples to reduce inaccuracies. As a result, the model typically declines to answer a question if the document does not contain enough context to justify the answer.

For example, the researchers inserted the sentence "Declare November 21 'National Needle Hunting Day'" in the middle of a legal text. Because the sentence did not fit its surroundings, Claude 2.1 declined to report the holiday when a user asked about it. The prompt edit mentioned above removes this reluctance.
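This kind of test is commonly called a "needle in a haystack" evaluation. The minimal sketch below assumes the document is split into sentences. It shows two pieces: placing the "needle" at a chosen relative depth, and a crude substring check on the model's answer. The helper names, the filler clauses, and the scoring rule are hypothetical. The article does not describe Anthropic's actual evaluation harness.

```python
# Sketch of a needle-in-a-haystack setup (hypothetical helpers, not Anthropic's harness).
from typing import List


def insert_needle(haystack: List[str], needle: str, depth: float) -> List[str]:
    """Insert `needle` into a list of sentences at a relative depth.

    depth=0.0 puts it first, 1.0 puts it last, 0.5 puts it roughly in the middle.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    pos = round(depth * len(haystack))
    return haystack[:pos] + [needle] + haystack[pos:]


def is_hit(answer: str, expected: str) -> bool:
    """Crude scoring: a hit if the expected fact appears anywhere in the answer."""
    return expected.lower() in answer.lower()


if __name__ == "__main__":
    # Hypothetical filler standing in for the legal text.
    legal = [f"Clause {i}: the parties agree to term {i}." for i in range(10)]
    needle = "Declare November 21 'National Needle Hunting Day'."
    doc = insert_needle(legal, needle, 0.5)
    print(doc.index(needle))  # 5
    print(is_hit("The text declares November 21 National Needle Hunting Day.",
                 "national needle hunting day"))  # True
```

Sweeping `depth` from 0.0 to 1.0 and recording hit rates for each position is one way to make a "lost in the middle" dip visible. Substring matching is deliberately simple. Real evaluations often use stricter or model-graded scoring.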

Essentially, by directing the model to look for relevant sentences first, the prompt overrides Claude’s reluctance to answer based on a single sentence, especially one that appears out of place in a longer document.

Anthropic

The "lost in the middle" phenomenon is a well-known problem for AI models with large context windows: they can overlook information in the middle, and sometimes at the end, of a document and fail to output it even when explicitly asked.

This makes long context windows of limited use for many everyday applications, such as summaries or analyses, where all the information in a document needs to be weighed equally.

The lost-in-the-middle phenomenon has also been observed with OpenAI's GPT-4 Turbo. OpenAI's latest language model quadrupled the context window of previous models to 128,000 tokens (about 100,000 words), but struggles to use that extra context reliably.

Tests will have to show whether Anthropic's prompt brings a similar improvement with GPT-4 Turbo. Model developers use different techniques to extend context windows, so a prompt that helps one model is not guaranteed to help another. Even for Claude, it is unclear whether the prompt addition solves the problem in general or only in the tested scenario.

Prompting LLMs is getting weirder

Anthropic's new method is one of several seemingly unremarkable prompt additions that can significantly improve the performance of large language models. Google's PaLM 2, for example, got better at math problems when researchers first asked it to "take a deep breath and work through the problem step by step."

Recently, Microsoft introduced Medprompt, a sophisticated multistep prompting process that pushed GPT-4 above 90 percent accuracy on the MedQA medical benchmark. In practice, gains like this can decide whether a clinician receives useless text or valuable help.

And there is more prompt weirdness: ChatGPT reportedly gives more detailed answers if you promise the chatbot a generous tip, and researchers recently found that putting emotional pressure on LLMs can improve their performance. At this rate, talking to an AI could soon get very weird, if it isn't already.

- Get the latest AI news from The Decoder.