Adobe unveils Firefly Image 3 and a major upgrade to the AI capabilities of Photoshop

Adobe has unveiled the third version of its Firefly generative AI model in just one year and is releasing Firefly Image 3 in the browser and in the Photoshop beta.

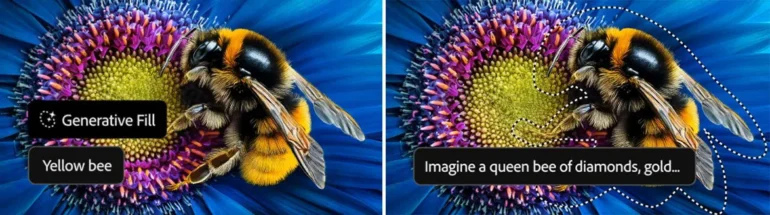

At Max London, its in-house creative conference, Adobe announced version 3 of its Firefly image model along with new tools in the Photoshop beta. The Generative Fill inpainting feature in particular benefits from the upgrade.

Generative Fill was previously based on the significantly inferior first-generation Firefly model. Photoshop now skips Firefly 2 entirely and moves straight to Firefly 3, which delivers much higher-quality results.

Firefly 3: Faster, more accurate, more controllable

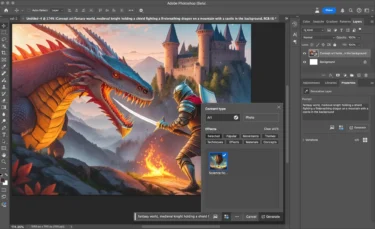

According to Adobe, Firefly Image 3 promises more creative control, new auto-stylization capabilities, Structure Reference and Style Reference, improved photographic quality and prompt accuracy, and a wider range of styles for illustrations and symbols.

Adobe also says the model generates images significantly faster, making the ideation and design process more productive and efficient.

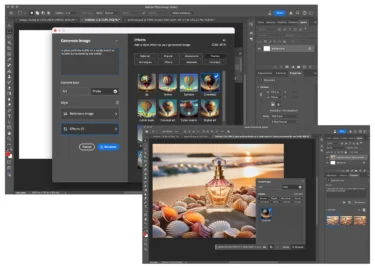

Firefly Image 3 also brings new features to the beta version of Photoshop. Images can now be generated directly in the desktop application without going through the website. In addition, Photoshop now uses the new model for text-to-image, Generative Fill, and Generative Expand, and these features can be steered more precisely with reference images.

With Firefly 3, you can also generate backgrounds or variations of image content with a single click. The update also includes features such as Enhance Detail, which automatically improves sharpness and clarity, as well as non-destructive adjustments using presets and brushes.

The new features are available today in a beta version of the Photoshop desktop application and will be included in a stable release later this year.

Third version in a year

Adobe introduced its first Firefly model only about a year ago, followed by a major update with Firefly 2 in October. The big difference from competitors such as Midjourney, SDXL, Ideogram, and DALL-E 3 is the origin of the training data: Adobe is one of the few vendors to claim that its model produces legally unproblematic images, because it is trained only on licensed material from Adobe's own stock database.

Recently, however, it emerged that Adobe Stock also contains images generated by Midjourney, which presumably ended up in Firefly's training material. Whether this also applies to Firefly 3 is unclear. In its press release, Adobe points to a multi-stage, continuous review and moderation process that blocks and removes content violating Adobe's policies.
