"AGI system could be built in as little as three years": Ex-OpenAI employee warns US Senate

Former employees of OpenAI and other tech giants criticized the companies' safety practices in a hearing before the US Senate and warned of the potential dangers of artificial intelligence.

William Saunders, a former OpenAI employee, made serious accusations against his former employer during the Senate hearing. Saunders claimed that OpenAI neglected safety in favor of rapidly developing artificial intelligence.

Saunders cited an example in which OpenAI's new AI system could assist experts in planning the reproduction of a known biological threat. "Without rigorous testing, developers might miss this kind of dangerous capability," he warned.

According to Saunders, an artificial general intelligence (AGI) system could be developed in as little as three years. He pointed to the performance of OpenAI's recently released o1 model in math and coding competitions as evidence: "OpenAI’s new system leaps from failing to qualify to winning a gold medal, doing better than me in an area relevant to my own job. There are still significant gaps to close but I believe it is plausible that an AGI system could be built in as little as three years."

Such a system could perform most economically valuable work better than humans, entailing considerable risks like autonomous cyberattacks or assisting in the development of biological weapons.

Saunders also criticized OpenAI's internal security measures. "When I was at OpenAI, there were long periods of time when there were vulnerabilities that would have allowed me or hundreds of other engineers at the company to bypass access controls and steal the company's most advanced AI systems, including GPT-4," he said.

To minimize risks, Saunders called for stronger regulation of the AI industry. "If any organization builds technology that imposes significant risks on everyone, the public and the scientific community must be involved in deciding how to avoid or minimize those risks," he emphasized.

Former OpenAI board member also voices criticism

Former OpenAI board member Helen Toner also criticized the fragile internal control mechanisms of companies. She reported cases where safety concerns were ignored to bring products to market more quickly. Toner said it would be impossible for companies to fully consider the interests of the general public if they alone are responsible for detailed decisions on safety measures.

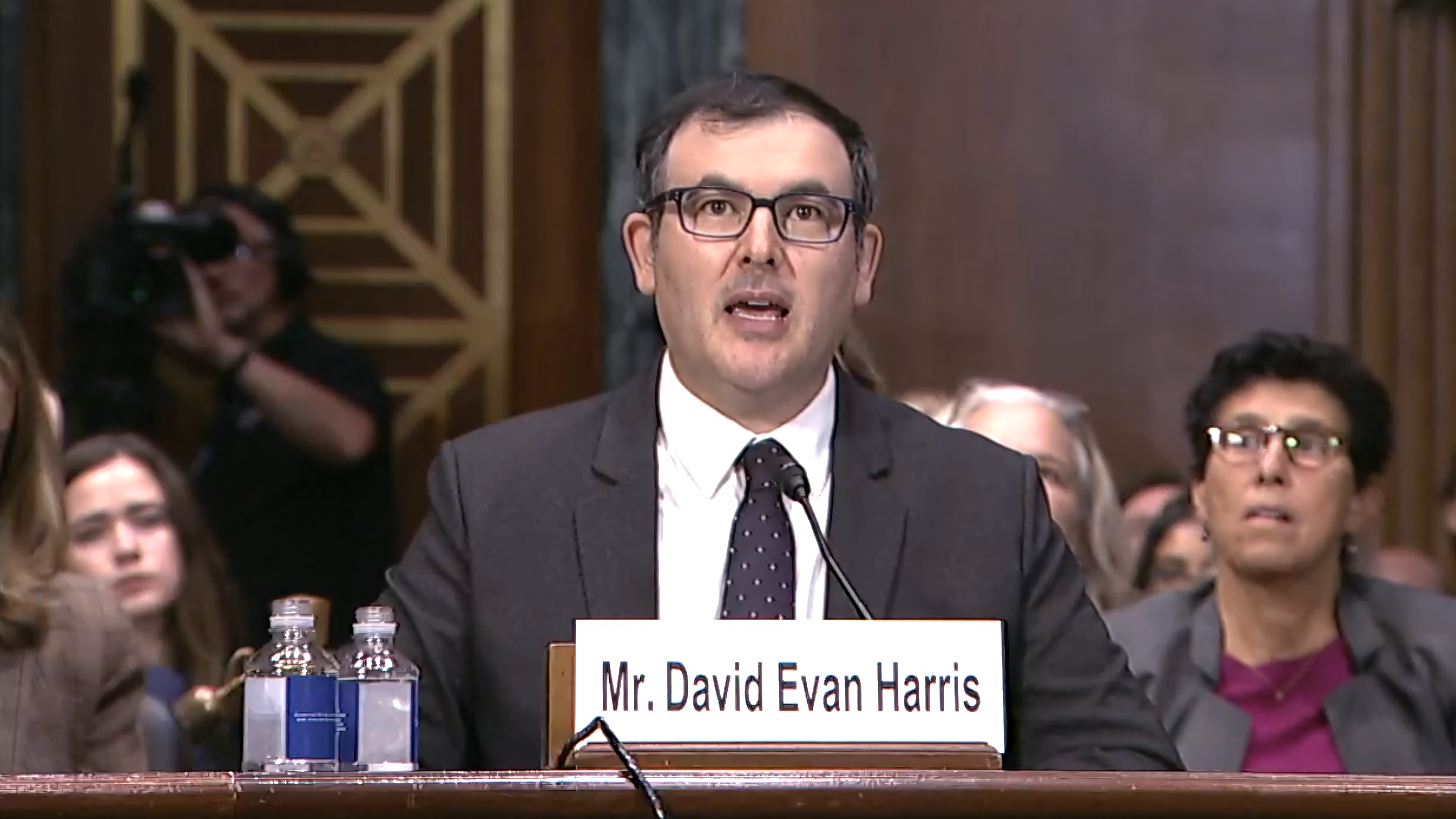

David Evan Harris, a former Meta employee, criticized the downsizing of safety teams across the industry. He warned against relying on voluntary self-regulation and called for binding legal regulations.

Margaret Mitchell, who previously worked on ethical AI issues at Google, criticized the lack of incentives for safety and ethics in AI companies. According to Mitchell, employees who focus on these issues are less likely to be promoted.

The experts unanimously called for stronger government regulation of the AI industry, including mandatory transparency requirements for high-risk AI systems, increased research investment in AI safety, building a robust ecosystem for independent audits, improved protection for whistleblowers, increased technical expertise in government agencies, and clarification of liability for AI-related damages.

The experts emphasized that appropriate regulation would not hinder innovation but promote it. Clear rules would strengthen consumer confidence and give companies planning certainty.

Senator Richard Blumenthal, Chairman of the subcommittee, announced that he would soon present a draft bill on AI regulation.

OpenAI works with the US government

The criticism from Saunders and others is part of a series of warnings from former OpenAI employees. It recently emerged that OpenAI had apparently shortened the safety tests for its AI model GPT-4 Omni. According to a report in the Washington Post, the tests were reportedly completed in just one week, which displeased some employees.

OpenAI rejected the accusations, stating that there had been "no shortcuts in our safety process" and that the company had carried out "extensive internal and external" testing to meet political obligations.

Since November last year, OpenAI has lost several employees working on AI safety, including former Chief Scientist Ilya Sutskever and Jan Leike, who jointly led the Superalignment Team.

This week, OpenAI introduced a new "Safety and Security Committee" under the leadership of Zico Kolter, which has far-reaching powers to monitor safety measures in the development and introduction of AI models. A few weeks earlier, OpenAI had reached an agreement with the US National Institute of Standards and Technology (NIST), giving the US AI Safety Institute access to new AI models before and after they are published to work together on researching, testing, and evaluating AI safety.
