AI-generated South Park episode may be a hoax

Update –

There are doubts about the authenticity of the project. Indications include the non-existent address "500 Baudrillard Drive, San Francisco, CA 94127" and the team listed by Fable Simulation, whose profile pictures are AI-generated.

In addition, the name of the alleged CEO, "Julian B. Adler," can be read as an anagram of "Jean Baudrillard" if the "B" is taken to stand for "Brad." Jean Baudrillard was a French sociologist, philosopher, cultural theorist, political commentator, and photographer known for his analyses of hyperreality and simulation.
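The anagram claim is easy to check mechanically by comparing letter counts while ignoring case, spaces, and punctuation. The following Python snippet is a minimal illustration; the helper names `letter_counts` and `is_anagram` are our own.

```python
from collections import Counter


def letter_counts(text: str) -> Counter:
    """Count letters, ignoring case, spaces, and punctuation."""
    return Counter(ch for ch in text.lower() if ch.isalpha())


def is_anagram(a: str, b: str) -> bool:
    """Two strings are anagrams if they use exactly the same letters."""
    return letter_counts(a) == letter_counts(b)


print(is_anagram("Julian B. Adler", "Jean Baudrillard"))    # False: 12 letters vs. 15
print(is_anagram("Julian Brad Adler", "Jean Baudrillard"))  # True
```

The abbreviated name alone does not work; only with "Brad" do the letters match exactly.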

The names of the other listed team members also appear to allude to well-known historical figures. Although Fable Studio lists a phone number on its website, the company cannot be reached there: calls go straight to voicemail.

Fable Studio does exist; it was launched in 2018 after Facebook shut down its VR film studio, Oculus Story Studio. The people mentioned in the article also exist, including co-founder Edward Saatchi, who recently gave an interview to VentureBeat about the South Park episode.

Julian B. Adler is an anagram of Jean Baudrillard (assuming the B stands for Brad), and the address of the simulation is 500 Baudrillard Avenue. Mystery solved. Too on the nose. I'm calling it, Matt and Trey are in on it. pic.twitter.com/jy1z1DUf2S

- Samantha B - Sincere Posts (0/100) (@KojimaErgoSum) July 19, 2023

It's Show-1-Time: AI creates a new South Park episode

The creative capabilities of Stable Diffusion or GPT-4 are well known. However, they lack the consistency needed to tell complex stories. SHOW-1 aims to change that.

The AI company Fable Studio has combined several models into a system called SHOW-1, which can generate multiple coherent episodes of a series.

They demonstrate their concept with a 22-minute episode of "South Park" that, of all things, is about the impact of AI on the entertainment industry.

To get started, the model only needs a title, synopsis, and main events

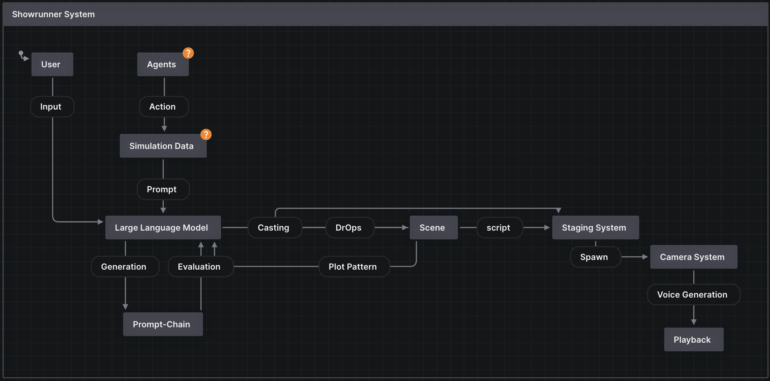

Creating a complete South Park episode is a complex process. The storytelling system is seeded with a high-level idea, usually a title, a synopsis, and the major events that should take place within a simulated week (about three hours of play). Generating a single scene can take a "significant amount of time": up to a minute.

- The system automatically generates up to 14 scenes based on simulation data.

- A showrunner system organizes the cast of characters and shapes the plot according to a predetermined pattern.

- Each scene is assigned a plot letter (A, B, or C), which is used to switch between different groups of characters.

- Each scene defines its location, characters, and dialogue.

- After the initial setup of the staging and the AI camera system, the scene plays out according to the plot pattern.

- The characters' voices are pre-trained, and voice clips are generated in real time for each new line.
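The article does not say how the showrunner's "predetermined pattern" is encoded. The following Python sketch assumes a simple repeating sequence of plot letters, each tied to one character group and location; the class `Scene`, the function `outline_episode`, and the pattern "ABAC" are hypothetical illustrations, not Fable Studio's code.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import cycle, islice

MAX_SCENES = 14  # "up to 14 scenes" per episode, per the article


@dataclass
class Scene:
    plot_letter: str                # "A", "B" or "C": which storyline / character group
    location: str
    characters: list[str]
    dialogue: list[str] = field(default_factory=list)  # to be filled in by the LLM


def outline_episode(pattern: str, groups: dict[str, list[str]],
                    locations: dict[str, str], n_scenes: int) -> list[Scene]:
    """Hypothetical showrunner step: lay out scenes by cycling through a plot pattern.

    Each plot letter maps to one character group and location, so switching
    letters switches between groups of characters. The count is capped at MAX_SCENES.
    """
    letters = islice(cycle(pattern), min(n_scenes, MAX_SCENES))
    return [Scene(letter, locations[letter], list(groups[letter])) for letter in letters]


groups = {"A": ["Stan", "Kyle", "Cartman"], "B": ["Randy"], "C": ["Butters"]}
locations = {"A": "South Park Elementary", "B": "Marsh home", "C": "Butters' house"}
episode = outline_episode("ABAC", groups, locations, n_scenes=20)
print(len(episode))                             # 14
print("".join(s.plot_letter for s in episode))  # ABACABACABACAB
```

Dialogue is left empty in this sketch; in SHOW-1, GPT-4 generates it for each scene.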

Fable Studio's work builds on another research paper, "Generative Agents," published in April 2023 by scientists from Stanford and Google. In it, the researchers simulated a virtual town and observed how much guidance the so-called agents, the town's inhabitants, needed to follow realistic daily routines and interact with one another.

GPT-4, custom diffusion models, and cloned voices

Among other things, SHOW-1 uses OpenAI's GPT-4 to influence the agents in the simulation and to generate the scenes for the South Park episodes.

Because transcripts of most South Park episodes are part of GPT-4's training data set, it already has a good understanding of the show's character personalities, speaking styles, and general humor, according to Fable Studio. This dramatic fingerprint is important for the consistency of a show, the team says.

Prompt chaining, in which the output of one prompt is fed into the next, provides another foundation. DeepMind's Dramatron, which writes scripts for film and television, also uses this technique.

In SHOW-1, GPT-4 also acts as a discriminator for its own answers, similar to the concept behind Auto-GPT. Still, generating a story is a "highly discontinuous task" that requires some "eureka" thinking, according to the team.
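The article does not publish SHOW-1's prompts. As a rough illustration of a prompt chain in which the model judges its own output, here is a minimal Python sketch; `llm` is a hypothetical stand-in for a GPT-4 call, and the prompt wording, the ACCEPT convention, and `max_rounds` are our own assumptions. A deterministic stub replaces the real model so the chain can run offline.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out (e.g. a GPT-4 wrapper)


def chained_scene(llm: LLM, synopsis: str, max_rounds: int = 3) -> str:
    """Generate a scene, then let the same model judge and revise it.

    Step 1 drafts, step 2 asks the model to accept or critique its own draft,
    step 3 revises using the critique; steps 2-3 repeat up to max_rounds times.
    """
    draft = llm(f"Write a South Park scene for this synopsis:\n{synopsis}")
    for _ in range(max_rounds):
        verdict = llm("Does this scene fit the synopsis and the characters? "
                      f"Reply ACCEPT or give a short critique.\n\n{draft}")
        if verdict.strip().upper().startswith("ACCEPT"):
            break  # the model accepts its own output
        draft = llm(f"Revise the scene to address this critique:\n{verdict}\n\nScene:\n{draft}")
    return draft


def fake_llm(prompt: str) -> str:
    """Deterministic stub so the chain can run without an API."""
    if prompt.startswith("Write"):
        return "draft v1"
    if prompt.startswith("Does"):
        return "ACCEPT" if "draft v2" in prompt else "Needs more Cartman."
    return "draft v2"


print(chained_scene(fake_llm, "The boys find out an AI is writing their show."))  # draft v2
```

Splitting generation, judgment, and revision into separate prompts is what provides the "checks and balances" the team describes; the cap on rounds keeps a never-satisfied judge from looping forever.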

For the visualization, the developers used a dataset of about 1,200 characters and 600 backgrounds. They used DreamBooth to train two specialized Stable Diffusion models: one to generate individual characters against a monochrome background, and one for the backgrounds themselves, so that they could be assembled in a modular fashion.
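The article only says that characters are generated against a monochrome background so they can be combined with separately generated backgrounds. One common way to do that is chroma keying: pixels close to the key colour are treated as transparent. The pure-Python sketch below assumes images are nested lists of RGB tuples; the key colour, tolerance, and placement are illustrative assumptions, not details of SHOW-1's pipeline.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), 0-255
Image = List[List[Pixel]]      # a list of rows of pixels


def is_key(px: Pixel, key: Pixel, tol: int) -> bool:
    """True if px is within tol of the key colour on every channel."""
    return all(abs(a - b) <= tol for a, b in zip(px, key))


def composite(background: Image, character: Image, key: Pixel,
              x: int, y: int, tol: int = 0) -> Image:
    """Return a copy of background with character pasted at column x, row y.

    Character pixels that match the key colour are treated as transparent;
    anything falling outside the background is clipped.
    """
    out = [row[:] for row in background]
    for dy, row in enumerate(character):
        for dx, px in enumerate(row):
            by, bx = y + dy, x + dx
            if 0 <= by < len(out) and 0 <= bx < len(out[by]) and not is_key(px, key, tol):
                out[by][bx] = px
    return out


GREEN, RED, SKY = (0, 255, 0), (255, 0, 0), (135, 206, 235)
background = [[SKY] * 4 for _ in range(3)]   # 4x3 "background" image
character = [[GREEN, RED],                   # 2x2 "character" on a green key
             [RED, GREEN]]
frame = composite(background, character, GREEN, x=1, y=1)
print(frame[1][1], frame[1][2])  # (135, 206, 235) (255, 0, 0)
```

A non-zero `tol` helps because generated "monochrome" backgrounds are rarely perfectly uniform.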

A special feature of this approach is that users can create their own character using the character model and have it participate in the simulation.

However, image quality is limited by the relatively low resolution of the diffusion models. For future work, the developers therefore suggest having GPT-4 generate SVG vector graphics, which can be scaled up without loss of quality.

Neither slot machine nor oatmeal nor blank page

Existing AI models struggle with a number of problems, including the following, which SHOW-1 does not solve completely but at least mitigates:

- Slot Machine Effect: According to this theory, using most AI models is similar to gambling, since their results are difficult or impossible to predict.

- Oatmeal Problem: Another criticism of existing models is that everything they produce looks the same. For a series, this is especially damaging, because viewers recognize the patterns and can no longer be surprised.

- Blank Page Problem: According to Fable Studio, even experienced writers sometimes feel overwhelmed when asked to come up with a title or story idea. In SHOW-1, the large language model never faces a blank page, because it can always draw on the context of the preceding simulation.

Who is responsible for what?

And who is ultimately the creator of the AI episode? The answer is more complex than it seems at first glance. The task is shared among the user of SHOW-1, GPT-4, and the simulation, and it is possible to set how much weight each of them carries.

While the simulation usually provides the foundational IP-based context, character histories, emotions, events, and localities that seed the initial creative process, the user introduces their intentionality, exerts behavioral control over the agents and provides the initial prompts that kick off the generative process.

The user also serves as the final discriminator, evaluating the generated story content at the end of the process. GPT-4, on the other hand, serves as the main generative engine, creating and extrapolating the scenes and dialogue based on the prompts it receives from both the user and the simulation. It's a symbiotic process where the strengths of each participant contribute to a coherent, engaging story.

Importantly, our multi-step approach in the form of a prompt-chain also provides checks and balances, mitigating the potential for unwanted randomness and allowing for more consistent alignment with the IP story world.

From the paper

Even before the release of SHOW-1, the entertainment industry was in an uproar. Writers in particular feel threatened by advances in AI. Fable Studio does not explicitly address these fears in its paper.

Instead, they argue that their approach offers an effective way to get around the limitations of current models for creative storytelling.

"As we continue to refine this approach, we are confident that we can further enhance the quality of the generated content, the user experience, and the creative potential of generative AI systems in storytelling," they conclude.
