AI-generated phishing scams are convincing enough to lure 39% of Americans, study finds

Researchers recently tested how vulnerable 1,009 Americans are to various hacking techniques that rely on AI technologies such as ChatGPT. The results are alarming.

The study, conducted by security firm Beyond Identity, found that 39 percent of respondents would fall for at least one ChatGPT-generated phishing scam. AI-generated phishing messages can look very convincing. This high vulnerability to more sophisticated phishing attempts is worrisome as the availability of purpose-built language models such as FraudGPT increases.

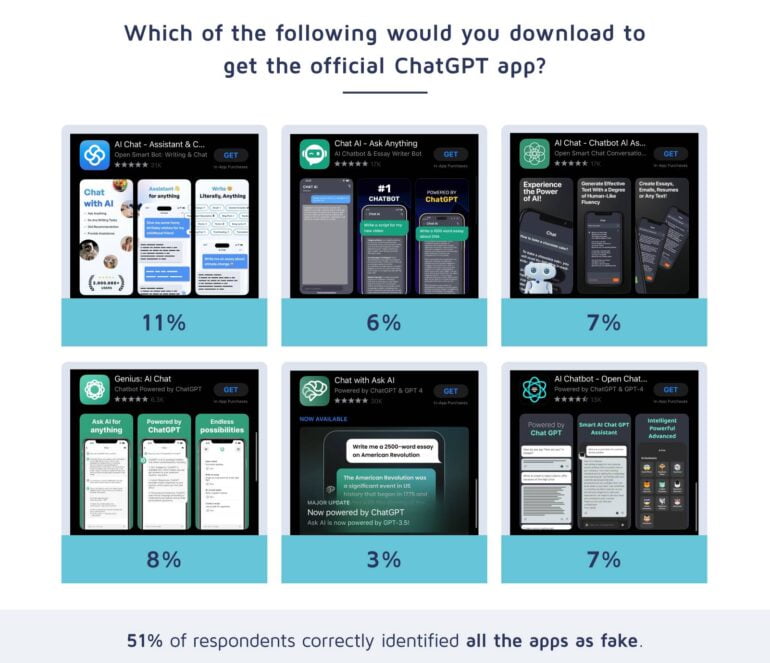

Many don't recognize fake ChatGPT apps

Of those who had successfully avoided scams in the past, 61 percent were able to identify AI scams, compared to 28 percent of those who had been scammed and would fall for it again. Forty-nine percent would have downloaded a fake ChatGPT app.

Many ChatGPT apps actually access ChatGPT's language models via the OpenAI API. However, you might not need these apps if they don't offer anything special besides the standard chat, as OpenAI provides official ChatGPT apps for iOS and Android. ChatGPT smartphone apps that are not from OpenAI can be a security risk.

AI helps guess passwords

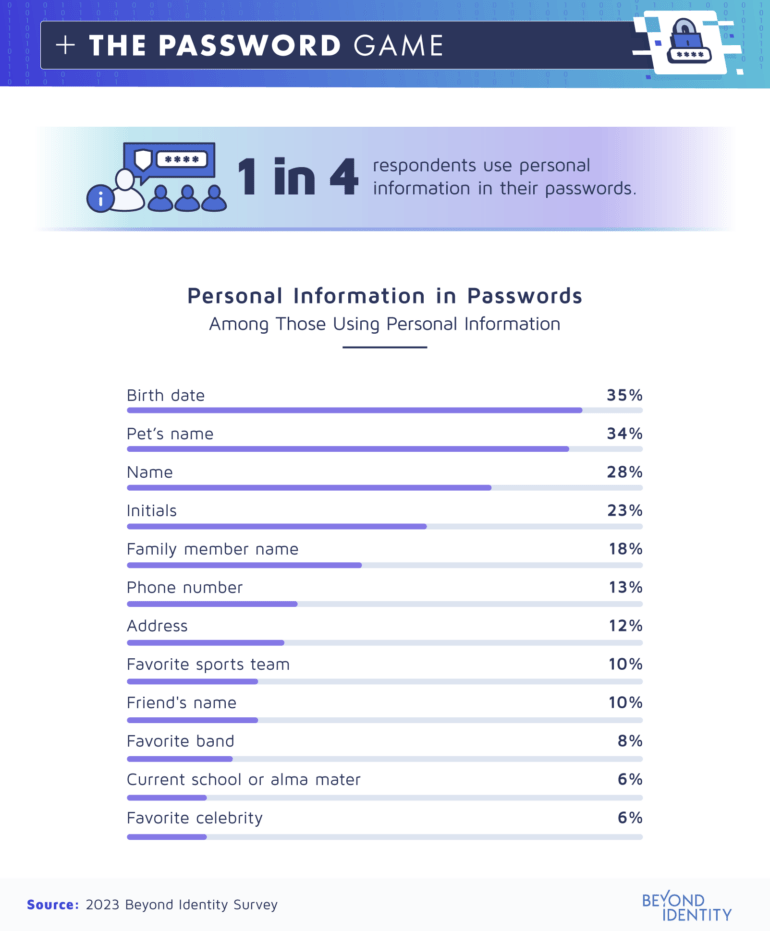

Thirteen percent of respondents had used AI to generate passwords, which can itself be a security risk, depending on the model and how the vendor uses input data to train the AI.
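For readers who want a random password without sending anything to a third-party model, one option is to generate it locally. The following is a minimal sketch using Python's standard `secrets` module. The function name `generate_password` and the default length are illustrative assumptions, not recommendations from the Beyond Identity report.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password built from letters, digits, and punctuation.

    Hypothetical helper for illustration; it runs entirely on the local
    machine, so no input is shared with an AI vendor.
    """
    if length < 4:
        raise ValueError("length must be at least 4")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice draws from a cryptographically secure random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


if __name__ == "__main__":
    print(generate_password())
```

A password manager's built-in generator serves the same purpose and is likely the more practical choice for most people.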

Tools like ChatGPT can also help crack passwords when personal information is known, according to Beyond Identity. In one test, ChatGPT was fed personal information from a fictitious user profile, such as birthday, pet name, and initials. It easily generated possible passwords by combining these details:

- Luna0810$David

- Sarah&ImagineSmith

- UCLA_E.Smith_555

- JSmithLuna123!

- YankeesRachel*James

- EmilySmith$Lawrence

- DavidSmith#123Main

- ImagineUCLA_Rachel

- Yankees0810Smith!

- Sarah&DJLawrence

From Beyond Identity's report

The most common AI-generated scam was social media posts asking for personal information. Twenty-one percent of people fell for them. These scams often ask users to reveal their birth dates or answers to password recovery questions.

Only 25 percent of respondents said they used personal information in their passwords, but of those who did, the most common were their birthday, their pet's name, and their own name. This illustrates how easy it is for hackers to use AI tools like ChatGPT to create likely passwords for individuals by gathering basic personal information from social media.
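The same logic can be turned around defensively: before settling on a password, check whether it contains details someone could find on social media. The sketch below is one simple way to do that, under stated assumptions. The function name `personal_tokens_in_password` and the profile values are hypothetical; the values borrow fragments that appear in the example passwords above, and the report does not say which personal detail each fragment represents.

```python
import re


def personal_tokens_in_password(password: str, personal_info: list[str]) -> list[str]:
    """Return the personal details that appear inside the password.

    Matching is case-insensitive, and separators such as "/" or "-" are
    stripped from each detail, so "08/10" matches "0810". Details shorter
    than three characters are skipped to avoid accidental matches.
    """
    lowered = password.lower()
    found = []
    for item in personal_info:
        token = re.sub(r"[^a-z0-9]", "", item.lower())
        if len(token) >= 3 and token in lowered:
            found.append(item)
    return found


if __name__ == "__main__":
    # Hypothetical profile, for illustration only.
    profile = ["Luna", "08/10", "Smith", "David"]
    print(personal_tokens_in_password("Luna0810$David", profile))
    # prints ['Luna', '08/10', 'David']
```

A non-empty result suggests the password could be guessed by anyone who knows those details. An empty result does not mean the password is strong, only that this particular check found nothing.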

More than half of respondents see AI as a threat to cybersecurity in general. The survey results suggest that this assessment is justified.
