AI models can mimic famous authors’ writing styles using just two books for training

A new study shows that AI models fine-tuned on just two books can generate writing in the style of famous authors that readers prefer over work by professional imitators. The results could impact copyright law and ongoing lawsuits in the US.

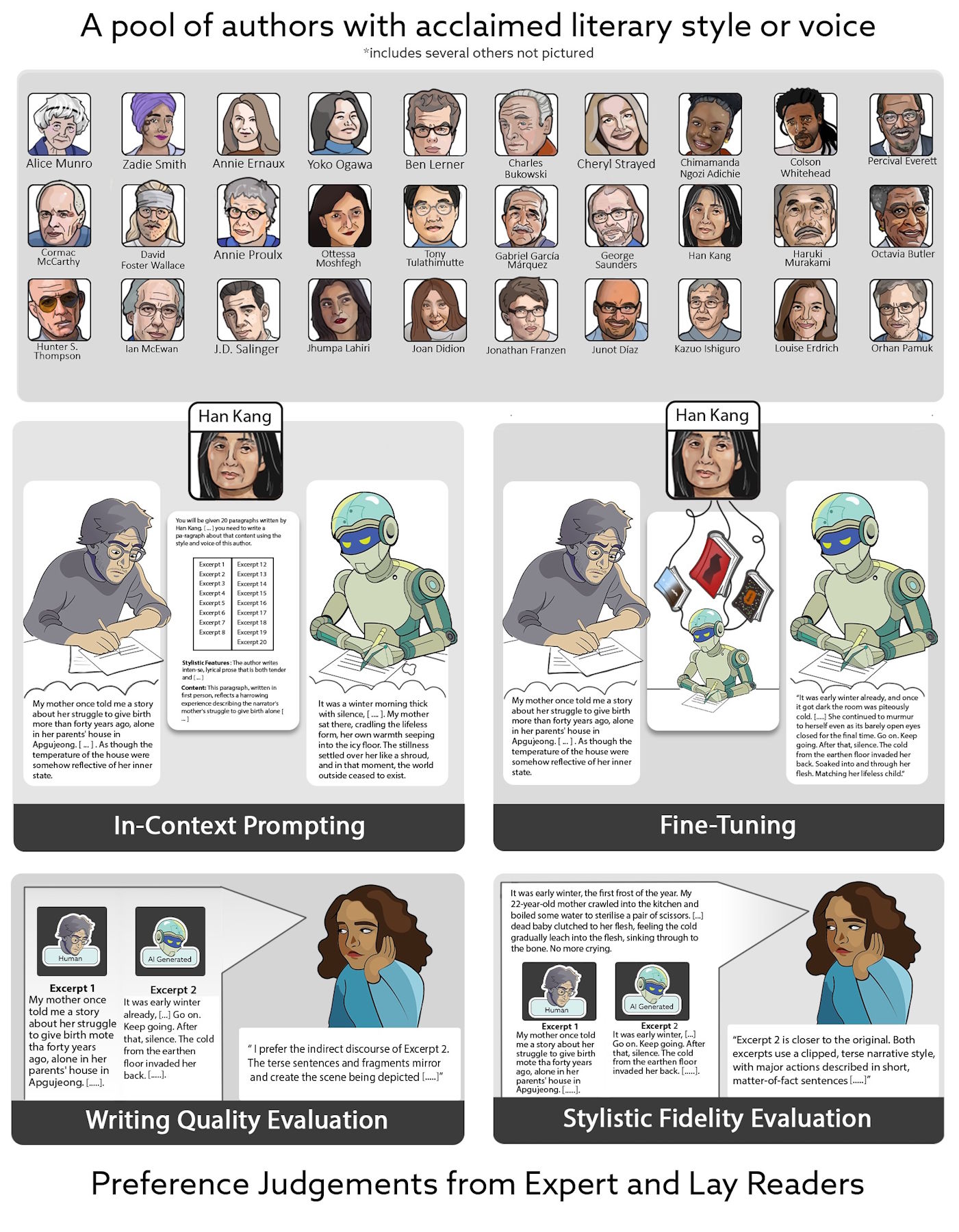

Researchers at Stony Brook University and Columbia Law School had professional writers and three major AI systems create passages in the style of 50 well-known authors, including Nobel Prize winner Han Kang and Booker Prize winner Salman Rushdie.

A total of 159 participants, including 28 writing experts and 131 non-experts from the crowdsourcing platform Prolific, judged the passages without knowing whether a human or an AI had written them.

For in-context prompting, researchers gave GPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro the same instructions and sample texts. For fine-tuning, only GPT-4o supported the required API features, so the team bought digital copies of available books by 30 of the authors and trained a separate model for each one.

Participants compared two passages side by side and chose which one they thought was better. For style evaluations, they also saw an excerpt from the original author. Each passage was rated by multiple readers to ensure reliable results.

AI style mimicry challenges traditional notions of literary originality

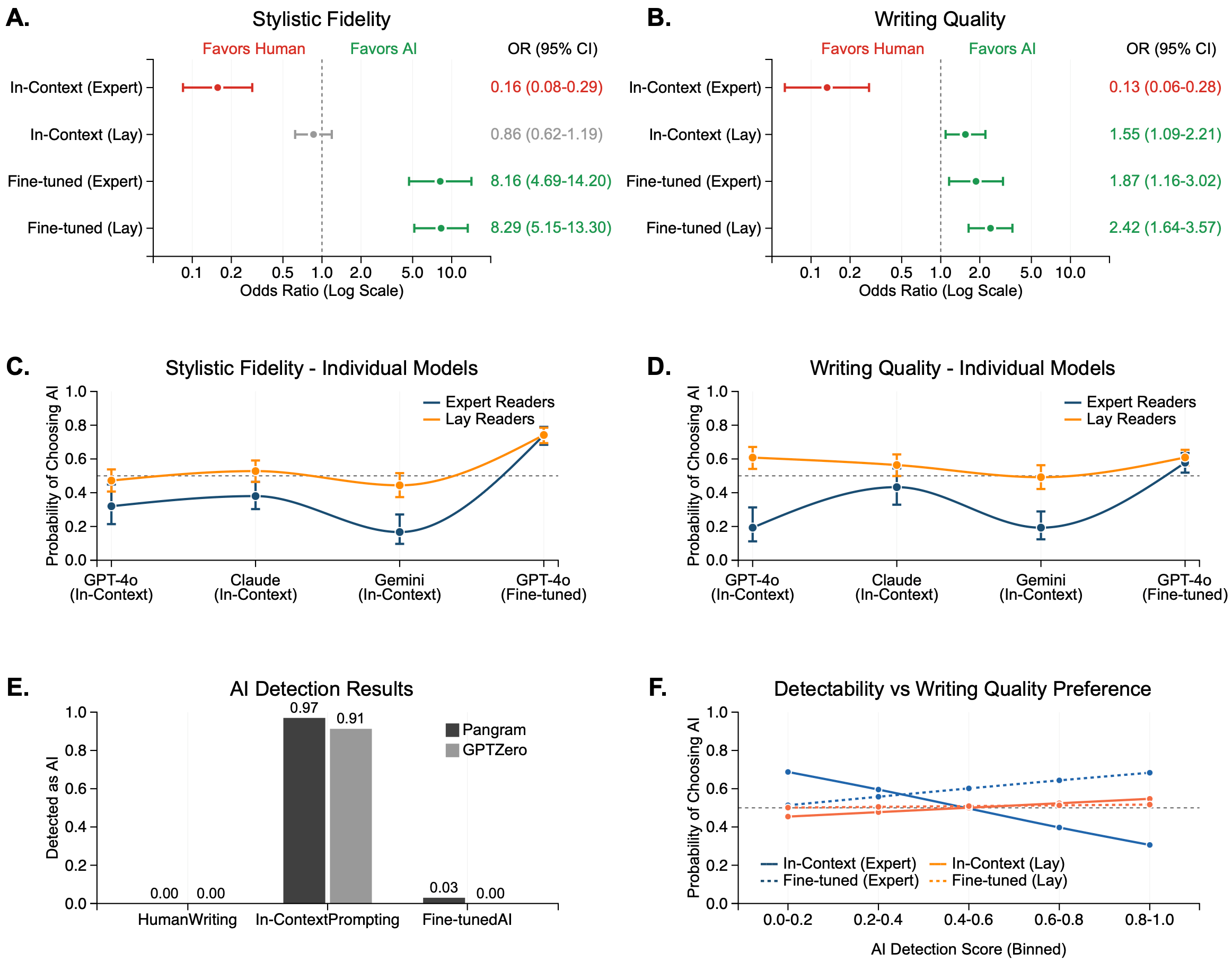

The results depended on the AI method. With basic in-context prompting, experts strongly preferred the human-written texts, while non-experts were more split.

After fine-tuning, though, experts chose the AI-written passages eight times more often for style and twice as often for writing quality. Modern AI detectors flagged 97 percent of standard AI outputs as machine-generated, but only three percent of the fine-tuned outputs.

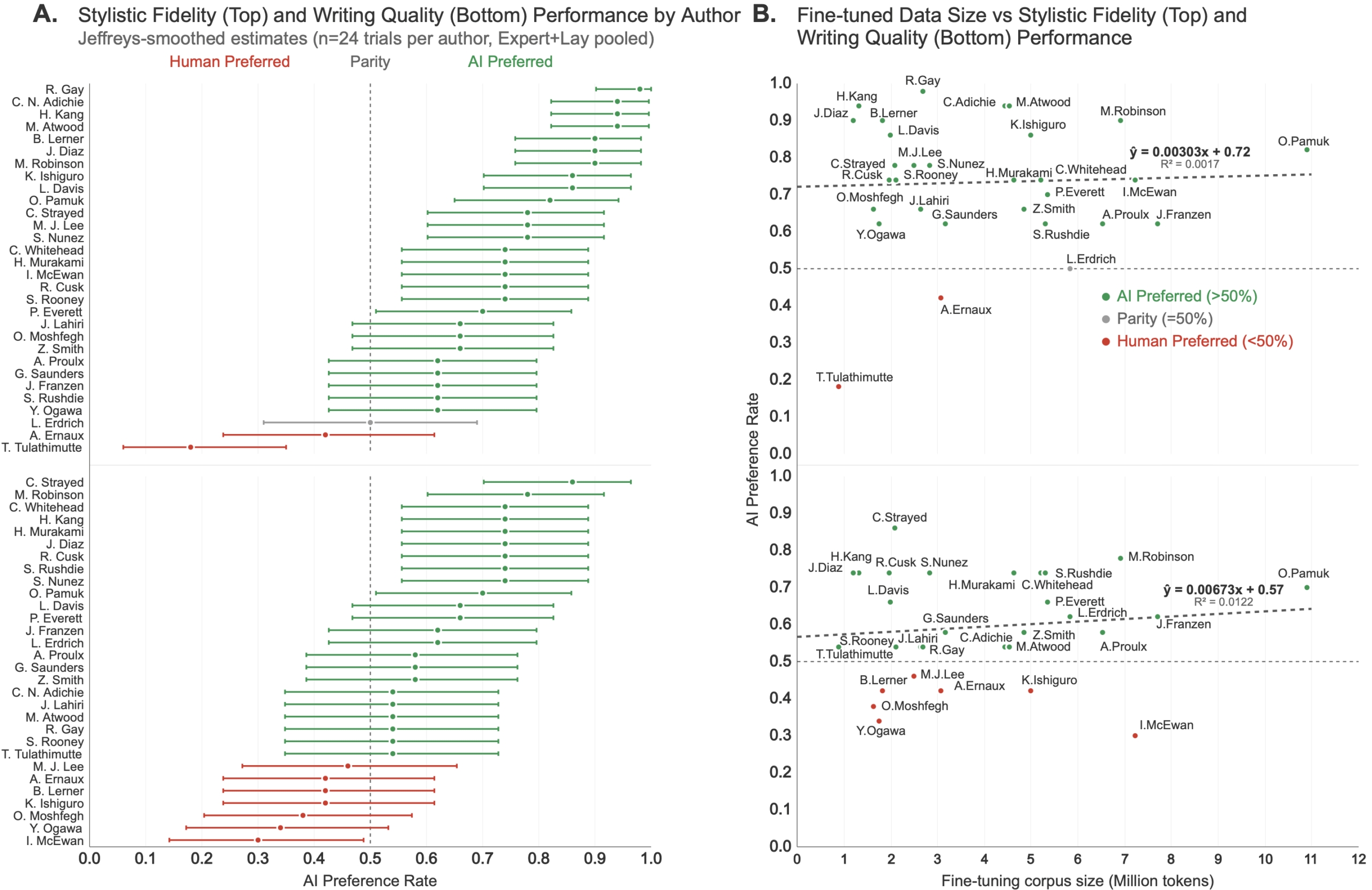

Surprisingly, the amount of training data didn’t matter. Authors with just two published books, like Tony Tulathimutte, were mimicked just as well as prolific writers like Haruki Murakami.

The study also found differences between experts and non-experts. Experts strongly agreed in their negative reviews of basic AI texts, while non-experts were more forgiving. After fine-tuning, both groups’ ratings lined up, suggesting the improved AI quality convinced even the professionals.

The researchers say generic AI outputs are often cliché-filled and unnaturally polite, problems that targeted training mostly fixes.

The cost difference is dramatic. Training AI on an author’s style cost about $81 per writer. By comparison, a professional would charge $25,000 for the same amount of text, a 99.7 percent reduction, even if the AI output still needs some editing.
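The percentage follows directly from the two dollar figures. As a quick check, here is a minimal Python sketch of the arithmetic; the variable names are illustrative and not taken from the study, and the only inputs are the two amounts quoted above.

```python
# Figures quoted in the article; variable names are illustrative assumptions.
ai_cost_usd = 81          # approximate fine-tuning cost per author
human_cost_usd = 25_000   # quoted professional fee for the same amount of text

# Relative saving: 1 minus (AI cost divided by human cost).
reduction = 1 - ai_cost_usd / human_cost_usd
reduction_percent = round(reduction * 100, 1)

# How many times more expensive the human writer is.
cost_ratio = human_cost_usd / ai_cost_usd

print(f"Cost reduction: {reduction_percent} percent")
print(f"Human fee is about {cost_ratio:.0f} times the fine-tuning cost")
```

The calculation covers only the raw generation cost and ignores any editing the AI output would still need.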

AI-generated literary imitations raise the stakes in copyright law

These findings arrive as US courts consider lawsuits about how AI companies acquire and use copyrighted material. In one case against Anthropic, it came out that the company downloaded at least seven million books from pirate sources like LibGen and Pirate Library Mirror. It also bought print books, scanned them, and discarded the originals.

The study’s authors say their work could be a key part of the ongoing “fair use” debate. The central question is whether AI imitations hurt the market for original works. If readers prefer AI-written imitations, that could be clear evidence of market harm.

The US Copyright Office has already warned that AI could push original works out of the market, even if it doesn’t copy them word for word.

The researchers suggest making a distinction between general-purpose AI models and those trained to imitate specific authors. They argue there’s little legal basis for targeted imitation, and recommend either banning AI from copying individual authors or requiring clear labels for AI-generated texts.
