AI music editor developed by Sony and researchers can modify songs with text prompts

Researchers at Queen Mary University of London, Sony AI, and MBZUAI's Music X Lab have developed an AI system called Instruct-MusicGen that can modify existing music based on text prompts.

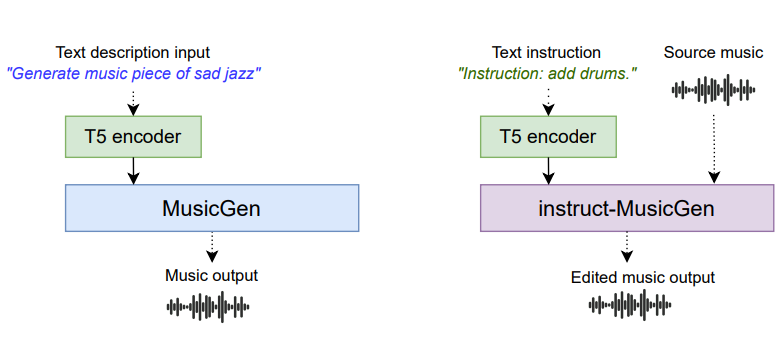

Instruct-MusicGen builds on Meta's open-source AI model MusicGen, which the team has enhanced for text-to-music editing tasks. The researchers modified the original MusicGen architecture by adding text and audio fusion modules, allowing the model to process editing prompts and audio input simultaneously.
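The article does not describe how the fusion modules work internally. The sketch below only illustrates the general idea, using one common way to attach extra conditioning inputs to a pretrained decoder. Each input stream (text tokens and audio tokens) is read with cross-attention and added to the decoder's hidden state through a scalar gate. The gates start at zero, so the model initially behaves exactly like the original. The functions `attend` and `fused_step` and the gating scheme are hypothetical illustrations, not Instruct-MusicGen's actual code.

```python
import math
from typing import List

Vector = List[float]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Single-head scaled dot-product attention for one query (keys must be non-empty)."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    # Subtract the max score before exponentiating for numerical stability.
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    # Weighted sum of the value vectors, one output dimension at a time.
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


def fused_step(
    hidden: Vector,
    text_tokens: List[Vector],
    audio_tokens: List[Vector],
    text_gate: float,
    audio_gate: float,
) -> Vector:
    """Hypothetical fusion: update one decoder hidden state with two conditioning streams.

    Each stream is read via cross-attention and added back through a scalar gate.
    With both gates at 0.0 the hidden state passes through unchanged, mimicking
    a pretrained model before any fine-tuning.
    """
    text_ctx = attend(hidden, text_tokens, text_tokens)
    audio_ctx = attend(hidden, audio_tokens, audio_tokens)
    return [h + text_gate * t + audio_gate * a for h, t, a in zip(hidden, text_ctx, audio_ctx)]
```

With both gates at zero, `fused_step` returns `hidden` unchanged. Raising `audio_gate` mixes in information from the input audio. Zero-initialized gates are a common choice when adding modules to a pretrained network because they do not disturb its existing behavior at the start of training.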

The added audio and text fusion modules enable precise editing tasks like adding, removing, or separating music tracks, known as stems. Stems are grouped tracks, often organized by instrument type, that play a key role in music production.
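Because stems are separate tracks, editing examples can in principle be built by summing different subsets of them. The following sketch assumes a toy representation where each stem is a list of samples of equal length. It uses two hypothetical helpers, `mix` and `make_example`, to produce an (input mix, instruction, target mix) triplet for adding, removing, or extracting one stem. It is an illustration of the concept, not code from the project.

```python
from typing import Dict, List, Tuple

# Toy representation: each stem maps an instrument name to a list of samples.
Stems = Dict[str, List[float]]


def mix(stems: Stems) -> List[float]:
    """Sum all stems sample by sample into a single mixture."""
    if not stems:
        return []
    length = len(next(iter(stems.values())))
    if any(len(s) != length for s in stems.values()):
        raise ValueError("all stems must have the same length")
    return [sum(samples) for samples in zip(*stems.values())]


def make_example(stems: Stems, operation: str, target: str) -> Tuple[List[float], str, List[float]]:
    """Build a hypothetical (input mix, instruction text, target mix) triplet."""
    if target not in stems:
        raise KeyError(f"no stem named {target!r}")
    others = {name: s for name, s in stems.items() if name != target}
    if operation == "add":
        # Input lacks the target stem; the desired output contains it.
        return mix(others), f"add {target}", mix(stems)
    if operation == "remove":
        # Input contains the target stem; the desired output lacks it.
        return mix(stems), f"remove {target}", mix(others)
    if operation == "extract":
        # Input is the full mix; the desired output is the isolated stem.
        return mix(stems), f"{target} only", list(stems[target])
    raise ValueError(f"unknown operation: {operation}")
```

For example, `make_example({"bass": [1, 2], "drums": [5, -5], "piano": [0, 3]}, "add", "bass")` yields the drums-plus-piano mix as input, the text `"add bass"`, and the full mix as the target.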

[Audio examples: an input track without bass and the result of the instruction "add bass"; an input track and its "drums only" version.]

The researchers note that Instruct-MusicGen improves the efficiency of text-to-music processing and expands the use of language models for music in production environments.

The new model requires only 8% more parameters and 5,000 additional training steps, less than 1% of MusicGen's total training time, to achieve good results. The developers provide audio examples, code, and the model weights on the project page.
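The "less than 1%" figure implies a lower bound on the length of MusicGen's original training run. This assumes training time scales roughly with the number of steps, which is a simplification because batch size and hardware can differ. The parameter overhead can be written the same way, with $N_{\text{MusicGen}}$ denoting MusicGen's training steps and $P$ its parameter count:

$$
\frac{5000}{N_{\text{MusicGen}}} < 0.01 \;\Rightarrow\; N_{\text{MusicGen}} > 500{,}000 \text{ steps}, \qquad P_{\text{Instruct-MusicGen}} \approx 1.08\,P
$$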

Sony should be in the clear regarding licensing. Meta states that MusicGen was trained only on licensed music, and for its own instruction tuning the research team used Slakh2100, a dataset of music synthesized from MIDI files. This matters because Sony is a key player in a copyright infringement lawsuit against current music generators, which can produce entirely original compositions from text prompts.
