Anthropic releases Claude 4 with new safety measures targeting CBRN misuse

Anthropic has released its next generation of AI models, Claude Opus 4 and Claude Sonnet 4, and is introducing new safety measures designed to prevent their use in developing chemical, biological, radiological, or nuclear (CBRN) weapons.

Claude Opus 4 and Claude Sonnet 4 both aim to expand what’s possible for software developers and agent-based applications. According to Anthropic, the two models handle longer reasoning chains better, can use tools such as web search in parallel, and retain information from documents they are given access to through an expanded memory system.

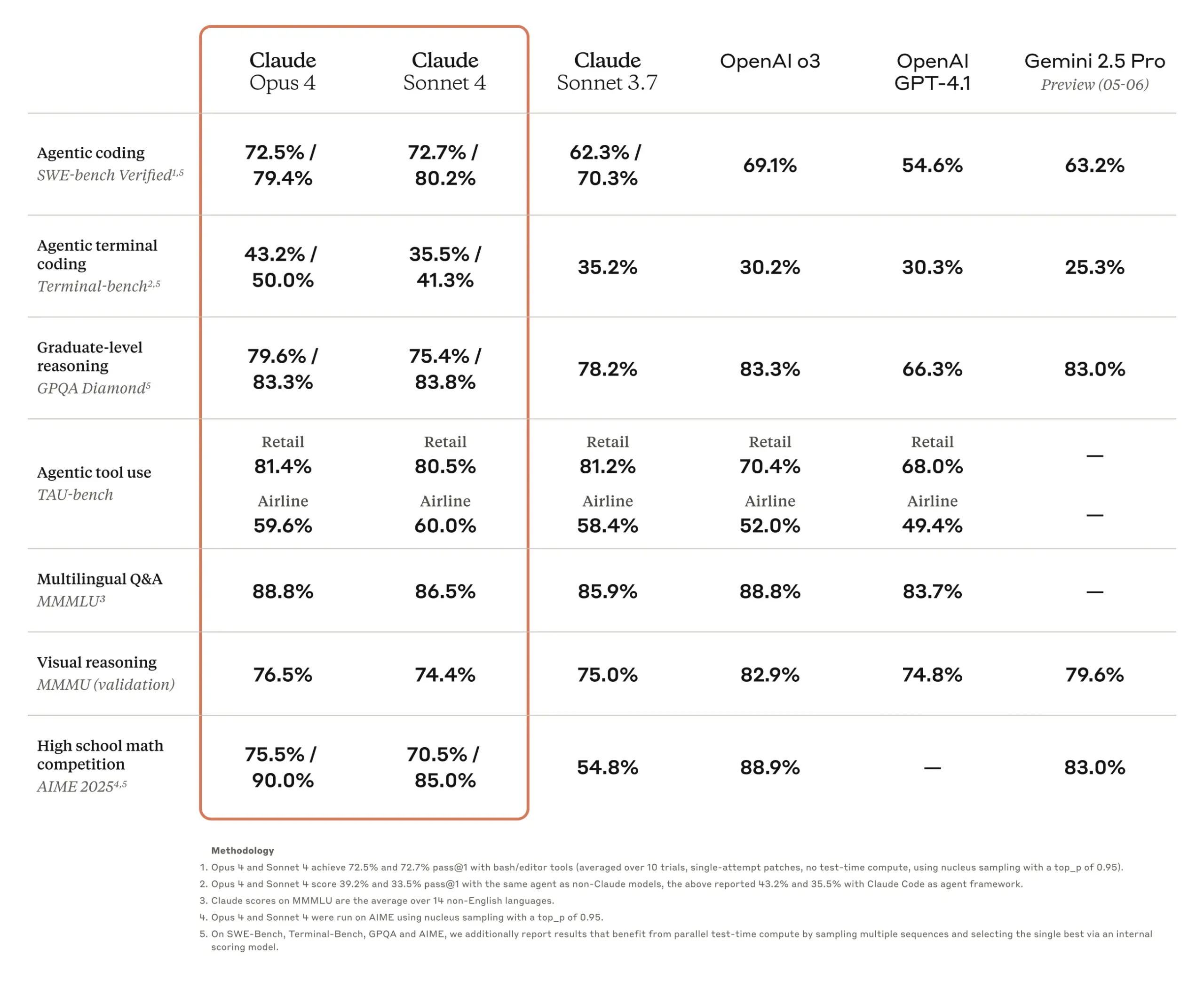

Opus 4 is Anthropic’s most advanced model to date, with a particular focus on coding and complex, multi-hour workflows. It posts leading results on benchmarks like SWE-bench (72.5%) and Terminal-bench (43.2%), and also scores highly on MMMLU (87.4%) and GPQA Diamond (74.9%). The "Extended Thinking" feature gives it a performance boost on certain tasks, and Anthropic says Opus 4 is designed for sustained performance over many steps. When accessing local files, Opus 4 can create "Memory Files" that help it keep track of information, which is useful in scenarios like navigating complex game worlds such as Pokémon.

Sonnet 4: The all-purpose model for developers

Claude Sonnet 4 is an upgraded version of Sonnet 3.7 built for everyday but demanding developer tasks. It scores 72.7% on SWE-bench and, according to partners like GitHub and Sourcegraph, shows significant improvements in problem-solving, code navigation, and handling complex instructions. GitHub plans to use Sonnet 4 as the foundation for its new Copilot agent.

Anthropic says both new models are 65% less likely than Sonnet 3.7 to take shortcuts or exploit loopholes in agent-based tasks. For especially long reasoning chains, they use "Thinking Summaries" to condense steps, a feature Anthropic says is needed only about 5% of the time.

New tools for building agents

Alongside the new models, Anthropic is rolling out updated API features for building more efficient agents. There’s a new code execution tool that runs Python in an isolated environment, so data analysis and visualization can happen in a single step. Agents can now also connect to external systems like Asana or Zapier via the new MCP Connector, removing the need for custom integrations.

The new Files API lets users upload a document once and reference it across multiple sessions. Combined with the code execution tool, Claude can analyze these files and return results such as diagrams directly. For long-running sessions, prompt caching is now available for up to an hour, a twelvefold increase over the previous five-minute cache.
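To see why a longer cache lifetime matters for long-running sessions, here is a minimal sketch of a time-to-live (TTL) cache. It is a toy model, not Anthropic's API: the `TTLCache` class, the `count_hits` helper, the 20-minute request interval, and the assumption that each use refreshes the entry are all illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class TTLCache:
    """Toy cache: an entry stays reusable until `ttl_seconds` after its last use."""

    ttl_seconds: float
    _last_used: dict = field(default_factory=dict)

    def lookup(self, key: str, now: float) -> bool:
        """Return True on a cache hit; refresh (or create) the entry either way."""
        last = self._last_used.get(key)
        hit = last is not None and (now - last) <= self.ttl_seconds
        self._last_used[key] = now
        return hit


def count_hits(ttl_seconds: float, request_times: list) -> int:
    """Count how many requests find the shared prompt prefix still cached."""
    cache = TTLCache(ttl_seconds)
    return sum(cache.lookup("shared-prompt-prefix", t) for t in request_times)


# Hypothetical agent session: one request every 20 minutes for two hours.
REQUEST_TIMES = [minute * 60.0 for minute in range(0, 121, 20)]

FIVE_MINUTES = 5 * 60
ONE_HOUR = 60 * 60

hits_short = count_hits(FIVE_MINUTES, REQUEST_TIMES)
hits_long = count_hits(ONE_HOUR, REQUEST_TIMES)
print(f"5-minute TTL: {hits_short} hits, 1-hour TTL: {hits_long} hits")
```

In this toy session, every gap between requests is longer than five minutes, so the short TTL never produces a hit, while the one-hour TTL serves every request after the first from cache.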

Claude Code now generally available

Claude Code, previously in testing, is now available to everyone. Developers can integrate it directly into VS Code or JetBrains IDEs, where Claude suggests changes inline. The Claude Code agent can also be used with GitHub pull requests to implement feedback, fix CI errors, or adjust code. An SDK is available for teams building their own agents.

ASL-3 safety standard activated for the first time

With Claude Opus 4, Anthropic is activating its AI Safety Level 3 (ASL-3) standard from the Responsible Scaling Policy for the first time. This is a precautionary move, as the model shows advanced knowledge of CBRN risks. Claude Sonnet 4, by contrast, does not fall under ASL-3.

ASL-3 has two main components: preventing misuse and protecting model weights from theft. To block misuse, Anthropic uses "Constitutional Classifiers" that monitor inputs and outputs in real time to filter out dangerous CBRN-related information. A bug bounty program and training on synthetic jailbreak data are also used to strengthen security.
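The general pattern of screening both the prompt and the response can be sketched as follows. This is not Anthropic's implementation: real Constitutional Classifiers are trained models, whereas `toy_classifier`, `BLOCKED_TERMS`, `REFUSAL`, and `guarded_generate` are hypothetical names used only to show where the two checks sit. The sketch also checks the complete response rather than a live stream.

```python
from typing import Callable

# Hypothetical stand-in for a trained classifier: returns True if text is flagged.
Classifier = Callable[[str], bool]

# Placeholder terms for illustration only; a real system would not use a keyword list.
BLOCKED_TERMS = ("placeholder-hazard-a", "placeholder-hazard-b")

REFUSAL = "[blocked by safety filter]"


def toy_classifier(text: str) -> bool:
    """Flag text containing any placeholder term (case-insensitive)."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def guarded_generate(
    prompt: str,
    model: Callable[[str], str],
    input_classifier: Classifier,
    output_classifier: Classifier,
) -> str:
    """Run the model only if the prompt passes, and return its output only if that passes too."""
    if input_classifier(prompt):
        return REFUSAL
    response = model(prompt)
    if output_classifier(response):
        return REFUSAL
    return response
```

Checking the output separately from the input means a prompt that slips past the first filter can still be stopped if the model's answer is flagged.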

For protecting model weights, Anthropic has implemented over 100 security controls, such as two-person authorization, change management protocols, and egress bandwidth monitoring to prevent large data exports from going undetected.
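As an illustration of the egress-monitoring idea, the sketch below flags a transfer when the total bytes sent within a sliding time window exceed a limit. The article does not describe how Anthropic's control works, so the `EgressMonitor` class, its window size, and its threshold are assumptions made for this example.

```python
from collections import deque


class EgressMonitor:
    """Flags when bytes sent within a sliding time window exceed a limit."""

    def __init__(self, window_seconds: float, max_bytes: int) -> None:
        self.window_seconds = window_seconds
        self.max_bytes = max_bytes
        self._events = deque()  # (timestamp, num_bytes) pairs, oldest first
        self._total = 0

    def record(self, timestamp: float, num_bytes: int) -> bool:
        """Record a transfer; return True if the window total now exceeds the limit."""
        self._events.append((timestamp, num_bytes))
        self._total += num_bytes
        # Drop transfers that have aged out of the window.
        cutoff = timestamp - self.window_seconds
        while self._events and self._events[0][0] <= cutoff:
            _, old_bytes = self._events.popleft()
            self._total -= old_bytes
        return self._total > self.max_bytes


# Hypothetical limit: at most 1,000 bytes leaving the network per 60 seconds.
monitor = EgressMonitor(window_seconds=60, max_bytes=1000)
alerts = [
    monitor.record(0, 400),
    monitor.record(30, 500),
    monitor.record(50, 200),   # pushes the window total over the limit
    monitor.record(200, 100),  # earlier transfers have aged out by now
]
print(alerts)
```

Tracking a running total over a time window, rather than inspecting each transfer alone, is what lets a monitor like this catch a large export split into many small pieces.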

Anthropic notes that it remains unclear whether Claude Opus 4 strictly requires ASL-3, but activating the safeguards now allows the company to test and refine them in practice.

