Anthropic shares blueprint for Claude Research agent using multiple AI agents in parallel

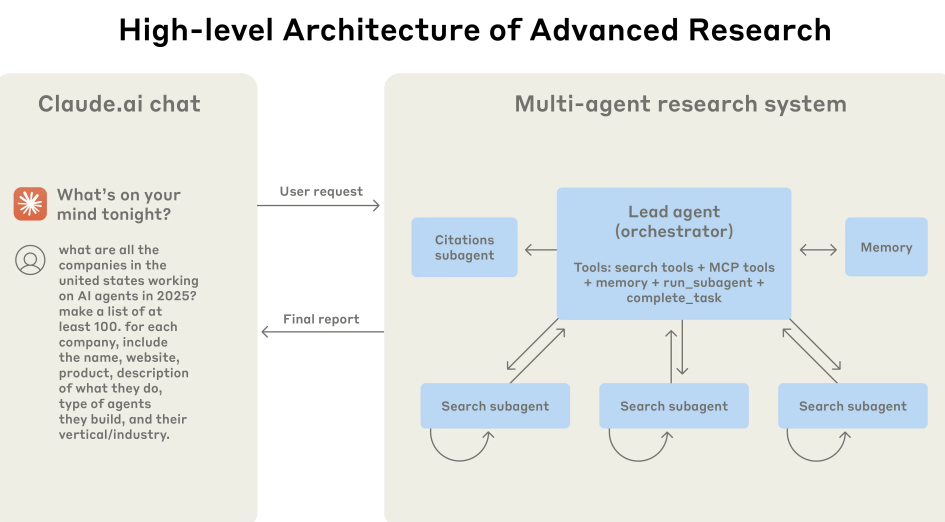

Anthropic has published the technical details behind its new Claude Research agent, which uses a multi-agent approach to speed up and improve complex searches.

The system relies on a lead agent that analyzes user prompts, devises a strategy, and then launches several specialized sub-agents to search for information in parallel. This setup allows the agent to process more complex queries faster and more thoroughly than a single agent could.

The architecture uses Claude Opus 4 as the lead agent that coordinates the work and Claude Sonnet 4 for the sub-agents. On Anthropic's internal research evaluation, this multi-agent system outperformed a single-agent Claude Opus 4 setup by 90.2 percent.
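The orchestrator-worker pattern described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Anthropic's code: `plan`, `run_subagent`, and `synthesize` are stand-ins for model calls (in Anthropic's setup, Claude Opus 4 would plan and synthesize, and Claude Sonnet 4 would run the sub-agent searches), and `asyncio.sleep` only simulates the time a search takes.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Finding:
    subtask: str
    summary: str


def plan(query: str, max_subtasks: int = 3) -> list[str]:
    """Lead agent (stub): split the query into independent subtasks.

    A real lead agent would ask a model for a research plan; here we just
    derive placeholder subtasks from the query string.
    """
    return [f"{query} (aspect {i + 1})" for i in range(max_subtasks)]


async def run_subagent(subtask: str) -> Finding:
    """Sub-agent (stub): pretend to search and return condensed notes."""
    await asyncio.sleep(0.01)  # stands in for model calls and tool use
    return Finding(subtask=subtask, summary=f"notes on {subtask}")


def synthesize(query: str, findings: list[Finding]) -> str:
    """Lead agent (stub): merge the sub-agents' findings into one answer."""
    lines = [f"Answer to: {query}"]
    lines += [f"- {f.summary}" for f in findings]
    return "\n".join(lines)


async def research(query: str) -> str:
    subtasks = plan(query)
    # Run all sub-agents concurrently; gather returns once every one has finished,
    # with results in the same order as `subtasks`.
    findings = await asyncio.gather(*(run_subagent(s) for s in subtasks))
    return synthesize(query, list(findings))


if __name__ == "__main__":
    print(asyncio.run(research("history of solid-state batteries")))
```

Because `asyncio.gather` returns only after every sub-agent has finished, the lead agent in this sketch waits for the whole batch before synthesizing, which matches the synchronous design Anthropic currently uses.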

Anthropic evaluates outputs using an LLM as a judge, which scores results for factual accuracy, source quality, and tool use. The company says this method is more reliable and efficient than traditional evaluation techniques. In effect, LLMs become meta-tools for evaluating and managing other AI systems.
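A judge of this kind can be as simple as one prompt that asks a model to grade a report against a rubric, plus a parser for its reply. The sketch below assumes a single judge call that returns a 0.0 to 1.0 score per criterion; the rubric questions, the reply format, the 0.7 pass threshold, and the `call_judge` callable (which would wrap a real model API) are all hypothetical.

```python
from typing import Callable

# Criteria named in the article; the wording of each question is an assumption.
RUBRIC = {
    "factual_accuracy": "Do the claims in the report match the cited sources?",
    "source_quality": "Does the report rely on high-quality, primary sources?",
    "tool_use": "Did the agent use appropriate tools a reasonable number of times?",
}


def build_prompt(report: str) -> str:
    """Build one judge prompt asking for a 0.0-1.0 score per criterion."""
    questions = "\n".join(f"{name}: {q}" for name, q in RUBRIC.items())
    return (
        "Grade the research report below. For each criterion, reply with a line\n"
        "'<criterion>: <score between 0.0 and 1.0>'.\n\n"
        f"{questions}\n\nREPORT:\n{report}"
    )


def parse_scores(reply: str) -> dict[str, float]:
    """Extract 'criterion: score' lines from the judge's reply, clamped to [0, 1]."""
    scores: dict[str, float] = {}
    for line in reply.splitlines():
        name, sep, value = line.partition(":")
        name = name.strip()
        if sep and name in RUBRIC:
            scores[name] = min(1.0, max(0.0, float(value)))
    missing = set(RUBRIC) - set(scores)
    if missing:
        raise ValueError(f"judge reply is missing scores for {sorted(missing)}")
    return scores


def evaluate(
    report: str, call_judge: Callable[[str], str], threshold: float = 0.7
) -> tuple[dict[str, float], bool]:
    """Score a report with an LLM judge; pass only if every criterion meets the threshold."""
    scores = parse_scores(call_judge(build_prompt(report)))
    passed = all(s >= threshold for s in scores.values())
    return scores, passed
```

With a fake judge such as `lambda prompt: "factual_accuracy: 0.9\nsource_quality: 0.8\ntool_use: 0.6"`, `evaluate` returns those three scores and `False`, since tool use falls below the assumed threshold. Keeping the judge behind a plain callable makes the rubric logic testable without any model access.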

One key performance factor is token consumption: multi-agent runs use about 15 times more tokens than standard chat interactions. In Anthropic's testing, token usage alone explained about 80 percent of the variance in performance, with the number of tool calls and the choice of model as the two other main factors.
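A statement like "token usage explained 80 percent of the variance" is conventionally read as a coefficient of determination, R², and we assume that reading here. For a single predictor such as token count, R² equals the squared correlation between the predictor and the score, as in this minimal sketch; combining all three factors would call for a multiple regression instead.

```python
def r_squared(x: list[float], y: list[float]) -> float:
    """Share of the variance in y explained by a least-squares line fitted on x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("series must not be constant")
    # For simple linear regression, R^2 is the squared Pearson correlation.
    return (sxy * sxy) / (sxx * syy)


print(r_squared([1, 2, 3], [1, 3, 2]))  # 0.25
```

An R² of 0.8 would mean that a straight-line fit on tokens alone accounts for four fifths of the spread in scores, leaving the remaining fifth to other factors and noise.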

For example, upgrading to Claude Sonnet 4 produced a larger performance gain than doubling the token budget on Claude Sonnet 3.7. This suggests that while token usage matters, model choice and tool use are also critical for performance.

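Anthropic also reports that Claude 4 models can act as their own prompt engineers. Given a prompt and a failure mode, they can diagnose why an agent is failing, and Anthropic built a tool-testing agent that, when handed a flawed tool, tries to use it and then rewrites the tool's description so later agents avoid the same failures.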
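The test-then-rewrite cycle can be pictured as a small loop. This is a hypothetical sketch, not Anthropic's tool-testing agent: `attempt` and `rewrite` would be model calls in practice and are passed in as plain functions here, and the date-format example below is invented purely for illustration.

```python
from typing import Callable


def refine_description(
    description: str,
    cases: list[str],
    attempt: Callable[[str, str], bool],
    rewrite: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> tuple[str, int]:
    """Test a tool description against sample tasks and rewrite it while tasks fail.

    attempt(description, case) -> True if an agent completed `case` using the tool.
    rewrite(description, failed_cases) -> a revised description.
    Returns the final description and the number of rewrites. Stops after
    `max_rounds` rewrites even if some cases still fail.
    """
    rewrites = 0
    while rewrites < max_rounds:
        failures = [case for case in cases if not attempt(description, case)]
        if not failures:
            break
        description = rewrite(description, failures)
        rewrites += 1
    return description, rewrites


# Toy stand-ins: tasks mentioning dates fail until the description documents the format.
def toy_attempt(desc: str, case: str) -> bool:
    return "date" not in case or "ISO 8601" in desc


def toy_rewrite(desc: str, failed: list[str]) -> str:
    return desc + " Dates must be ISO 8601 strings (YYYY-MM-DD)."


final, n = refine_description(
    "Searches a news archive.", ["find news on X", "find news by date"], toy_attempt, toy_rewrite
)
print(n, final)  # 1 Searches a news archive. Dates must be ISO 8601 strings (YYYY-MM-DD).
```

The `max_rounds` cap is a design choice of this sketch: without it, a rewrite step that never fixes a failure would loop forever.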

Asynchronous execution: the next step for agentic AI

Anthropic sees its current multi-agent architecture as best suited for queries that demand large amounts of information and can benefit from parallel processing.

Today, the lead agent runs its sub-agents synchronously: it waits for all of them to finish before moving on. Looking ahead, Anthropic aims to move toward asynchronous execution, in which agents work concurrently and can spawn new sub-agents as needed, without that wait.

This shift would add flexibility and speed, but it brings challenges in coordination, state management, and error handling, problems Anthropic says it has yet to fully solve.
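The difference between waiting for a whole batch and asynchronous execution can be illustrated with Python's `asyncio`. In this hypothetical sketch, the coordinator uses `asyncio.wait(..., return_when=asyncio.FIRST_COMPLETED)` to handle each sub-agent as soon as it finishes, launches follow-up sub-agents on the fly, and records failures instead of aborting. The `subagent` stub, its follow-up rule, and the `max_tasks` cap are assumptions for illustration; even this toy needs its own answers to the coordination, state, and error-handling questions the article raises.

```python
import asyncio


async def subagent(task: str) -> list[str]:
    """Sub-agent (stub): returns follow-up tasks it wants explored."""
    await asyncio.sleep(0.01)  # stands in for model calls and tool use
    if task.startswith("fail"):
        raise RuntimeError(f"sub-agent failed on {task!r}")
    # Toy rule: each top-level task uncovers one follow-up; follow-ups end the chain.
    return [] if task.endswith("/followup") else [f"{task}/followup"]


async def run_async(initial: list[str], max_tasks: int = 10) -> tuple[list[str], list[str]]:
    """Handle sub-agents as they finish and spawn follow-ups without waiting for a batch."""
    pending = {asyncio.create_task(subagent(t)): t for t in initial}
    launched = len(pending)
    completed: list[str] = []  # shared state that every finished sub-agent updates
    failed: list[str] = []
    while pending:
        # Wake up as soon as any sub-agent finishes, not when all of them have.
        finished, _ = await asyncio.wait(set(pending), return_when=asyncio.FIRST_COMPLETED)
        for task in finished:
            name = pending.pop(task)
            if task.exception() is not None:
                failed.append(name)  # isolate the error; other sub-agents keep running
                continue
            completed.append(name)
            for follow_up in task.result():
                if launched < max_tasks:  # coordination: cap the total amount of work
                    pending[asyncio.create_task(subagent(follow_up))] = follow_up
                    launched += 1
    return sorted(completed), sorted(failed)


if __name__ == "__main__":
    print(asyncio.run(run_async(["a", "b", "fail-c"])))
```

Running it with `["a", "b", "fail-c"]` completes `a`, `b`, and their follow-ups while reporting `fail-c` as failed, without the failure stopping the other sub-agents.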

- Get the latest AI news from The Decoder.