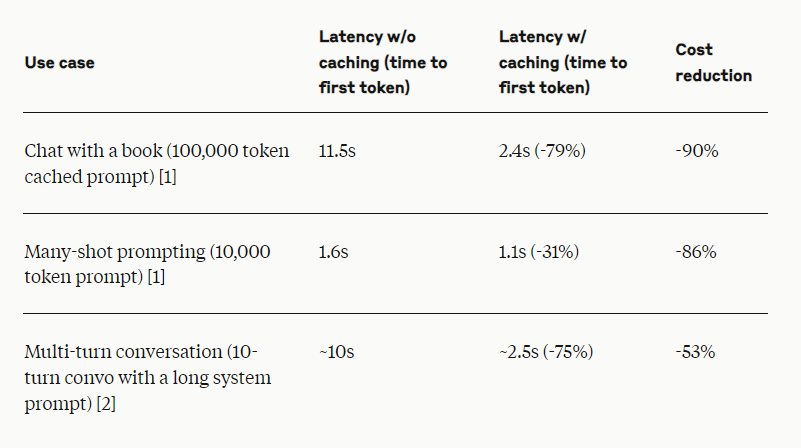

Anthropic's prompt caching feature can cut the cost of long prompts by up to 90% and reduce latency by as much as 85%, the company says. It lets developers cache frequently used context between API calls, so they can give Claude more background knowledge and examples without paying full price to reprocess the same tokens on every request. Prompt caching is now in public beta for Claude 3.5 Sonnet and Claude 3 Haiku, with support for Claude 3 Opus on the way. According to Anthropic, the feature is a good fit for chat agents, coding assistants, long document processing, detailed instruction sets, agent-based search, and tool use. It also works well for answering questions about books, papers, documentation, and podcast transcripts. Google also offers prompt caching.
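To illustrate what caching context between API calls can look like, here is a minimal sketch that builds a request body for Anthropic's Messages API with a long document marked as cacheable. It only constructs the payload and does not send anything. The `cache_control` marker, the beta header value, and the model ID are assumptions based on Anthropic's public beta documentation, not on this article, and `build_cached_request` is a hypothetical helper name.

```python
import json

# Assumed values, not taken from the article: the beta header and model ID
# follow Anthropic's public beta documentation and may change.
BETA_HEADERS = {"anthropic-beta": "prompt-caching-2024-07-31"}
MODEL = "claude-3-5-sonnet-20240620"


def build_cached_request(long_context: str, question: str) -> dict:
    """Build a Messages API request body with the long context marked cacheable.

    Hypothetical helper for illustration only; it performs no network call.
    """
    return {
        "model": MODEL,
        "max_tokens": 1024,
        "system": [
            # Short instructions, sent as a normal (uncached) block.
            {"type": "text", "text": "Answer questions about the document below."},
            # The large, reusable context. The cache_control marker asks the
            # API to cache the prompt prefix up to and including this block.
            {
                "type": "text",
                "text": long_context,
                "cache_control": {"type": "ephemeral"},
            },
        ],
        # Only this part changes from call to call.
        "messages": [{"role": "user", "content": question}],
    }


if __name__ == "__main__":
    body = build_cached_request("<full text of a book>", "Who is the narrator?")
    print(json.dumps(body, indent=2))
```

Under these assumptions, a follow-up call would reuse the same `system` blocks and change only the `messages` entry, so the cached prefix stays identical from one request to the next.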
