Google brings personalized discounts to AI search and launches open commerce protocol

The search engine company introduces personalized discount ads in its AI mode and presents an open protocol to bind retailers more closely to the Google ecosystem.

Chinese researchers have developed UniCorn, a framework designed to teach multimodal AI models to recognize and fix their own weaknesses.

DeepSeek researchers have developed a technique that makes training large language models more stable. The approach uses mathematical constraints to solve a well-known problem with expanded network architectures.

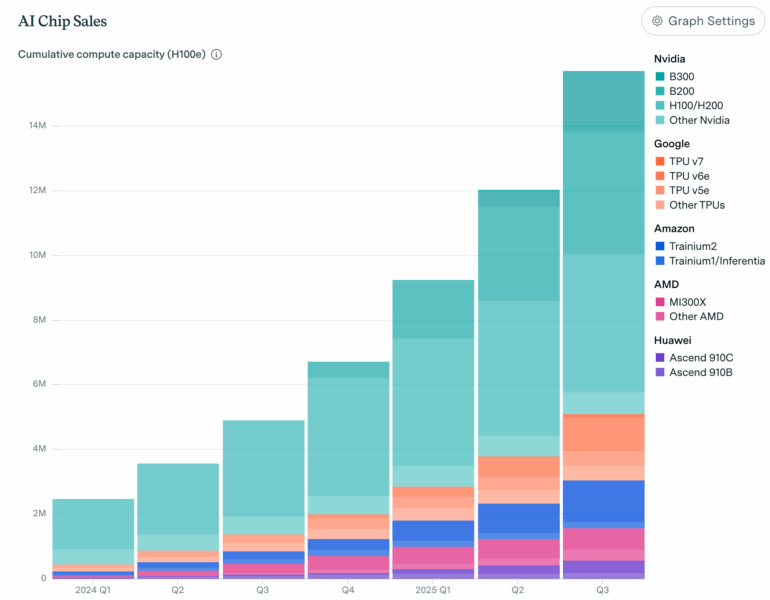

Epoch AI has released a comprehensive database of AI chip sales showing that global computing capacity now exceeds 15 million H100 equivalents, a metric that expresses the performance of different chips in units of Nvidia's H100 processor. The data, published on January 8, 2026, shows that Nvidia's new B300 chip now generates the majority of the company's AI revenue, while the older H100's share has dropped below ten percent. The analysis covers chips from Nvidia, Google, Amazon, AMD, and Huawei.

Epoch AI estimates that this hardware collectively requires more than 10 gigawatts of power, roughly twice what New York City consumes. The figures are based on financial reports and analyst estimates, since exact sales numbers are often not disclosed. The dataset is freely available and aims to bring transparency to computing capacity and energy consumption.
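Counting in H100 equivalents amounts to a weighted sum: each chip's unit count multiplied by its performance relative to one H100. The Python sketch below illustrates that arithmetic together with a naive power total. The chip names, unit counts, performance ratios, and wattages are hypothetical placeholders rather than Epoch AI's data, and the sketch is not Epoch AI's actual methodology.

```python
from dataclasses import dataclass


@dataclass
class ChipSales:
    name: str
    units: int             # chips shipped (hypothetical figure)
    relative_perf: float   # performance of one chip relative to one H100 (hypothetical)
    watts_per_chip: float  # assumed power draw per chip in watts (hypothetical)


def h100_equivalents(fleet: list[ChipSales]) -> float:
    """Weight each chip type's unit count by its performance relative to an H100."""
    return sum(c.units * c.relative_perf for c in fleet)


def total_power_gw(fleet: list[ChipSales]) -> float:
    """Sum chip power in gigawatts, ignoring cooling and other data-center overhead."""
    return sum(c.units * c.watts_per_chip for c in fleet) / 1e9


if __name__ == "__main__":
    # Illustrative numbers only; "chip_b" is a made-up accelerator, not real sales data.
    fleet = [
        ChipSales("H100", 2_000_000, 1.0, 700.0),
        ChipSales("chip_b", 1_000_000, 2.5, 1_000.0),
    ]
    print(h100_equivalents(fleet))  # 4500000.0
    print(total_power_gw(fleet))    # 2.4
```

As a rough cross-check, assuming each H100 equivalent draws about 700 W (the rated maximum of an H100 SXM), 15 million H100 equivalents would need about 10.5 GW for the chips alone, in the same range as the "more than 10 gigawatts" estimate. Real figures differ because newer chips deliver more performance per watt while data centers add cooling and networking overhead.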

Elon Musk's lawsuit against OpenAI and CEO Sam Altman is going to trial. A California federal judge announced Wednesday that she intends to reject attempts by Altman's lawyers to dismiss the case. Judge Yvonne Gonzalez Rogers said during the hearing in Oakland that there is ample evidence to proceed.

Musk accuses OpenAI of deceiving him about its shift from a nonprofit to a for-profit structure. He says he donated $38 million to the company. The trial is scheduled for March. OpenAI denies the allegations, calling the lawsuit baseless and part of ongoing harassment by Musk.

The company claims Musk was informed about its profit plans back in 2018. Musk co-founded OpenAI in 2015 and left the company in 2018.

Shares of Chinese AI startup Minimax more than doubled in value on their Hong Kong Stock Exchange debut. The stock closed up 109 percent at 345 Hong Kong dollars, CNBC reports. Minimax significantly outperformed local rival Zhipu AI, whose shares gained just 13 percent in their debut the day before. The IPO raised around $620 million for Minimax.

The company, backed by Alibaba and Tencent, develops AI models for chatbots and video generation. Despite more than 200 million users and revenue that jumped to $53.4 million, Minimax reported a $512 million loss for the first nine months of 2025. The company says it is channeling its income into research. Disney, Universal, and Warner Bros. have also been suing Minimax for copyright infringement since September 2025.

The European Commission has ordered Elon Musk's platform X to preserve all internal documents and data related to the AI chatbot Grok through the end of 2026. A Commission spokesperson confirmed the order to Reuters on Thursday. The directive expands on a preservation requirement sent to X last year that focused on algorithms and the spread of illegal content.

The order reflects the Commission's concerns about whether X is complying with EU rules. However, the measure does not mean that a new formal investigation under the Digital Services Act (DSA) has been opened.

Earlier this week, the Commission condemned as illegal the images of unclothed women and children that were generated by Grok and spread on X.