Big AI needs to overcome the high scaling costs of generative AI

Key Points

- Generative AI, such as Microsoft's GitHub Copilot, is expensive to deploy, prompting companies like Microsoft, Adobe, and Zoom to offer AI-based software upgrades at higher prices.

- To turn generative AI into a profitable business model, tech companies are working on their own AI chips and more efficient architectures. This includes developing smaller, less expensive AI systems.

- The high cost of training and running AI models without the revenue to match could discourage investment. But lowering the quality of AI output to save costs could hurt the entire business.

Tech companies are investing billions in developing and deploying generative AI. That money needs to be recouped. Recent reports and analysis show that it's not easy.

According to an anonymous source cited by the Wall Street Journal, Microsoft lost more than $20 per user per month on its generative AI coding tool GitHub Copilot in the first few months of the year. Some users reportedly cost as much as $80 per month. Microsoft charges $10 per user per month.

Microsoft loses money because the AI model that generates the code is expensive to run. GitHub Copilot is popular with developers and currently has about 1.5 million users, who constantly trigger the model to write more code.
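The reported Copilot figures can be turned into a rough back-of-the-envelope calculation. The sketch below uses only the numbers quoted above. One step is an assumption rather than a reported fact: it multiplies the average loss from early in the year by the current user count to get an approximate lower bound on the monthly loss. The variable and function names are illustrative.

```python
# Figures quoted in the article (USD per user per month).
PRICE_PER_USER = 10       # what Microsoft charges
AVG_LOSS_PER_USER = 20    # reported as "more than $20", so a lower bound
PEAK_COST_PER_USER = 80   # reported cost of the heaviest users
USERS = 1_500_000         # approximate current user count


def implied_cost(price: int, loss: int) -> int:
    """Cost to serve one user, given the price charged and the loss incurred."""
    return price + loss


# Average cost to serve one user: at least $30 per month.
avg_cost = implied_cost(PRICE_PER_USER, AVG_LOSS_PER_USER)

# Loss on the heaviest users: $80 cost minus the $10 subscription.
peak_loss = PEAK_COST_PER_USER - PRICE_PER_USER

# ASSUMPTION: the early-year average loss still applies to all current users.
monthly_loss_lower_bound = AVG_LOSS_PER_USER * USERS

print(f"Implied average cost per user: ${avg_cost}")
print(f"Loss on heaviest users: ${peak_loss}")
print(f"Rough monthly loss (lower bound): ${monthly_loss_lower_bound:,}")
```

Under these assumptions, the subscription price would have to roughly triple to cover the average user, which is consistent with the higher-priced AI upgrades other vendors are introducing.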

Companies like Microsoft, Adobe, Google, and Zoom are already responding to higher-than-usual operating costs by offering AI-based upgrades to their software at higher prices. Adobe is introducing usage limits and pay-as-you-go pricing. Zoom is cutting costs with simpler in-house AI.

"We are trying to provide great value but also protect ourselves on the cost side," Adobe CEO Shantanu Narayen said. Amazon Web Services CEO Adam Selipsky said customers are "unhappy" with the high cost of running AI models.

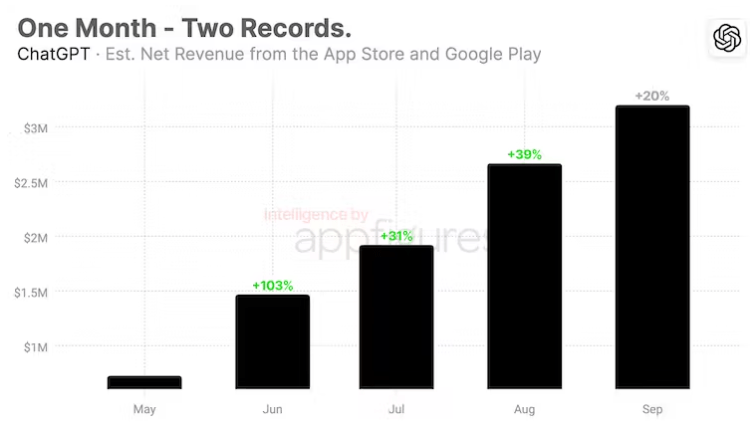

ChatGPT app revenue growth reportedly declining

Another issue is the scarcity and cost of chips. OpenAI partners with Microsoft and uses a supercomputer with 10,000 Nvidia GPUs for its services. Each query costs about 4 cents, according to Bernstein analyst Stacy Rasgon.

If those queries were one-tenth the volume of Google searches, Rasgon says, the initial investment in GPUs would be about $48.1 billion, with annual maintenance costs of about $16 billion.
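To get a feel for how a per-query cost of about 4 cents adds up, here is a minimal sketch. The per-query cost and the two GPU figures come from Rasgon's estimates above. The daily query volume is a purely hypothetical placeholder, not a reported number, and the function name is illustrative.

```python
# Figures from Rasgon's estimates quoted in the article.
COST_PER_QUERY_CENTS = 4        # about 4 cents per query
GPU_INVESTMENT_BN = 48.1        # initial GPU investment, billions of USD
ANNUAL_MAINTENANCE_BN = 16.0    # annual maintenance, billions of USD

# HYPOTHETICAL: an illustrative volume only, not a figure from the article.
HYPOTHETICAL_QUERIES_PER_DAY = 1_000_000


def serving_cost_usd(queries: int) -> float:
    """Cost in USD of answering the given number of queries."""
    return queries * COST_PER_QUERY_CENTS / 100


daily_cost = serving_cost_usd(HYPOTHETICAL_QUERIES_PER_DAY)
annual_cost = daily_cost * 365

# Annual maintenance as a share of the initial GPU investment.
maintenance_share = ANNUAL_MAINTENANCE_BN / GPU_INVESTMENT_BN

print(f"Daily cost at the hypothetical volume: ${daily_cost:,.0f}")
print(f"Annual cost at the hypothetical volume: ${annual_cost:,.0f}")
print(f"Maintenance as share of GPU investment: {maintenance_share:.0%}")
```

Because the cost scales linearly with query volume, any other volume can be substituted for the placeholder. By these estimates, annual maintenance alone would be about a third of the initial hardware outlay.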

With that in mind, there is an upside for OpenAI in analytics platform Appfigures' estimate that ChatGPT app revenue growth fell from as much as 39 percent in August to 20 percent in September. September revenue is estimated at $4.58 million.

According to Appfigures, the ChatGPT app has been downloaded about 52 million times so far, with 15.6 million new installations in September alone. Of those, 9 million came via Android and 6.6 million via iOS.

The slowdown in revenue growth may indicate that the app has reached the limit of users willing to pay for GPT-4, the paid model, which is significantly more expensive to run than GPT-3.5 but also clearly superior in quality.

Breaking the AI cost trap with proprietary AI chips and more efficient architectures

To turn generative AI into a business model in the medium term, the big tech companies are trying to become less dependent on Nvidia, which currently dictates prices for AI chips. Amazon and Google already make their own chips. Microsoft is expected to unveil its first AI chip soon, and OpenAI is reportedly also considering producing its own chips.

Companies are also researching more efficient AI architectures. Smaller AI models trained on particularly high-quality data can match the quality of larger models, at least in selected use cases. Microsoft's Phi model, for example, demonstrates this.

Microsoft research chief Peter Lee has tasked "many" of Microsoft's 1,500 researchers with developing conversational AI models like Phi that are smaller and cheaper to run, The Information recently reported. The transformation at Microsoft is just beginning, according to the site.

At what point is generative AI good enough?

Focusing on cost efficiency rather than quality at this early stage introduces new risks: GPT-4 is just good enough for some tasks; for others, such as web browsing, the model is not yet competitive.

If providers cut quality too aggressively because of high operating expenses, users may be discouraged from using generative AI. And if generative AI doesn't grow, investment could diminish, and a market downturn could be the result.

A negative example of cost-cutting experiments is the "Balanced" mode in Bing chat, which reportedly uses cheaper language models from Microsoft in addition to GPT-4. This leads to more errors in the output, which is particularly critical in the context of elections, as AlgorithmWatch shows in a recent investigation.

The high cost of running an AI search is also likely why Google is currently testing a scaled-down version of its AI search, called Search Generative Experience. This could allow for a smoother transition between classic and generative search, first by reducing the high operating expenses, and then by bringing Google's traditional advertising business into the new search experience.
