ChatGPT beats doctors at answering online medical questions, study finds

According to a recent study, ChatGPT surpasses the quality and empathy of physicians when responding to online queries. However, there are some caveats.

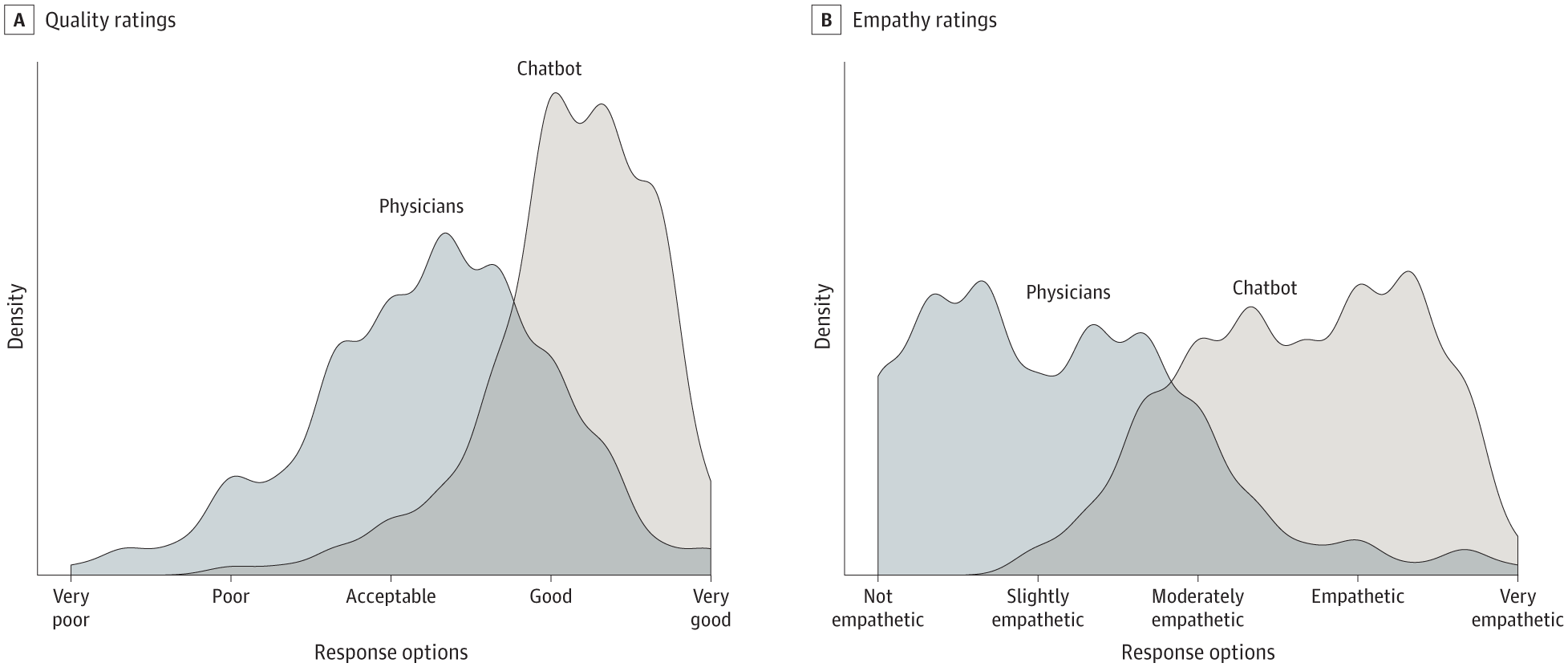

A recent study published in JAMA Internal Medicine found that ChatGPT's responses to online patient questions were rated higher than physicians' responses for both quality and empathy. The study compared ChatGPT's answers with physicians' answers to patient questions posted on Reddit's r/AskDocs forum.

The cross-sectional study involved 195 randomly selected questions. A panel of licensed health care professionals preferred the chatbot responses over the physician responses in 78.6 percent of 585 evaluations, and ChatGPT received significantly higher ratings for both quality and empathy.

Notably, the study used GPT-3.5, an older model, so the newer GPT-4 might produce even better results.

Chatbot-assisted doctors

The researchers write that AI assistants could help draft responses to patient questions, potentially benefiting both clinicians and patients. Further exploration of this technology in clinical settings is needed, including using chatbots to draft responses for doctors to edit. Randomized trials could assess the potential of AI assistants to improve responses, reduce clinician burnout, and improve patient outcomes.

Prompt and empathetic responses to patient queries could reduce unnecessary clinical visits and free up resources. Messaging is also a critical resource for promoting patient equity, benefiting individuals with mobility limitations, irregular work schedules, or fear of medical bills.

Despite the promising results, the study has limitations. The use of online forum questions may not reflect typical patient-physician interactions, and the ability of the chatbot to provide personalized details from electronic health records was not assessed.

Additionally, the study didn't evaluate the chatbot's responses for accuracy or fabricated information, which is a major concern with AI-generated medical advice. Sometimes it's harder to catch a mistake than to avoid making one, even when humans are in the loop for final review. But it's important to remember that humans make mistakes, too.

The authors conclude that more research is needed to determine the potential impact of AI assistants in clinical settings. They emphasize addressing ethical concerns, including the need for human review of AI-generated content for accuracy and potential false or fabricated information.

It's worth noting that ChatGPT is not specifically optimized for medical tasks. Google is developing Med-PaLM 2, a large language model fine-tuned for medical purposes. Google claims the model can pass medical exams and says it plans to start trials with professionals.
