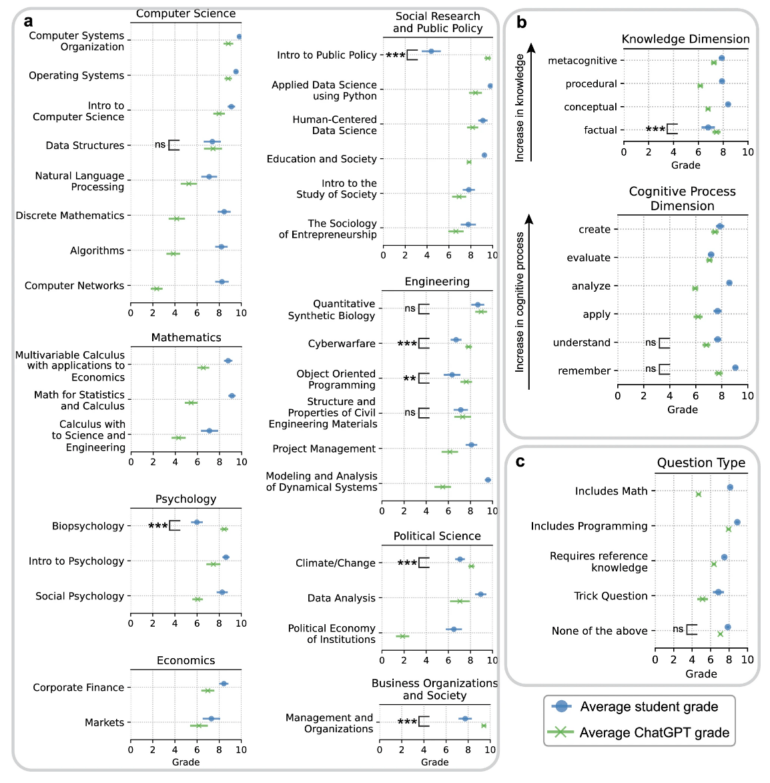

A study confirms anecdotal reports: ChatGPT can achieve academic performance comparable to that of students.

A study published in Scientific Reports compared the performance of students and ChatGPT on the same tasks. For the experiment, instructors of 32 courses at New York University Abu Dhabi (NYUAD) were first asked to provide ten questions from their respective courses, along with three randomly selected student answers to each question.

The researchers then used ChatGPT to generate three different answers to each question. The questions were entered directly into ChatGPT without any additional context in the prompt.

The study does not state clearly whether GPT-3.5 or GPT-4 was used, although GPT-4 is mentioned in the references. If GPT-3.5 was used, GPT-4 could have produced considerably better answers, especially on tasks that require reasoning.

ChatGPT matches or beats students in 9 of 32 subjects

After the ChatGPT responses were generated, they were mixed with the student responses and graded by three different reviewers. ChatGPT performed as well as or better than the students in nine of the 32 subjects. These nine subjects were:

- Data Structures

- Introduction to Public Policy

- Quantitative Synthetic Biology

- Cyberwarfare

- Object Oriented Programming

- Structure and Properties of Civil Engineering Materials

- Biopsychology

- Climate/Change

- Management and Organizations

ChatGPT performed best in subjects that demanded extensive factual knowledge. In "Introduction to Public Policy", it scored on average more than twice as high as the students. By contrast, students outperformed ChatGPT on mathematics and economics tasks that required higher-order reasoning.

AI text detectors fail

The researchers also tested whether human and machine text could be reliably told apart using two detectors: OpenAI's AI Text Classifier, which the company has since withdrawn because of its low accuracy, and GPTZero.

The OpenAI tool misclassified five percent of human-written text as machine-generated, and GPTZero misclassified 18 percent. This is a disastrous result given the potential consequences for the students involved, who could be falsely accused of cheating.

Conversely, the OpenAI tool classified 49 percent of machine-generated text as human, compared to 32 percent for GPTZero. With either tool, a large share of AI-generated text would pass as human-written.

This finding is significant in light of a survey, also part of the study, of 1,601 students and teachers in Brazil, India, Japan, the United States and the United Kingdom. Of the students surveyed, 74 percent want to use ChatGPT for their work, while 70 percent of the teachers want to report such use as plagiarism if they notice it.