Deepseek's language models could deliver massive profits even when priced far below OpenAI's

Newly released data from Chinese AI provider Deepseek reveals that AI language models could, in theory, generate substantial profit margins, even at prices significantly lower than OpenAI’s.

Deepseek has offered a rare glimpse into the operating costs and potential profitability of its AI services. The numbers suggest the company could achieve a theoretical cost-profit margin of 545 percent (profit measured relative to daily hardware cost) if it fully monetized its services, even while maintaining its open source strategy and charging less than competitors like OpenAI.

Smart resource management keeps costs down

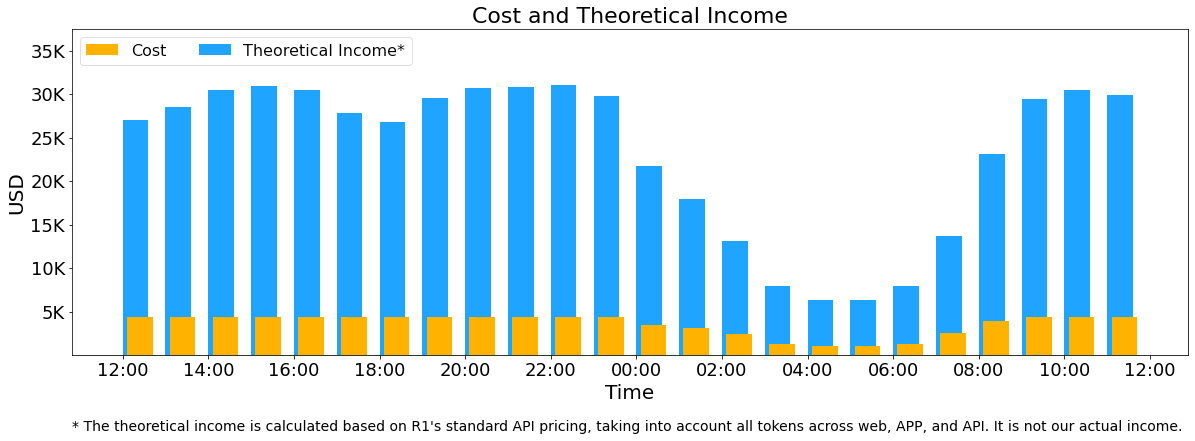

During a 24-hour test period, Deepseek's models processed 608 billion input tokens and 168 billion output tokens. The company managed to serve more than half of the inputs (56.3 percent) from a cache, significantly reducing costs.

To maximize efficiency, Deepseek uses a dynamic resource allocation system. During peak daytime hours, all nodes handle inference requests. At night, when demand drops, the company redirects resources to research and training tasks.

The hardware infrastructure for this operation costs $87,072 per day, using an average of 226.75 server nodes. Each node contains eight Nvidia H800 GPUs, with calculations based on an estimated leasing cost of two dollars per GPU per hour.
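The daily cost figure follows directly from the node count, GPUs per node, and the assumed leasing rate. A minimal sketch of that arithmetic, with function and parameter names chosen here for illustration rather than taken from Deepseek:

```python
def daily_hardware_cost(avg_nodes: float,
                        gpus_per_node: int,
                        usd_per_gpu_hour: float,
                        hours: int = 24) -> float:
    """Leasing cost in USD for one day of operation."""
    return avg_nodes * gpus_per_node * usd_per_gpu_hour * hours


# Figures reported in the article: 226.75 nodes on average,
# eight H800 GPUs per node, an estimated $2 per GPU per hour.
cost = daily_hardware_cost(226.75, 8, 2.0)
print(f"${cost:,.0f} per day")  # prints "$87,072 per day"
```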

A single H800 node processes about 73,700 input tokens per second during prefilling or 14,800 output tokens per second during decoding. The average output speed reaches 20 to 22 tokens per second.

If Deepseek charged full price for every processed token using its premium R1 model rates ($0.14 per million input tokens for cache hits, $0.55 for cache misses, and $2.19 per million output tokens), daily revenue would reach $562,027.
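The revenue estimate and the 545 percent margin can be roughly reproduced from the published token counts, cache hit rate, and R1 prices. The sketch below is illustrative only: the names are assumptions made here, and because the article's inputs are rounded, the result lands close to, but not exactly on, the reported $562,027.

```python
# Figures from the article (24-hour period, R1 pricing in USD per million tokens).
INPUT_TOKENS = 608e9
OUTPUT_TOKENS = 168e9
CACHE_HIT_RATE = 0.563
PRICE_CACHE_HIT = 0.14
PRICE_CACHE_MISS = 0.55
PRICE_OUTPUT = 2.19
DAILY_COST_USD = 87_072  # hardware leasing cost reported in the article


def theoretical_daily_revenue() -> float:
    """Revenue if every processed token were billed at R1 rates."""
    hit_tokens = INPUT_TOKENS * CACHE_HIT_RATE
    miss_tokens = INPUT_TOKENS - hit_tokens
    return (hit_tokens * PRICE_CACHE_HIT
            + miss_tokens * PRICE_CACHE_MISS
            + OUTPUT_TOKENS * PRICE_OUTPUT) / 1e6


def cost_profit_margin(revenue: float, cost: float) -> float:
    """Profit divided by cost, as a fraction (5.45 means 545 percent)."""
    return (revenue - cost) / cost


revenue = theoretical_daily_revenue()
margin = cost_profit_margin(revenue, DAILY_COST_USD)
print(f"revenue ≈ ${revenue:,.0f}, margin ≈ {margin:.0%}")
```

Note that the margin is computed against cost, not revenue, which is why it can exceed 100 percent. Output tokens account for roughly two thirds of the theoretical revenue in this calculation.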

But real-world revenues fall well below these theoretical figures, the company says. Deepseek's standard V3 model is priced below R1, most services are offered for free, and the company applies nightly discounts. For now, only API access is generating revenue.

The commoditization of AI services

Deepseek's unusual transparency reflects an industry dynamic: while AI language models can theoretically generate substantial profit margins, capturing that value proves difficult. Between market competition, tiered pricing structures, and the need to provide free services, actual profits tend to shrink dramatically.

In this context, OpenAI's recent pricing strategy is particularly noteworthy. The company's latest model, GPT-4.5, commands premium prices far above both its predecessors and competitors such as Deepseek, despite offering only modest performance improvements.

Deepseek's data suggests that language models are evolving into commodity services where premium pricing no longer reflects actual performance advantages. This creates additional pressure on Western AI companies like OpenAI, which are losing billions and facing significant operating costs while market pressures drive down prices.

This may be one reason why OpenAI's GTM Manager Adam Goldberg recently emphasized that success in AI requires controlling the entire value chain, from infrastructure and data to models and applications. As language models become commoditized, the competitive advantage may lie less in the models themselves and more in a company's ability to integrate and optimize across the complete technology stack.
