"Edge of Chaos": Yale study finds sweet spot in data complexity helps AI learn better

Yale University researchers have found that AI models learn best when trained on data that hits a specific complexity level – not too simple, not too chaotic.

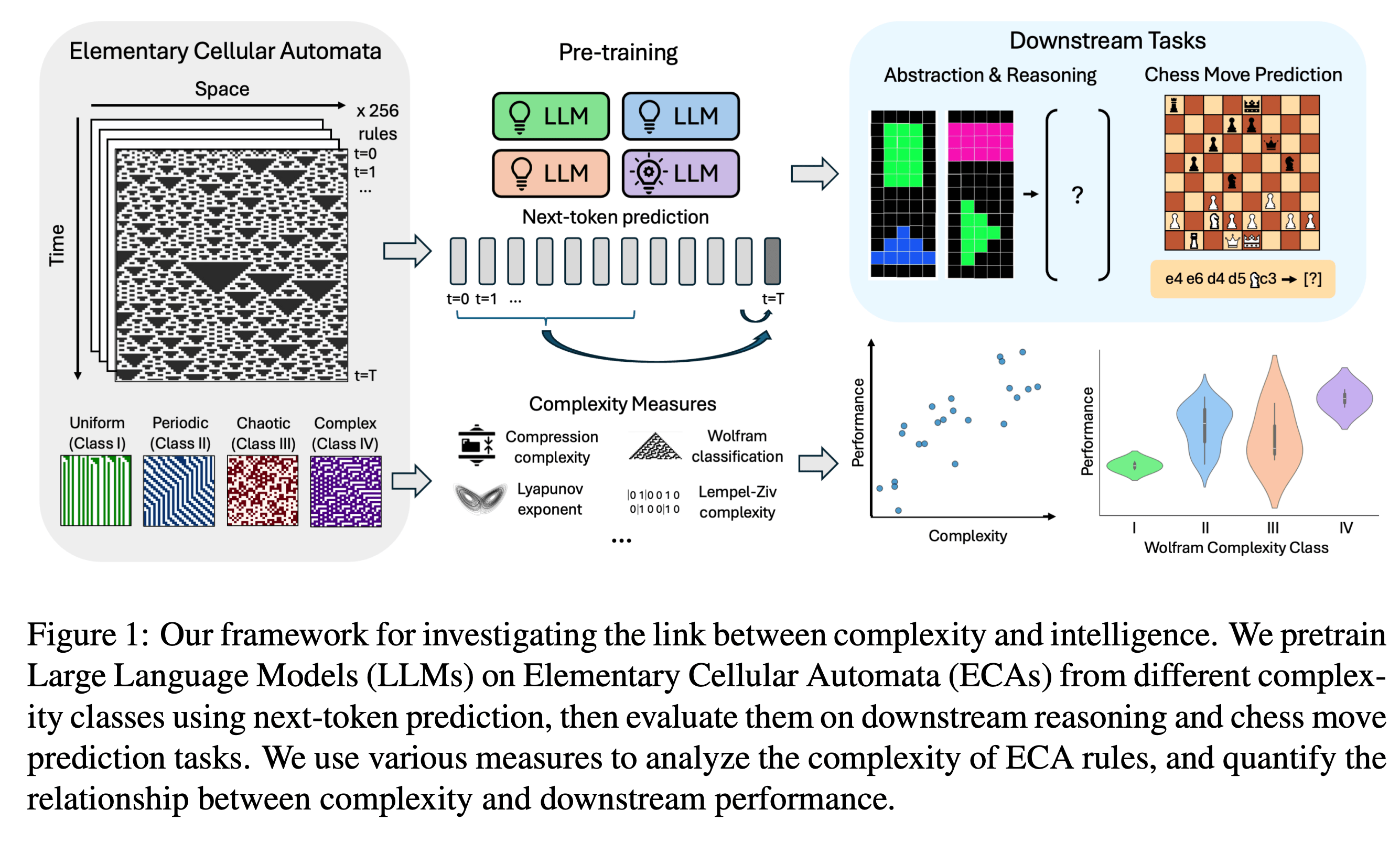

The research team trained various language models on data generated by elementary cellular automata (ECAs), simple one-dimensional systems in which the next state of each cell depends only on its own current state and the states of its two neighbors. Although the rules are basic, these systems can generate anything from simple to highly complex patterns. The researchers then evaluated the trained models on reasoning tasks and on predicting chess moves.
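To make the ECA update rule concrete, here is a minimal Python sketch. It is an illustration only, not the researchers' data pipeline: the function names `eca_step` and `eca_run`, the wrap-around (periodic) boundary, and the single-cell starting state are all assumptions made for this example. The rule number (0 to 255) uses Wolfram's standard numbering, where each of the eight possible neighborhoods selects one bit of the rule.

```python
def eca_step(cells, rule):
    """Apply one update of an elementary cellular automaton.

    cells: list of 0/1 values; rule: integer 0-255 (Wolfram numbering).
    Assumption for this sketch: the row wraps around at the edges.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left = cells[(i - 1) % n]
        center = cells[i]
        right = cells[(i + 1) % n]
        # The three cells form a 3-bit index into the rule's binary digits.
        idx = (left << 2) | (center << 1) | right
        nxt.append((rule >> idx) & 1)
    return nxt


def eca_run(rule, width, steps):
    """Return the initial row plus `steps` updated rows.

    Assumption for this sketch: start from a single live cell in the middle.
    """
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = eca_step(cells, rule)
        rows.append(cells)
    return rows


if __name__ == "__main__":
    # Rule 110 is a commonly cited example of a rule with complex behavior.
    for row in eca_run(110, 31, 15):
        print("".join("#" if c else "." for c in row))
```

Swapping in a different rule number, for example `eca_run(90, 31, 15)`, shows how the same three-cell update can produce very different patterns.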

The study found that AI models trained on more complex ECA rules performed better on downstream tasks such as reasoning and predicting chess moves. Models trained on ECAs that fall into Class IV of Wolfram's classification performed particularly well. These rules produce patterns that are neither completely ordered nor completely chaotic, but exhibit a kind of structured complexity.

The "Edge of Chaos"

"Surprisingly, we find that models can learn complex solutions when trained on simple rules. Our results point to an optimal complexity level, or 'edge of chaos', conducive to intelligence, where the system is structured yet challenging to predict," the authors say.

Models exposed to very simple patterns tended to learn trivial solutions, while those trained on more complex patterns developed more sophisticated abilities, even when simpler approaches were available. The researchers suspect that this complexity in the learned representations is a key factor enabling the models to transfer their knowledge to other tasks.

The findings could shed light on why large language models like GPT-3 and GPT-4 are so effective. According to the researchers, the sheer volume and diversity of training data used in these models might create benefits similar to those seen with complex ECA patterns in their study.

The team notes that more research is needed to verify this connection. They're planning to test their theory by expanding their experiments to include larger models and more complex systems.
