Elon Musk's AI startup xAI has announced the release of its latest model, Grok-1.5.

The new model will soon be available to existing users and early testers on the X platform. New features include enhanced reasoning capabilities and a context length of 128,000 tokens, according to xAI.

Context length refers to the amount of text the model can process in one go, measured in tokens. 128,000 tokens correspond to around 100,000 words or 300 book pages. This means Grok-1.5 can handle more complex prompts with more examples.
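As a rough illustration of that conversion, the following sketch derives words-per-token and words-per-page ratios from the figures quoted above and applies them to other context sizes. The function name `estimate_capacity` is hypothetical, and the ratios are only approximations: real values depend on the tokenizer, the language, and the page layout.

```python
# Ratios implied by the article's figures (128,000 tokens ~ 100,000 words ~ 300 pages).
# These are rough assumptions, not properties of any specific tokenizer.
WORDS_PER_TOKEN = 100_000 / 128_000  # about 0.78 words per token
WORDS_PER_PAGE = 100_000 / 300       # about 333 words per page


def estimate_capacity(context_tokens: int) -> tuple[int, int]:
    """Return an approximate (words, pages) capacity for a context length in tokens."""
    words = context_tokens * WORDS_PER_TOKEN
    pages = words / WORDS_PER_PAGE
    return round(words), round(pages)


if __name__ == "__main__":
    for tokens in (8_000, 32_000, 128_000):
        words, pages = estimate_capacity(tokens)
        print(f"{tokens} tokens: roughly {words} words or {pages} pages")
```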

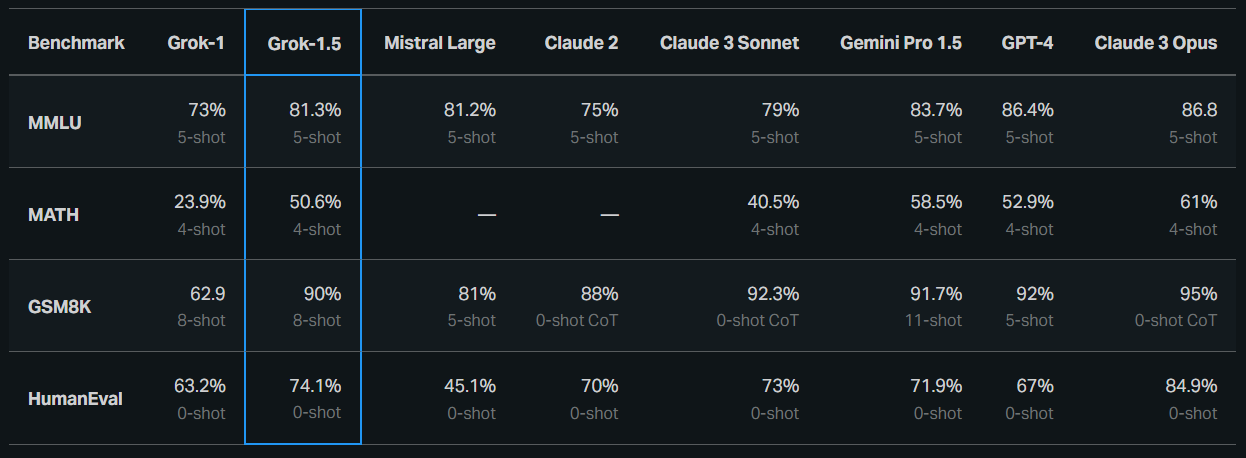

In tests, Grok-1.5 scored 50.6% on the MATH benchmark and 90% on the GSM8K benchmark. Both benchmarks cover a wide range of math problems from elementary school to high school competition level. For code generation and problem solving, Grok-1.5 achieved a 74.1% score on the HumanEval benchmark.

On the MMLU language comprehension benchmark, Grok-1.5 scored around 81%. This is a big jump from Grok-1's 73%, but well behind the current leaders GPT-4 and Claude 3 Opus, which each scored around 86%. OpenAI may also have its next model in the pipeline for this summer.

In the "needle in a haystack" test, which checks whether the AI model can reliably find specific information within the context window, Grok-1.5 achieved a perfect result. However, the test is not very meaningful because it uses the language model like an expensive search function.
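To make the setup concrete, here is a minimal sketch of how such a test can be structured. It is not xAI's evaluation code: the helper names, the filler sentence, and the "secret code" needle are all hypothetical. The stand-in "model" is a plain keyword search, which also passes at every depth and so illustrates why the test says little about real comprehension.

```python
from typing import Callable


def build_haystack(filler: str, n_sentences: int, needle: str, depth: float) -> str:
    """Place `needle` at a relative depth (0.0 = start, 1.0 = end) among filler sentences."""
    sentences = [filler] * n_sentences
    sentences.insert(round(depth * n_sentences), needle)
    return " ".join(sentences)


def run_needle_test(
    ask_model: Callable[[str, str], str],
    depths: list[float],
    n_sentences: int = 1000,
) -> dict[float, bool]:
    """Check at each depth whether the model's answer contains the hidden fact."""
    needle = "The secret code is 7421."  # hypothetical needle
    question = "What is the secret code?"
    results = {}
    for depth in depths:
        context = build_haystack("The sky was grey that day.", n_sentences, needle, depth)
        answer = ask_model(context, question)
        results[depth] = "7421" in answer
    return results


def keyword_search_stub(context: str, question: str) -> str:
    """Stand-in for a model: plain text search with no language understanding."""
    for sentence in context.split(". "):
        if "secret code" in sentence:
            return sentence
    return ""


if __name__ == "__main__":
    print(run_needle_test(keyword_search_stub, [0.0, 0.25, 0.5, 0.75, 1.0]))
```

In a real evaluation, `ask_model` would wrap a call to the language model under test, and the haystack would be sized to fill the context window.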

More relevant, but much harder to test, would be things like the number of errors or omissions when summarizing very large documents. Other AI companies, such as Google or Anthropic, also use this ultimately misleading benchmark to boast about the performance of their models' context windows.

xAI is working on making AI training more efficient

xAI emphasizes its focus on innovation, particularly in the training framework. Grok-1.5 is based on a specialized distributed training framework built on JAX, Rust, and Kubernetes, the company says. This training stack allows the team to prototype ideas and train new architectures at scale with minimal effort.

One of the biggest challenges in training large language models (LLMs) on large compute clusters is optimizing the reliability and availability of the training job, xAI says.

xAI's custom training orchestrator is designed to ensure that problematic nodes are automatically detected and removed from the training job. Checkpointing, data loading and restarting of training jobs have also been optimized to minimize downtime in the event of a failure.
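xAI has not published details of this orchestrator, so the following is only a toy simulation of the general idea: drop unhealthy nodes from the job and resume from the most recent checkpoint. The `TrainingJob` class, its fields, and the checkpoint interval are all hypothetical and do not reflect xAI's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class TrainingJob:
    """Toy model of a training job that tolerates node failures (illustrative only)."""

    nodes: set[str]
    checkpoint_every: int = 10  # assumed interval, in steps
    step: int = 0
    last_checkpoint: int = 0
    removed: list[str] = field(default_factory=list)

    def run_step(self, unhealthy: set[str]) -> None:
        """Advance one step, or recover if any participating node is unhealthy."""
        bad = self.nodes & unhealthy
        if bad:
            # Remove the failing nodes and roll back to the last saved checkpoint.
            self.nodes -= bad
            self.removed.extend(sorted(bad))
            self.step = self.last_checkpoint
            return
        self.step += 1
        if self.step % self.checkpoint_every == 0:
            # Record a checkpoint so a later failure loses at most a few steps.
            self.last_checkpoint = self.step


if __name__ == "__main__":
    job = TrainingJob(nodes={"node-a", "node-b", "node-c"})
    for _ in range(15):
        job.run_step(unhealthy=set())
    job.run_step(unhealthy={"node-b"})  # simulated hardware fault
    print(job.step, sorted(job.nodes), job.removed)
```

The trade-off this sketch hints at is the checkpoint interval: frequent checkpoints reduce the work lost per failure but add overhead to every healthy run.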

xAI open-sourced Grok-1 about two weeks ago. It is the largest mixture-of-experts model available as open source to date. However, it lags behind the performance of smaller and more efficient open-source models. xAI did not comment on any plans to open-source Grok-1.5.