A group of EU policymakers proposes three risk categories for AI applications.

AI has long been part of everyday life. It mostly goes unnoticed by users, hidden in the software on their smartphones, in their search engines, or in their autonomous robot vacuum cleaners.

But how should we deal with AI decisions and AI-generated content? Or with the data that has to be collected and processed when using AI software? Policymakers in the U.S. and Europe have not yet provided comprehensive, uniform rules for this.

Self-regulation is not enough

In the absence of effective legislation, many prominent tech companies with flagship applications are regulating themselves. Google, for example, has not publicly released powerful AI systems such as Parti (image generation) or LaMDA (dialogue) because of the risk that they could produce morally questionable content or even violate laws.

OpenAI likewise did not release DALL-E 2 until, from its own perspective, it had sufficient safeguards in place against the generation of problematic content. However, these safeguards are sometimes controversial: they limit the technical capabilities of the systems and thus restrict creative freedom. DALL-E 2, for example, has only been allowed to generate faces for the past few weeks.

The example of Clearview shows that policymakers should not rely on self-regulation. The company's AI-based system could in principle enable mass surveillance using facial data scraped from the internet, and it is used internationally, in some cases illegally. Despite considerable pushback and the threat of fines running into the millions from EU data protection authorities, Clearview intends to keep pursuing its own commercial interests.

At best, risk awareness is feigned. In addition, the capabilities of AI are sometimes misjudged, as with emotion recognition, or overestimated, as with the reliability and accuracy of even relatively proven systems such as facial recognition when deployed at scale.

EU policy: three risk categories for artificial intelligence

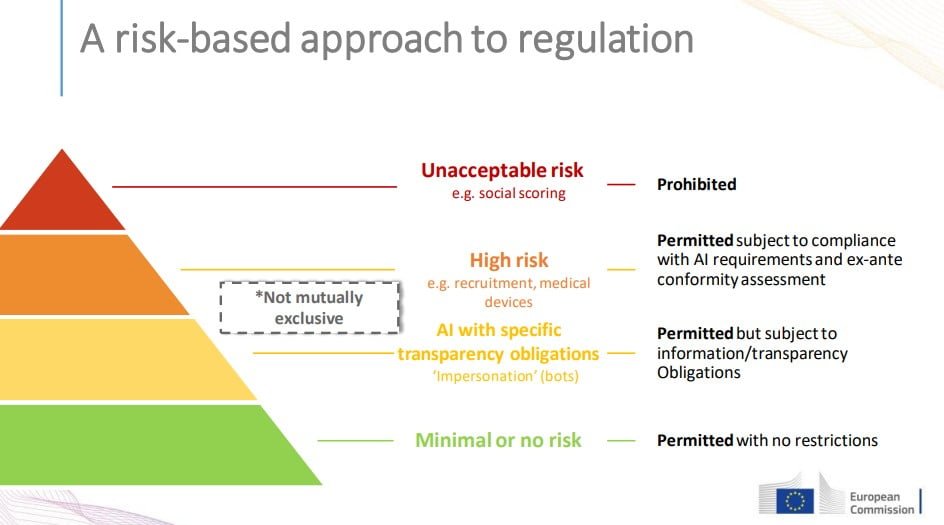

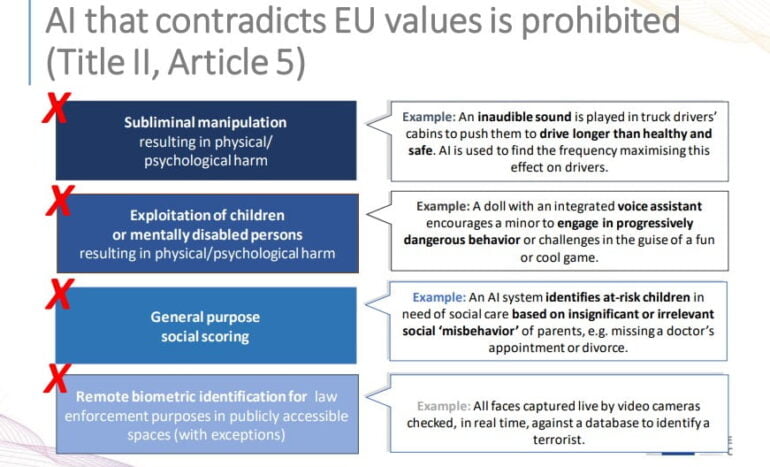

The Artificial Intelligence Act, proposed by a group of EU politicians, aims to sort AI applications into three risk categories. Depending on its category, an application may be banned outright, or it may become subject to information and transparency requirements as well as to laws that are yet to be developed.

The EU policy group classifies as an unacceptable risk AI surveillance for social scoring systems, such as those in use in China, as well as applications that otherwise violate EU values. Such systems would be banned.

High-risk applications include CV-scanning tools that rank job applicants, i.e. software that assesses whether an applicant is suitable for a job. Such systems are to be covered by separate laws. The list of possible high-risk applications is still being drawn up.

Applications with minimal or no risk are to remain "largely unregulated." This covers every application that falls into neither the high-risk nor the unacceptable-risk category. The EU group expects that "most AI applications" will not fall into the high-risk category.

The EU AI Act could come into force by the end of 2024.