- Further information on AI content theft added

Update, December 19, 2023:

According to Business Insider, the website Exceljet, which offers Microsoft Excel tutorials, was the target of the AI-powered "SEO heist" (see below). Online marketer Jake Ward used AI to clone thousands of Exceljet articles for his client and Exceljet competitor Causal, a startup that offers business planning software.

The move initially led to a significant increase in traffic for Causal and a decrease for Exceljet. This raises questions about the ethical use of AI in content creation and its impact on search engine rankings. It also shows how difficult it is for Google to differentiate between human-generated and AI-generated content (again, see below).

Marketer Ward argues that his use of AI is simply an efficient form of SEO strategy.

"Most websites, especially large-scale projects, will hire cheap writers who know nothing about the topic they're writing about," Ward said. "They copy and regurgitate content from the top few Google results. AI is smarter than 99% of the people writing those articles."

Google disagrees: Ward's client, Causal, has reportedly lost most of its AI traffic. It dropped from 610,000 visits in early October to 190,000 in mid-December.

That would put Causal's site below the roughly 200,000 visits it had in early 2023, before Ward's campaign. Traffic figures are estimates from the web analytics platform Ahrefs.

Original article dated November 27, 2023:

Generative AI is Google's worst enemy and greatest opportunity

A new SEO experiment shows that Google can't handle AI-generated content. It's just one example of many.

AI threatens Google's core Internet search business on two fronts: Chatbots like ChatGPT could one day become the primary way people search for digital information. A library of websites, like the classic Google search, might not become obsolete. But it would become much less important.

At the same time, and this is the more immediate threat, more and more AI-generated content is making its way onto the web. Google has to provide good search results despite this growing flood of near-duplicate content.

These two threats are mutually reinforcing: the worse web search gets, the more attractive chatbots become as a replacement. This could lead to a downward spiral for Google.

Google can't control AI spam

Google has been struggling with AI content for months. In June, a study by Newsguard showed how quickly news sites with AI-only content are growing.

They sometimes publish hundreds, or even more than a thousand, articles a day and generate millions of hits with these spam strategies. Google even profits from this, because the site owners place banners from Google AdSense, Google's advertising service for publishers, on their pages.

The site "gameishard.gg" is one example: it reached an audience of millions overnight with generic AI content through Google channels such as Search and Discover. Google knows about the site, yet it continues to generate traffic.

Perhaps Google feels its hands are tied because it has already stated that it won't penalize AI content in general, but will focus on its usefulness. But usefulness is an elastic term, and Google is only providing pseudo-transparency by saying what it expects from content that wants to rank well, without saying how it actually ranks the content (for understandable reasons).

If Google were to take a more aggressive stance against AI content, it would likely face more questions about its own AI content strategy, which is not fundamentally different from that of these AI content sites: using AI to automatically generate answers to common Internet questions.

The great SEO heist by Jake Ward

SEO marketer Jake Ward has now successfully taken the principle of AI spam to the extreme. His campaign, which he calls an "SEO heist," brought him massive traffic in a very short time with minimal effort.

First, Ward scanned his competitors' websites. This gave him an extensive list of existing content, which he used as a starting point to generate new content ideas and article structures using AI. He did this with Byword, a GPT-4-based SEO tool, and then used the same tool to generate entire articles from these suggestions.

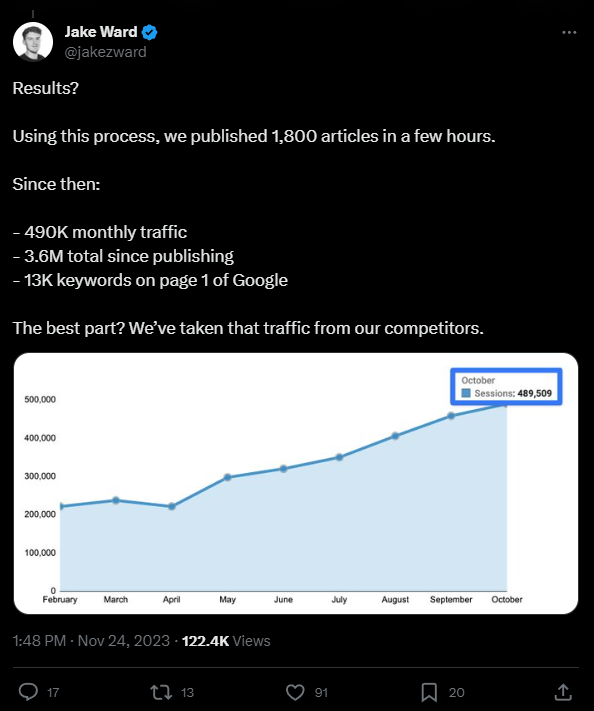

Using this method, Ward published 1,800 articles in a matter of hours, resulting in 3.6 million visits and 13,000 keywords on the first Google page since the project began.

On Twitter.com, Ward received heavy criticism from the SEO community for his move. People told him that his actions were dubious, and that he was fouling his own nest and polluting the Internet.

Perhaps these SEOs are aware that if such tactics work, then Google has a fundamental problem. And if Google has a fundamental problem, it could have a negative impact on their business.

Scenario 1: People save Google

But how could Google respond? As mentioned above, the company has made it clear that it will not penalize AI content across the board - and probably could not penalize it anyway due to a lack of reliable detectors.

One measure would be for Google to rely more on verified human content, i.e. verified authors such as professional editors. There are already signs that this is happening.

For example, Google recently began listing the name of the author of a news story next to the name of the publication. Social media posts also appear in Google's "Perspectives" search. Other human integrations into Google products are reportedly in the works.

This is the scenario: People save Google.

But it could cost Google dearly because people want to be paid for their work. And trading content for reach will eventually not be enough if Google loses its relevance as the single point of entry to the Internet.

Years of negotiations with news publishers around the world show that Google is reluctant to pay for the use of third-party content, even though it makes money from it. The search company will therefore do everything in its power to avoid relying on the human factor in the long term. Too expensive, too labor-intensive, not scalable.

Scenario 2: AI saves Google

So the second scenario is more likely: the search company will simply become the leading AI publisher itself.

The Search Generative Experience and giant AI model projects like Gemini show what Google is really focused on: Like the major social platforms, Google is evolving into a closed platform that only redirects users to an external site when unavoidable. An answer engine as envisioned by Google co-founder Sergey Brin in 2005: "The perfect search engine would be like the mind of God." According to insiders, Brin is now fully involved in Google's AI development after stepping back from day-to-day operations in the past.

Two questions remain: Will purely AI-generated content be good enough for Google's plans, given that the reliability of information plays a key role? Today's AI systems need to improve significantly so that they don't become misinformation spreaders like Microsoft's Bing chatbot or MSN.com.

And can Google make a smooth transition from the old to the new without a competitor gaining significant market share? Examples like Ward's raise doubts at the moment. But things are moving so fast that this is a snapshot at best. Google still has plenty of cards to play.