Google boasts 1.3 quadrillion tokens each month, but the figure is mostly window dressing

Google says it now processes more than 1.3 quadrillion tokens every month with its AI models. But this headline number mostly reflects computing effort, not real usage or practical value, and it raises questions about Google's own environmental claims.

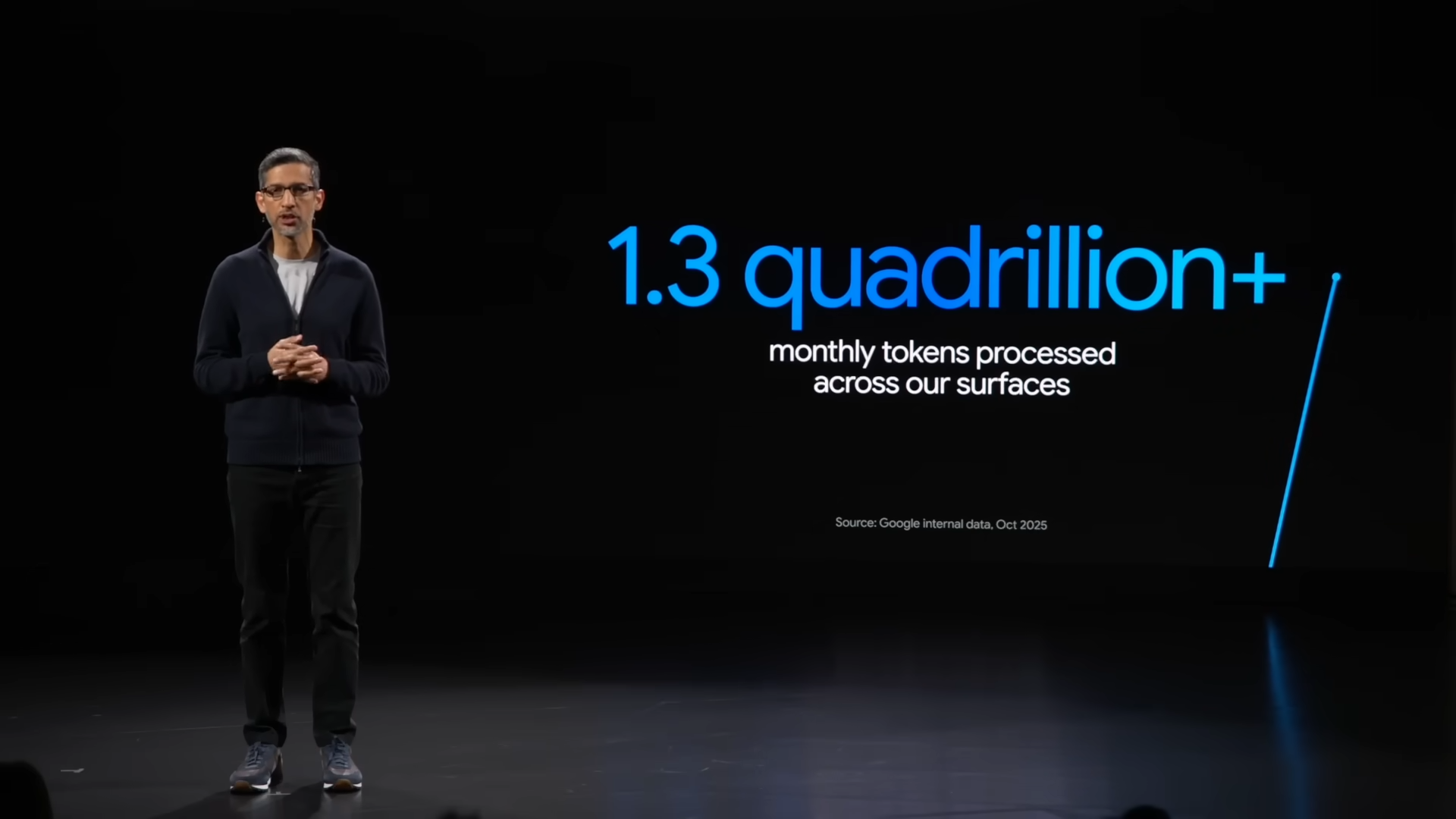

According to Google, it processes over 1.3 quadrillion tokens per month across its AI products and interfaces. Google CEO Sundar Pichai announced the new milestone at a Google Cloud event.

Google announced the milestone during a recent Google Cloud event, with CEO Sundar Pichai highlighting the figure. Back in June, Google said it had reached 980 trillion tokens, more than double May's total. The latest jump adds about 320 trillion tokens since June, but growth has already slowed, a trend not reflected in Pichai's presentation.
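The slowdown follows from Google's own figures. The sketch below compares the jump since June with the May-to-June jump. It treats "more than double" as a lower bound of 100 percent growth. Because the article gives no exact date for the new figure, the two intervals may differ in length, so this compares the size of each jump rather than true per-month growth rates.

```python
def relative_growth(earlier: float, later: float) -> float:
    """Fractional increase from an earlier monthly token total to a later one."""
    return (later - earlier) / earlier

JUNE_TOKENS = 980e12    # 980 trillion tokens per month (June figure)
LATEST_TOKENS = 1.3e15  # 1.3 quadrillion tokens per month (latest figure)

increase = LATEST_TOKENS - JUNE_TOKENS
growth_since_june = relative_growth(JUNE_TOKENS, LATEST_TOKENS)

# "More than double May's total" means the May-to-June jump exceeded 100 %.
MAY_TO_JUNE_MIN_GROWTH = 1.0

print(f"Increase since June: {increase / 1e12:.0f} trillion tokens")
print(f"Relative growth since June: {growth_since_june:.1%}")
print(f"Smaller jump than May to June: {growth_since_june < MAY_TO_JUNE_MIN_GROWTH}")
```

Roughly 33 percent growth since June compares with more than 100 percent between May and June.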

Token consumption is growing faster than actual usage

Tokens are the smallest unit processed by large language models, similar to word fragments or syllables. A huge token count sounds like surging usage, but in reality, it's primarily a measure of rising computational complexity.

The main driver is likely Google's rollout of reasoning models like Gemini 2.5 Flash. These models generate many internal "thinking" tokens for every request before producing a visible answer. Even something as basic as "Hi" can trigger dozens of processing steps in today's reasoning models before they respond.

A recent analysis found that Gemini 2.5 Flash uses about 17 times more tokens per request than its predecessor and is up to 150 times pricier for reasoning tasks. Complex features like video, image, and audio processing are also likely included in the total, but Google doesn't break them out.

So, the token number is mostly a measure of backend computing load and infrastructure scaling, not a direct indicator of user activity or actual benefit.
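The argument rests on a simple identity: total tokens equal the number of requests times the tokens consumed per request. The minimal sketch below uses made-up baseline numbers, which are hypothetical and not Google data. It applies the roughly 17x per-request increase from the analysis mentioned above and shows that the monthly token total can climb sharply even if the number of requests falls.

```python
def monthly_tokens(requests: int, tokens_per_request: int) -> int:
    """Total tokens processed = number of requests x tokens consumed per request."""
    return requests * tokens_per_request

# Hypothetical baseline (illustrative only, not Google data).
baseline_requests = 1_000_000
baseline_tokens_per_request = 1_000

# Reasoning model: ~17x more tokens per request, per the analysis cited in the article.
# Request volume is assumed (hypothetically) to FALL by half.
reasoning_requests = baseline_requests // 2
reasoning_tokens_per_request = baseline_tokens_per_request * 17

before = monthly_tokens(baseline_requests, baseline_tokens_per_request)
after = monthly_tokens(reasoning_requests, reasoning_tokens_per_request)

print(f"Requests change: {reasoning_requests / baseline_requests:.1f}x")
print(f"Token total change: {after / before:.1f}x")
```

With half as many requests, the token total still rises 8.5-fold, so token counts alone cannot show whether usage went up.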

Google's token consumption vs. Google's environmental claims

Google's new token stats also expose a key weakness in the company's own environmental report: by focusing on the smallest unit of computation, the report glosses over the real scale and environmental impact of AI operations. It claims a single Gemini request uses only 0.24 watt-hours of electricity, emits 0.03 grams of CO₂, and consumes 0.26 milliliters of water, supposedly less energy than watching TV for nine seconds.

These estimates are based on a "typical" text prompt in the Gemini app. Google doesn't clarify whether this reflects lightweight language models (likely) or the much more resource-intensive reasoning models (unlikely). The study also leaves out heavier use cases like document analysis, image or audio generation, multimodal prompts, or agent-driven web searches.
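The per-prompt figures can be checked and scaled with simple arithmetic. The sketch below derives the TV power draw implied by Google's comparison: 0.24 Wh over nine seconds works out to about 96 W. It then scales the figures linearly per million prompts. "Per million prompts" is only a unit of comparison. Linear scaling assumes every prompt is "typical," which the points above suggest is optimistic for reasoning, multimodal, or agent workloads.

```python
# Per-prompt figures Google reports for a "typical" Gemini app text prompt.
ENERGY_WH = 0.24
CO2_G = 0.03
WATER_ML = 0.26
TV_SECONDS = 9  # Google's comparison: less than nine seconds of TV

# Power draw implied by the TV comparison: energy (Wh) divided by time (h).
implied_tv_watts = ENERGY_WH / (TV_SECONDS / 3600)

def scale_per_prompt(n_prompts: int) -> dict[str, float]:
    """Linearly scale per-prompt figures; assumes every prompt is 'typical'."""
    return {
        "energy_kwh": n_prompts * ENERGY_WH / 1000,
        "co2_kg": n_prompts * CO2_G / 1000,
        "water_l": n_prompts * WATER_ML / 1000,
    }

print(f"Implied TV power draw: {implied_tv_watts:.0f} W")
print(scale_per_prompt(1_000_000))
```

A million typical prompts come to about 240 kWh, 30 kg of CO₂, and 260 liters of water. Heavier requests would push these totals higher.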

Viewed in this light, Google's 1.3 quadrillion tokens mainly highlight how rapidly its computing demands are accelerating. Yet this surge in system-wide usage doesn't appear in Google's official environmental assessment. It's a bit like an automaker touting low fuel consumption while idling, then calling the entire fleet "green" without accounting for real-world driving or manufacturing.
