Google DeepMind opens up AlphaChip, letting researchers train AI on custom chip designs

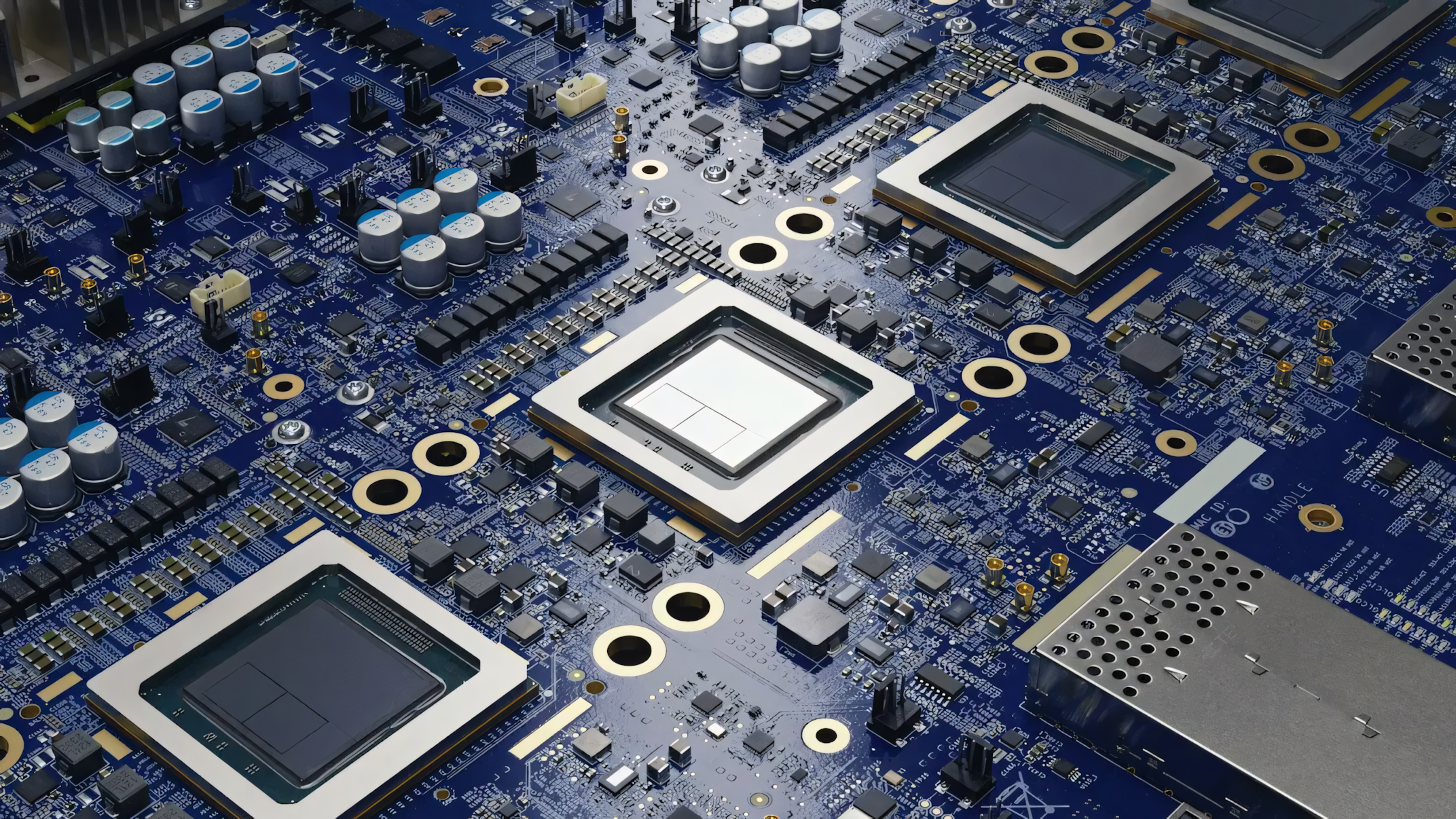

Google DeepMind has revealed more details about its AI system AlphaChip, which speeds up and improves computer chip development. The chip layouts created by AlphaChip are already being used in Google's AI accelerators.

In a follow-up to its 2021 Nature study, Google DeepMind has shared additional information about its AI system for chip design. The system, now officially called AlphaChip, uses reinforcement learning to create optimized chip layouts quickly.

According to Google DeepMind, AlphaChip has been used to design chip layouts in the last three generations of Google's Tensor Processing Unit (TPU) AI accelerator. Its contribution has grown with each generation: for the TPU v5e, AlphaChip placed 10 blocks and reduced wire length by 3.2% compared to human experts. For Trillium, the current sixth generation, this increased to 25 blocks and a 6.2% reduction.

DeepMind says AlphaChip uses an approach similar to AlphaGo and AlphaZero. It treats chip layout as a kind of game, placing circuit components one after another on a grid. A specially developed graph neural network allows the system to learn relationships between connected components and generalize across different chips.
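To make the "layout as a game" idea concrete, here is a minimal Python sketch of sequential placement on a grid. It is not AlphaChip's method: a greedy rule stands in for the learned reinforcement-learning policy and graph neural network, and half-perimeter wire length (HPWL), a common proxy for wire length, stands in for the real objective. The component names, nets, and grid size are made up for illustration.

```python
from itertools import product


def hpwl(nets, positions):
    """Half-perimeter wire length, counting only already-placed components."""
    total = 0
    for net in nets:
        pts = [positions[c] for c in net if c in positions]
        if len(pts) < 2:
            continue  # a net with fewer than two placed pins has no length yet
        xs = [x for x, _ in pts]
        ys = [y for _, y in pts]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def place_sequentially(components, nets, grid_w, grid_h):
    """Place components one after another, each on one free grid cell.

    Assumption: a greedy choice (lowest partial HPWL) replaces the
    learned policy that a system like AlphaChip would use here.
    """
    positions = {}
    free = set(product(range(grid_w), range(grid_h)))
    for comp in components:
        # sorted() makes tie-breaking deterministic
        best = min(
            sorted(free),
            key=lambda cell: hpwl(nets, {**positions, comp: cell}),
        )
        positions[comp] = best
        free.remove(best)
    return positions


# Hypothetical toy netlist: four components connected in a ring.
components = ["a", "b", "c", "d"]
nets = [("a", "b"), ("b", "c"), ("c", "d"), ("a", "d")]
layout = place_sequentially(components, nets, grid_w=3, grid_h=3)
print(layout, hpwl(nets, layout))
```

A greedy rule only looks one move ahead, so it can commit early to positions that make later components expensive to connect. Learning a policy from the final layout quality, as the article describes, is meant to avoid exactly that kind of short-sightedness.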

Besides Google, other companies are also using this approach. Chipmaker MediaTek has extended AlphaChip to develop its most advanced chips, including the Dimensity Flagship 5G used in Samsung smartphones.

AlphaChip is open source

Google DeepMind sees further potential to optimize the entire chip design cycle. Future versions of AlphaChip are expected to be used from computer architecture to manufacturing. The company hopes to make chips even faster, cheaper, and more energy-efficient.

As part of publishing the Nature follow-up, Google DeepMind has also provided some open-source resources for AlphaChip. The researchers say they've released a software repository that can fully reproduce the methods described in the original study.

External researchers can use this repository to pre-train the system on various chip blocks and then apply it to new blocks. Google DeepMind is also providing a pre-trained model checkpoint trained on 20 TPU blocks.

However, the researchers recommend pre-training on custom, application-specific blocks for best results. They've provided a tutorial explaining how to perform pre-training using the open-source repository.

The tutorial and the pre-trained model are available on GitHub.
