Google expands AI Mode with visual search and new features

Google is adding new visual search tools to AI Mode, letting users search for images using natural language and save results directly.

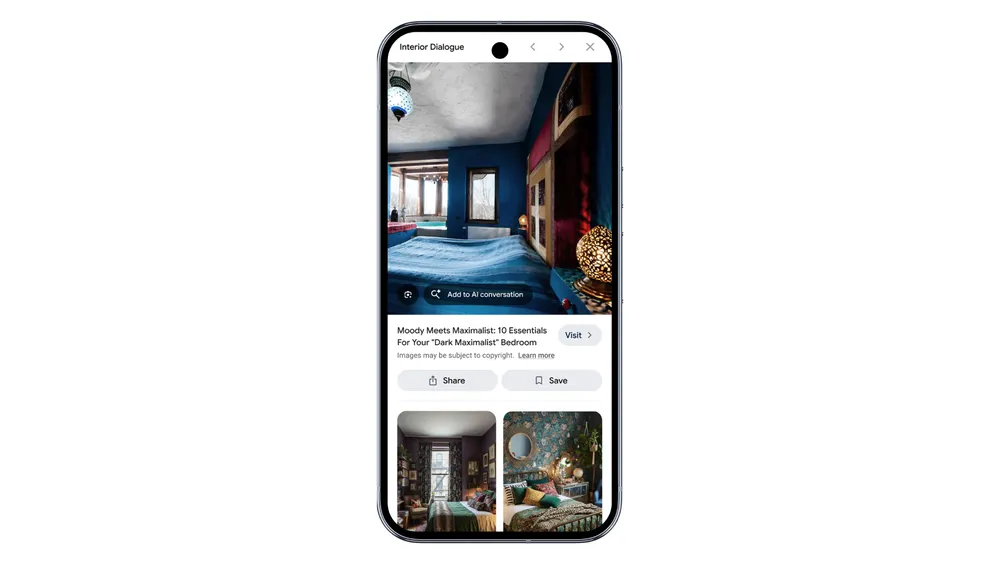

The update combines visual search with conversational queries. Users can start with a text query or an uploaded photo, then ask follow-up questions to narrow down the results. Each image result links to its original source.

Video: Google

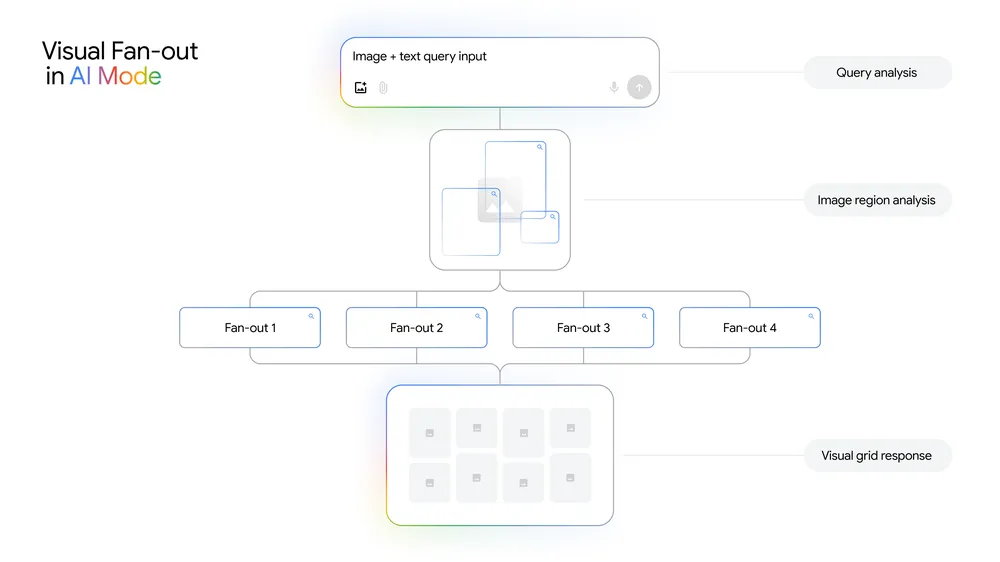

The new features build on Google's existing visual search, using the multimodal capabilities of Gemini 2.5. Google has introduced a "visual search fan-out" method that processes both images and text by launching multiple background searches at once to deliver more detailed results. Google says this approach is also being tested in other parts of its AI search, but hasn't shared technical details yet.

According to Google, the system is designed to recognize both main objects and smaller details in images, running several searches in parallel to better understand the visual context.
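Since Google has not published technical details, the following is only a rough illustration of the general fan-out idea described above, not Google's implementation. It assumes a hypothetical `run_subquery` function standing in for one background search and a hypothetical list of objects detected in the image; the sub-queries run concurrently and their results are merged without duplicates.

```python
import asyncio


async def run_subquery(subquery: str) -> list[str]:
    """Hypothetical stand-in for one background search (returns fake result IDs)."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return [f"{subquery}#1", f"{subquery}#2"]


def build_subqueries(text: str, detected_objects: list[str]) -> list[str]:
    """Pair the user's text with each detected object, main subject or small detail."""
    return [text] + [f"{text} {obj}" for obj in detected_objects]


async def fan_out(text: str, detected_objects: list[str]) -> list[str]:
    """Launch all sub-queries at once and merge results, keeping first-seen order."""
    subqueries = build_subqueries(text, detected_objects)
    batches = await asyncio.gather(*(run_subquery(q) for q in subqueries))
    seen: set[str] = set()
    merged: list[str] = []
    for batch in batches:
        for result in batch:
            if result not in seen:
                seen.add(result)
                merged.append(result)
    return merged


# Assumed example input: a text query plus two details "detected" in an image.
results = asyncio.run(fan_out("barrel jeans", ["ankle length", "dark wash"]))
```

In this sketch, `asyncio.gather` returns the batches in the same order as the sub-queries, so the merged list stays deterministic even though the searches run in parallel.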

Shopping without filters

Shopping is a major focus. Instead of using filters, users can describe what they're looking for in plain English. For example, searching for "barrel jeans that aren't too wide" shows shoppable results, which can be further refined with follow-up requests like "show me more ankle-length options." On mobile, users can even search within a specific image.

Google's shopping feature is powered by the Shopping Graph, which tracks more than 50 billion product listings and updates over 2 billion entries every hour.

The new visual features in AI Mode launch this week in the US, in English.

Google has also added more AI features to AI Mode, including Gemini 2.5 Pro and Deep Search for paying users, and an automated calling tool for local businesses.

Earlier this year at I/O 2025, Google previewed agent-based features and personalized results. Project Mariner aims to let AI handle tasks like booking tickets, and Google also showed virtual try-on tools for clothing.

Google faces competition from OpenAI, which recently launched an online shopping payment feature for ChatGPT. The feature lets users make instant purchases within chat, starting with Etsy and expanding to over a million Shopify stores. OpenAI and Stripe have also introduced the Agentic Commerce Protocol, an open-source solution for in-chat shopping.
