Google is rolling out Med-PaLM 2 on a limited basis for initial testing.

Update May 17, 2023:

Google has published the Med-PaLM 2 paper.

Update April 14, 2023:

Google Cloud has announced that Med-PaLM 2 will be rolled out to select Google Cloud customers for a "limited test" in the coming weeks. The goal, according to the company, is to explore safe, responsible, and meaningful use cases.

The medical language model could "facilitate rich, informative discussions, answer complex medical questions, and find insights in complicated and unstructured medical texts," according to Google. It can also generate short and long answers to medical questions and create summaries from internal documentation and data sets, as well as from scientific sources.

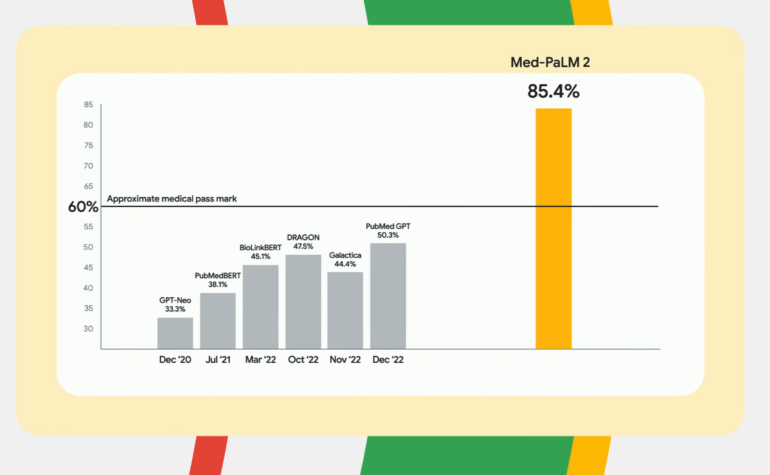

According to Google, Med-PaLM 2 is the first language model to achieve expert-level performance on U.S. Medical Licensing Examination (USMLE)-style questions, with more than 85 percent accuracy. On the MedMCQA dataset, which includes questions from India's AIIMS and NEET medical entrance exams, it scored 72.3 percent, which Google describes as a passing score.

Original article, March 18, 2023:

Google's medical language model Med-PaLM 2 passes exam questions

Med-PaLM is Google's variant of the PaLM language model optimized for medical questions. The latest version is designed to answer medical questions reliably at an expert level.

In December 2022, Google unveiled Med-PaLM, a version of its giant PaLM (Pathways Language Model) optimized for answering medical questions. Med-PaLM was developed using instruction prompt tuning, a soft prompting method, combined with example answers to medical prompts written by four clinicians.

Med-PaLM performed at the level of medical professionals on most of the benchmarks tested. According to the research team, it generated potentially harmful responses 5.9 percent of the time, compared with 5.7 percent for human experts.

Med-PaLM was also the first AI model to reach what would likely be a passing score on USMLE-style questions: it answered 67.2 percent of them correctly, against a passing threshold of about 60 percent. It handled both multiple-choice and open-ended questions and could explain the reasoning behind its answers.

Med-PaLM 2 is even more accurate, but still has gaps

At Google Health's "The Check Up" event, Google presented the next iteration of Med-PaLM. The new version, Med-PaLM 2, answers medical exam questions at an "expert doctor level," getting 85 percent of them right.

That is an improvement of about 18 percentage points over its predecessor's 67.2 percent, and well above the performance of comparable language models on medical tasks. Still, the team sees significant room for improvement before Med-PaLM 2 meets Google's quality standards. The team did not disclose what technical changes distinguish it from the first Med-PaLM.

Clinicians and non-clinicians from a range of backgrounds and countries evaluated Med-PaLM 2 against 14 criteria, including scientific factuality, accuracy, medical consensus, reasoning, bias, and harm. The team found "significant gaps when it comes to answering medical questions," but did not specify what those shortcomings are.

Together with research teams, Google plans to develop Med-PaLM further to close these gaps and to understand how the language model can improve healthcare. In the recording of the event, the Med-PaLM 2 announcement begins at approximately 16:30.