Google's ViT-22B is the largest Vision Transformer to date, with 22 billion parameters. Google says it is better aligned to humans than other models.

In the fall of 2020, Google unveiled the Vision Transformer (ViT), an AI architecture that takes the Transformer models that have been so influential in language processing and makes them useful for image tasks like object recognition.

Instead of words, the Vision Transformer processes small portions of images. At the time, Google trained three ViT models on 300 million images: ViT-Base with 86 million parameters, ViT-Large with 307 million parameters, and ViT-Huge with 632 million parameters. In June 2021, a Google ViT model broke the previous record in the ImageNet benchmark. ViT-G/14 has just under two billion parameters and was trained on three billion images.

Google shows 22 billion parameter ViT model

In a new paper, Google presents an even larger scaled ViT model. With 22 billion parameters, ViT-22B is ten times the size of ViT-G/14 and was trained on 1,024 TPU-v4 chips with four billion images.

During scaling, the team encountered some training stability issues, which they were able to resolve by making improvements such as arranging the transformer layers in parallel. This also allowed much more efficient use of the hardware.

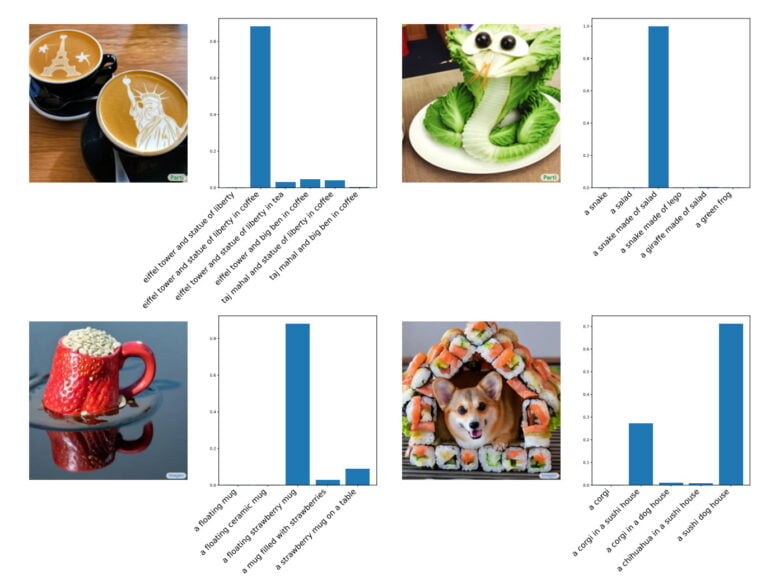

In some benchmarks, ViT-22B achieves SOTA, in others it plays in the top league - without specialization. The team tested ViT-22B in image classification, semantic segmentation, depth estimation, and video classification. In addition, Google verified the model's classification ability with AI-generated images that were not part of the training data.

ViT-22B comes closer to humans than any AI model before it

Google shows that ViT-22B is a good teacher for smaller AI models: In a teacher-student setup, a ViT-Base model learns from the larger ViT-22B and subsequently scores 88.6 percent on the ImageNet benchmark, a new high SOTA for this model size.

The Google team is also investigating the human alignment of ViT-22B. It has been known for years that AI models place too much emphasis on the texture of objects when classifying them, compared to humans. This is a fact that many adversarial attacks take advantage of.

Tests have shown that humans pay almost exclusive attention to shape and almost no attention to texture when classifying objects. In terms of values, this is 96 percent to 4 percent.

Google's ViT-22B achieves a new SOTA here, with the team showing that the model has a shape bias of 87 percent and a texture bias of 13 percent. The model is also more robust and fairer, according to the paper.

Google says ViT-22B shows the potential for "LLM-like" scaling in image processing. With further scaling, such models could also exhibit emergent capabilities that surpass some of the current limitations of ViT models.