Google's Gemini 2.0 model family expands with Flash-Lite and Pro

Google is expanding its AI model family with three new Gemini 2.0 variants, each designed for different use cases and offering varying balances of performance and cost.

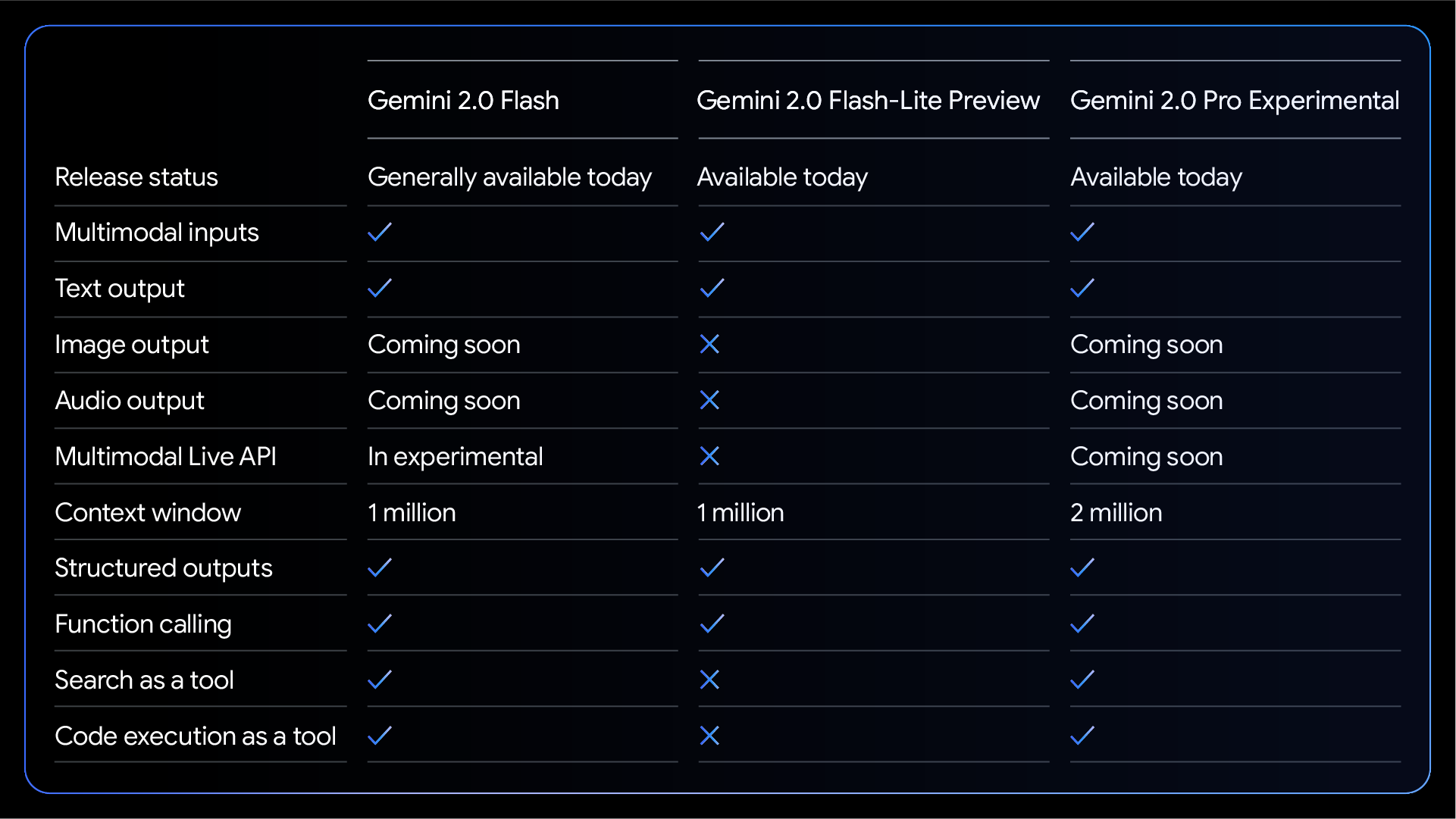

The basic Gemini 2.0 Flash model, introduced in December, is now generally available with higher rate limits and improved performance, Google says. Google is also launching Gemini 2.0 Flash-Lite, a cost-effective variant for developers that's currently in public preview through the API.

Completing the lineup is Gemini 2.0 Pro, which Google still describes as experimental. Designed for complex prompts and coding tasks, it has an extended context window of 2 million tokens, twice that of the Flash models.

For now, the models only support text output, but Google plans to add image and audio capabilities, as well as live video, to Flash and Pro in the coming months. All three models already accept images and audio as input.

Google is also testing Flash Thinking models based on Gemini 2.0. Like OpenAI's o3 and DeepSeek-R1, they run through additional reasoning steps before generating an answer. These models can access YouTube, Maps and Google Search. Notably absent from the announcement is a flagship "Gemini 2.0 Ultra" model.

Gemini 2.0 Pro leads the benchmarks

Google's benchmark data shows that Gemini 2.0 Pro outperforms its predecessors in almost all areas. On mathematical tasks, it scores 91.8 percent on the MATH benchmark and 65.2 percent on HiddenMath, well ahead of the Flash variants. The standard Gemini 2.0 Flash lands between Flash-Lite and Pro and outperforms the older Gemini 1.5 Pro.

On OpenAI's SimpleQA test, the Pro model reached 44.3 percent, while Gemini 2.0 Flash achieved 29.9 percent. DeepSeek-R1 (30.1 percent) and o3-mini-high (13.8 percent) trail the Pro model by a wide margin in this test, possibly because of smaller training datasets. SimpleQA requires models to answer difficult factual questions without internet access, which may make it less relevant for real-world applications.

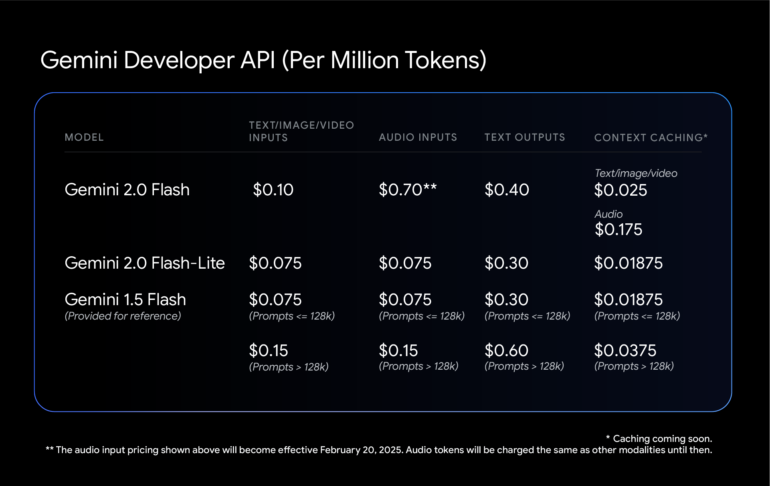

For API pricing, Google has dropped the previous distinction between short- and long-context requests. As a result, workloads that mix short and long contexts may cost less than with Gemini 1.5 Flash, despite the performance improvements.

Overall, Gemini 2.0 Flash is pricier than its predecessor. The new Flash-Lite, however, is positioned against the older Gemini 1.5 Flash: it costs the same and performs better on most benchmarks. Only real-world testing will show how the two models actually compare in quality.

All models are available through Google AI Studio and Vertex AI, as well as Google's premium Gemini Advanced chatbot on desktop and mobile devices.
