Google's new Gemini 1.5 AI models offer more power and speed at lower costs

Google has released two updated Gemini AI models that promise more power, speed, and lower costs.

According to Google, the new versions, Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002, improve significantly on their predecessors, with gains across a range of benchmarks, particularly in math, long-context, and visual tasks.

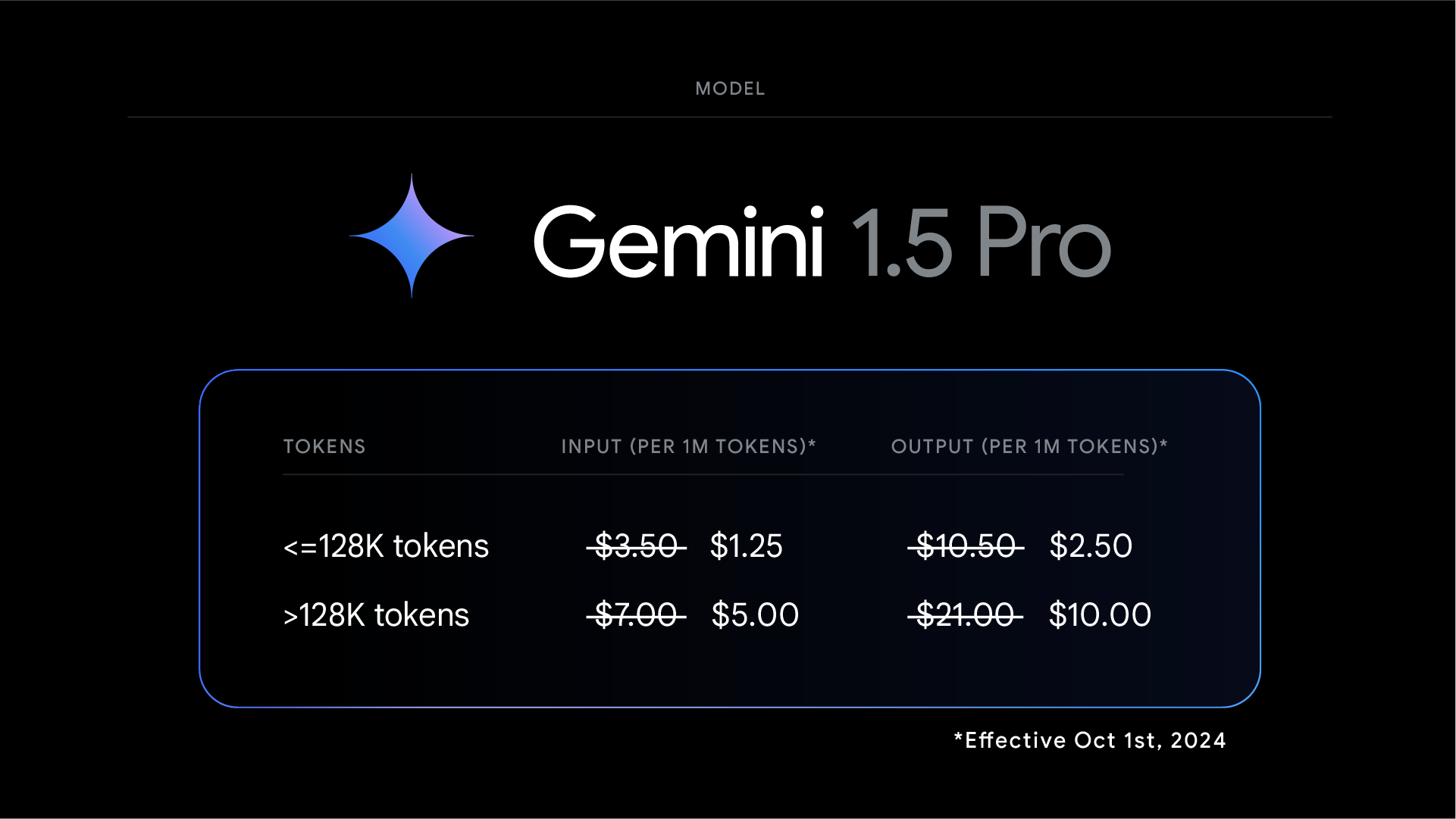

In addition, the company has cut the price of input and output tokens for Gemini 1.5 Pro by more than 50%, raised rate limits for both models, and reduced latency.

New Gemini models perform better on math benchmarks

On the MMLU-Pro benchmark, a more challenging version of MMLU, the models improved by about 7%. Math performance rose by a notable 20% on the MATH and HiddenMath benchmarks. Vision and code tasks also improved, with gains of 2 to 7% in visual understanding and Python code generation evaluations.

Google claims the models now provide more helpful responses while maintaining content safety standards. The company refined the models' output style based on developer feedback, aiming for more precise and cost-effective use.

Google has also released an improved version of the Gemini 1.5 experimental model announced in August. The updated version, 'Gemini-1.5-Flash-8B-Exp-0924', offers further enhancements for text and multimodal applications.

Users can access the new Gemini models through Google AI Studio, the Gemini API, and, for Google Cloud customers, Vertex AI. A chat-optimized version of Gemini-1.5-Pro-002 is coming soon for Gemini Advanced users.

The new pricing takes effect on October 1, 2024, for prompts under 128,000 tokens. Google expects that, combined with context caching, the price cuts will further reduce the cost of developing with Gemini.
