Google's TPU chips give it the world's largest AI computing power, according to research

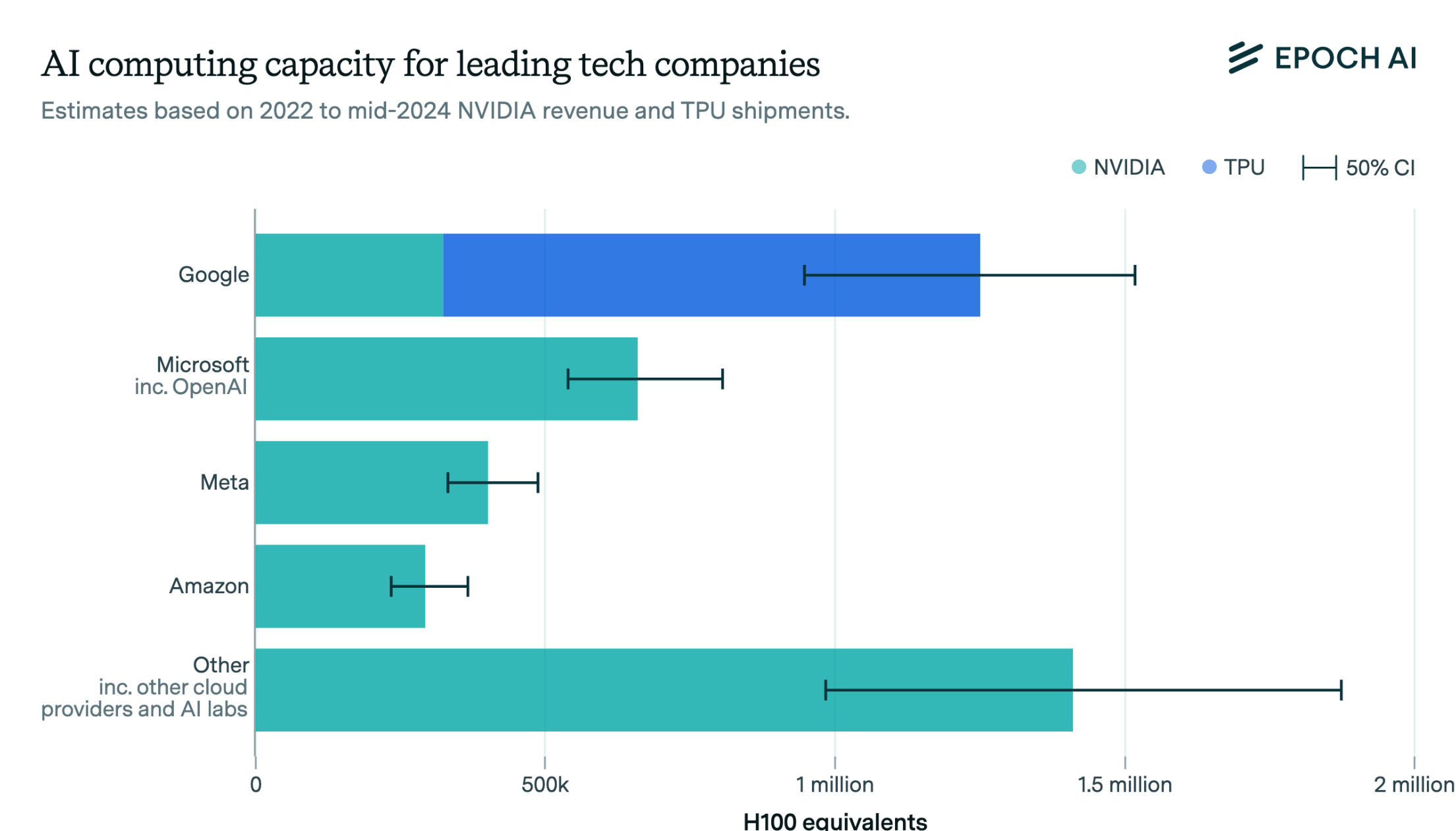

Google likely has the world's largest AI computing capacity, according to an analysis by AI research firm Epoch AI.

The tech giant's edge comes from its custom-built Tensor Processing Units (TPUs), which provide computing power equivalent to at least 600,000 Nvidia H100 GPUs. "Given this large TPU fleet, combined with their NVIDIA GPUs, Google probably has the most AI compute capacity of any single company," Epoch AI researchers noted.

Nvidia dominates the AI chip market

Despite Google's lead in total capacity, Nvidia remains the dominant player in AI chip sales. Since early 2022, Nvidia has sold AI chips with computing power equal to about 3 million H100 GPUs. Most went to four major cloud providers, with Microsoft being the largest customer.

Other buyers include cloud companies like Oracle and CoreWeave, AI firms such as xAI and Tesla, Chinese tech companies, and governments building national AI infrastructure.

Epoch AI cautions that its estimates carry significant uncertainty and that the AI chip landscape is changing rapidly as companies develop new processors and ramp up production.

Nvidia's Blackwell generation reportedly already sold out

Nvidia's upcoming Blackwell GPUs are reportedly in high demand. Barron's, citing conversations between Morgan Stanley analysts and Nvidia CEO Jensen Huang, reports that the next 12 months of Blackwell supply are already sold out.

Beyond Nvidia and Google, other players like AMD, Intel, Huawei, Amazon, Meta, OpenAI, and Microsoft are developing their own AI chips. China is reportedly encouraging domestic firms to buy more chips from Chinese suppliers like Huawei.
