Meta shows HyperReel, a new way to store and render 6-DoF video. HyperReel could be used in AR and VR applications, for example.

For years, 180° or 360° 3D videos have been the culmination of many efforts to produce the most immersive videos possible for virtual reality. Better cameras and higher resolutions are becoming available.

But an important step has not yet been taken: immersive videos in six degrees of freedom (6-DoF), which make it possible to change the head position in space in addition to the viewing direction.

There have already been initial attempts to make such particularly immersive videos suitable for mass consumption, such as Google's Lightfields technology or even experiments with volumetric videos like Sony's Joshua Bell video.

In recent years, research has increasingly focused on "view synthesis" methods. These are AI methods that can render new perspectives in an environment. Neural Radiance Fields (NeRFs) are an example of such a technique. They learn 3D representations of objects or entire scenes from a video or many photos.

6-DoF video must be fast, high quality, and sparse.

Despite numerous advances in view synthesis, there is no method that provides high-quality representations that are simultaneously rendered quickly and have low memory requirements. For example, even with current methods, synthesizing a single megapixel image can take nearly a minute, while dynamic scenes quickly require terabytes of memory. Additionally, capturing reflections and refraction is a major challenge.

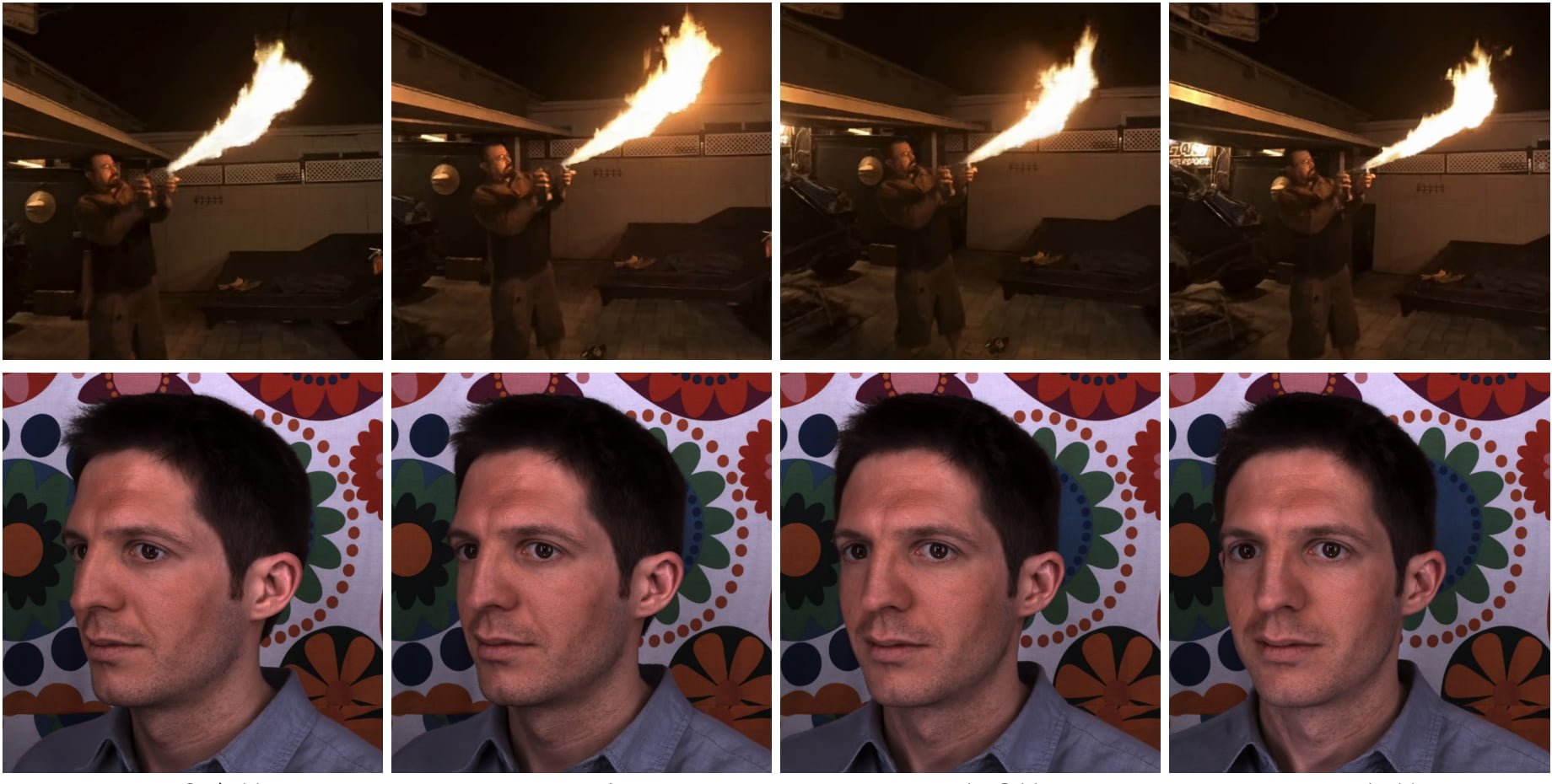

Researchers from Carnegie Mellon University, Reality Labs Research, Meta, and the University of Maryland are now demonstrating HyperReel, a method that is memory-efficient and can render at high resolution in real time.

To do this, the team uses a neural network that takes rays as input and outputs parameters, such as color, for a set of geometric primitives and displacement vectors. Instead of evaluating the hundreds of points along each ray that are common in NeRFs, the method predicts geometric primitives in the scene, such as planes or spheres, and computes the intersections between the rays and those primitives.
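The geometric core of this idea, intersecting a ray with planes and spheres to obtain a handful of sample points, can be sketched in a few lines of Python. This is a minimal illustration, not HyperReel's implementation: the primitives here are fixed by hand, whereas in HyperReel a network predicts them per ray, and all function names (`intersect_plane`, `intersect_sphere`, `sample_points`) are hypothetical.

```python
import math


def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def point_on_ray(origin, direction, t):
    """Point origin + t * direction."""
    return tuple(o + t * d for o, d in zip(origin, direction))


def intersect_plane(origin, direction, normal, offset):
    """Distance t to the plane dot(normal, x) = offset, or None if there is no hit."""
    denom = dot(normal, direction)
    if abs(denom) < 1e-9:  # ray is parallel to the plane
        return None
    t = (offset - dot(normal, origin)) / denom
    return t if t > 0 else None


def intersect_sphere(origin, direction, center, radius):
    """Nearest positive distance t to the sphere surface, or None if there is no hit."""
    oc = tuple(o - c for o, c in zip(origin, center))
    a = dot(direction, direction)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0:  # ray misses the sphere
        return None
    root = math.sqrt(disc)
    for t in sorted(((-b - root) / (2.0 * a), (-b + root) / (2.0 * a))):
        if t > 0:
            return t
    return None


def sample_points(origin, direction, primitives):
    """One sample point per primitive the ray hits, ordered by distance."""
    hits = []
    for kind, params in primitives:
        if kind == "plane":
            t = intersect_plane(origin, direction, *params)
        elif kind == "sphere":
            t = intersect_sphere(origin, direction, *params)
        else:
            raise ValueError(f"unknown primitive: {kind}")
        if t is not None:
            hits.append(t)
    return [point_on_ray(origin, direction, t) for t in sorted(hits)]


# Hand-picked primitives (assumption: stand-ins for what a network would predict).
origin = (0.0, 0.0, 0.0)
direction = (0.0, 0.0, 1.0)
primitives = [
    ("plane", ((0.0, 0.0, 1.0), 2.0)),   # plane z = 2
    ("sphere", ((0.0, 0.0, 5.0), 1.0)),  # unit sphere centered at z = 5
]
points = sample_points(origin, direction, primitives)
print(points)
```

Each ray yields only as many sample points as there are primitives it hits, rather than hundreds of evenly spaced samples, which is where the savings in evaluation cost would come from.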

In addition, the team uses a memory-efficient method for rendering dynamic scenes with a high compression ratio and interpolation between individual frames.

Meta's HyperReel achieves between 6.5 and 29 frames per second

The quality of the dynamic and static scenes shown outperforms most other approaches. The team achieves between 6.5 and 29 frames per second on an Nvidia RTX 3090 GPU, depending on the scene and model size. However, 29 frames per second is currently possible only with the Tiny model, which renders at a significantly lower resolution.

Video: Meta

Unlike NeRFPlayer, HyperReel is not yet suitable for streaming. According to Meta, this would be an easy fix because the file size is small: NeRFPlayer requires about 17 megabytes per image, Google's Immersive Light Field Video 8.87 megabytes per image, and HyperReel only 1.2 megabytes.
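To put these per-image figures side by side, the following sketch computes how much larger each format is than HyperReel and how much data one second of video would occupy. The sizes are the ones quoted above. The playback rate of 30 frames per second is an assumption made only for this illustration, and the function names are hypothetical.

```python
# Per-image sizes in megabytes, as quoted in the article.
MB_PER_IMAGE = {
    "NeRFPlayer": 17.0,
    "Google Immersive Light Field Video": 8.87,
    "HyperReel": 1.2,
}

# Assumption for illustration only: the article does not state a playback rate.
ASSUMED_FPS = 30


def relative_size(name, baseline="HyperReel"):
    """How many times larger one image is compared with the baseline format."""
    return MB_PER_IMAGE[name] / MB_PER_IMAGE[baseline]


def megabytes_per_second(name, fps=ASSUMED_FPS):
    """Data volume for one second of video at the given frame rate."""
    return MB_PER_IMAGE[name] * fps


for name in MB_PER_IMAGE:
    print(
        f"{name}: {relative_size(name):.1f}x HyperReel, "
        f"{megabytes_per_second(name):.0f} MB per second at {ASSUMED_FPS} fps"
    )
```

Under these numbers, a NeRFPlayer image is roughly 14 times the size of a HyperReel image, and a Google Immersive Light Field Video image roughly 7 times.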

HyperReel is not yet suitable for real-time virtual reality applications, which should ideally render at least 72 frames per second in stereo. However, since the method is implemented in vanilla PyTorch, a significant increase in speed could be achieved in the future with additional technical effort, Meta said.
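The size of this gap can be estimated with some simple arithmetic. The sketch below assumes that stereo rendering costs two independent views per frame and that the reported 6.5 and 29 frames per second refer to single views. Neither assumption is confirmed by the article, so the results are rough estimates only.

```python
TARGET_FPS = 72     # VR target quoted in the article
VIEWS_PER_FRAME = 2  # assumption: stereo means one full render per eye


def frame_time_ms(fps):
    """Time available (or spent) per rendered view in milliseconds."""
    return 1000.0 / fps


def required_speedup(measured_fps):
    """Factor by which rendering would need to speed up to hit the VR target."""
    required_views_per_second = TARGET_FPS * VIEWS_PER_FRAME
    return required_views_per_second / measured_fps


# Frame rates reported for an Nvidia RTX 3090, depending on scene and model size.
for label, fps in [("slowest reported", 6.5), ("fastest reported, Tiny model", 29.0)]:
    print(
        f"{label}: {frame_time_ms(fps):.1f} ms per view, "
        f"needs about {required_speedup(fps):.1f}x speedup"
    )
```

Under these assumptions, even the Tiny model would need to render roughly five times faster, and the slowest configuration more than twenty times faster.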

More information, examples, and the code are available on GitHub.