Imagen Video: Google unveils high-definition text-to-video AI

Google's latest generative AI, Imagen Video, creates short videos based on text input. It achieves a new quality standard in resolution and frame rate.

Shortly after Meta with Make-a-Video, Google introduces its own text-to-video system: It draws on the diffusion technique and other insights from the image AI Imagen, the most powerful image AI publicly presented to date, but uses a more complex setup based on a "cascade of video diffusion models," according to Google's research team.

"A bunch of autumn leaves falling on a calm lake to form the text 'Imagen Video'. Smooth. | Video: Google

Compared to the Imagen image model, the Imagen video model was extended to the temporal domain and trained with images and videos at the same time. The strengths of the Imagen image model have been retained, according to Google. For text processing, Google relied on a large, pre-trained Transformer language model (T5-XXL), as it did for the Imagen image model.

Imagen Video achieves HD resolution and smooth frame rates

Imagen Video's outstanding feature is its high resolution combined with a relatively high frame rate: the system achieves HD standard with 1280 x 768 pixels at 24 frames per second. Meta generated videos using Make-a-Video for initial testing with a maximum resolution of 768 x 768 videos at a lower frame rate.

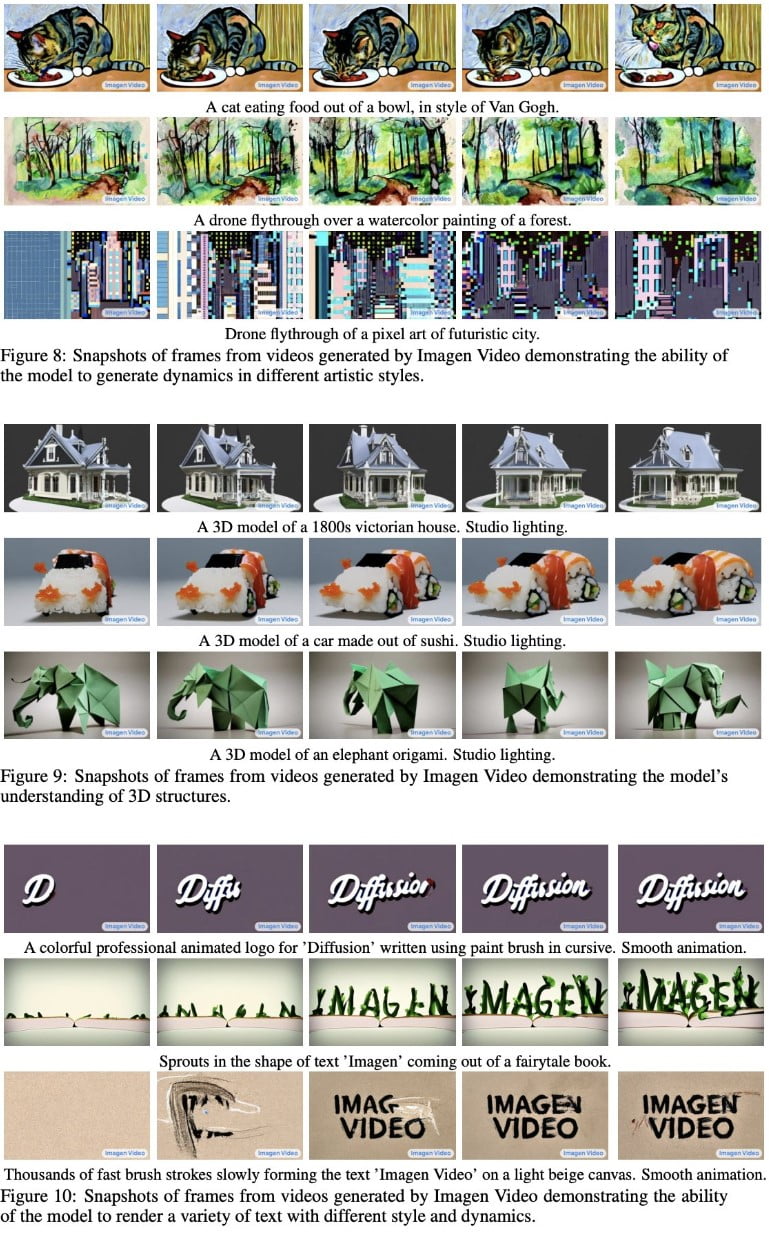

Imagen Video, like generative imaging systems, masters various artistic styles - from pixel art to Van Gogh - has an understanding of 3D objects and can spell words correctly, according to Google. The latter feature was previously only possible with Imagen image.

The system also offers a "high degree of controllability and world knowledge" and can generate videos very accurately to the text command, the research team writes - and demonstrates.

Prompt: "Sprouts in the shape of text 'Imagen Video' coming out of a fairytale book."

Model Output: pic.twitter.com/FVgnM0UAAn- Durk Kingma (@dpkingma) October 5, 2022

As with Meta's make-a-video, however, the length of the videos is limited: the output is currently around five seconds maximum. So Imagen Video tends to generate longer animations rather than videos - but a solution to this is also on the horizon.

Long AI videos: Phenaki also comes from Google

The fact that AI can also generate long videos was demonstrated just last week by the text-to-video AI system Phenaki, which can generate entire story scenes from prompts that build on each other. In a demo, the Phenaki research team showed an approximately two-minute-long video that was generated along a small script.

At the time of Phenaki's release, it wasn't clear who was behind the paper for review reasons. Now, Imagen video lead author Jonathan Ho reveals on Twitter that Phenaki is also a Google project.

The next step, according to Ho, is to combine the image quality of Imagen Video and the coherence and video length of Phenaki.

Imagen Video will not be released for now

As with Imagen for images, Google is not releasing the model at this time. The reason is the same: Imagen Video was trained with partly "problematic data".

While internal testing had successfully filtered out much explicit and violent content, social biases and stereotypes were still replicated. It is a challenge to recognize and filter these, the research team writes.

With a similar reasoning, Meta also refrained from releasing Make-a-Video for the time being, but at least held out the prospect of a demo in the near future.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.