UC Berkeley and Google researchers demonstrate a new method for generative AI that could replace diffusion models.

Generative AI models, such as GANs, diffusion models, or more recently consistency models, generate images by mapping an input, such as random noise, a sketch, or a low-resolution or otherwise corrupted image, to outputs that correspond to a given target data distribution, usually natural images. Diffusion models, for example, do this by "denoising" an image in several steps, learning the target data distribution during training.

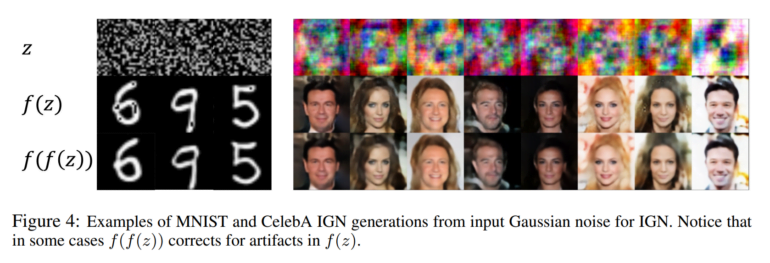

Researchers from UC Berkeley and Google now present a new generative model, called "Idempotent Generative Networks" (IGNs), that learns through training to generate a suitable image from any form of input, ideally in a single step. The proposed method is intended to be a "global projector" that projects any input data onto the target data distribution and, unlike other methods, is not limited to specific inputs.

Incidentally, the team cites a scene from Seinfeld as inspiration for the work, which sums up the eponymous concept of idempotent operators.

Idempotent generative networks show potential in first study

IGNs differ from GANs and diffusion models in two important ways: Unlike GANs, which require separate generator and discriminator models, IGNs are "self-antagonistic" - they fulfil both roles. Unlike diffusion models, which perform incremental steps, IGNs attempt to map the inputs to the data distribution in a single step.

The researchers demonstrate the potential of IGNs using the MNIST and CelebA datasets. The team shows applications such as converting a sketch into a photorealistic image, generating an image from noise, or repairing a damaged image.

Although the image quality is not yet state-of-the-art, the examples show that the method works, allows simple manipulations such as adding a headset to a face, and can handle any input such as sketches or damaged images.

Google how to scale up new generative AI method

IGNs could be much more efficient at inference because they produce their results in a single step after training. They could also produce more consistent results, which could be beneficial for certain applications such as medical image repair.

"We see this work as a first step towards a model that learns to map arbitrary inputs to a target distribution, a new paradigm for generative modeling."

From the paper.

Next, the team plans to scale up IGNs with significantly more data, hoping to realise the full potential of the new generative AI model. The code will soon be available on GitHub.