InstructPix2Pix shows how generative AI models can edit images by following written instructions. The method was quickly integrated into existing tools.

OpenAI's recently released chatbot, ChatGPT, outperforms the company's older models in almost all tasks. A key feature of the bot is that it follows natural language instructions better than previous models and can, for example, rephrase previously generated text or correct errors in code.

This works because the underlying model, "text-davinci-003", was optimized with human feedback to follow instructions. ChatGPT was then trained with additional feedback.

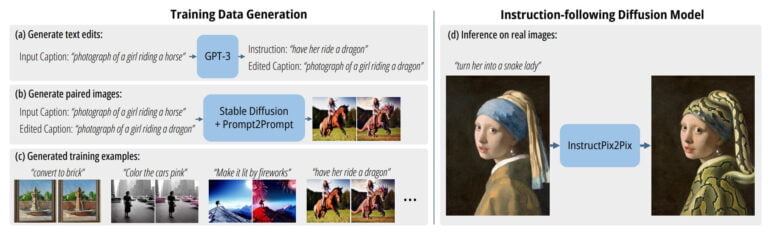

GPT-3 and Stable Diffusion generate synthetic training data

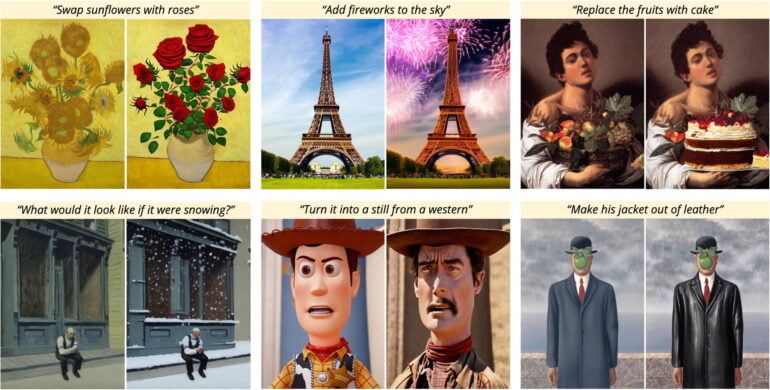

A similar approach has now been applied to image editing by researchers at the University of California, Berkeley. InstructPix2Pix describes a method for editing images using natural language instructions. It can be used, for example, to replace objects in an image or to change its style, its setting, or its artistic medium.

Like OpenAI, the team needs training data consisting of successfully executed instructions. Unlike OpenAI, however, the researchers build on an almost entirely synthetic dataset.

The team used a combination of GPT-3 and Stable Diffusion to generate its training data. Starting from a description of an initial image, the OpenAI language model generated an instruction to change certain details of that image and a description of the resulting image.
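To make the structure of this text data concrete, here is a minimal Python sketch of one record holding the three texts described above. The class names, field names, and helper function are hypothetical, chosen for illustration, and the captions are illustrative examples rather than entries quoted from the dataset. The helper pairs the "before" and "after" captions with one shared random seed, on the assumption that the two images should start from the same noise so that they differ mainly in the edited detail; Prompt-to-Prompt additionally shares attention maps between the two generation runs, which this sketch does not model.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class EditTriplet:
    """One text record (hypothetical field names)."""
    input_caption: str   # describes the initial image
    instruction: str     # the edit to apply
    output_caption: str  # describes the edited image


@dataclass(frozen=True)
class GenerationJob:
    """Hypothetical job for an image generator: two prompts, one shared seed."""
    before_prompt: str
    after_prompt: str
    seed: int


def to_generation_job(triplet: EditTriplet, rng: random.Random) -> GenerationJob:
    # Both prompts reuse the same seed, so both images start from identical noise.
    seed = rng.randrange(2**32)
    return GenerationJob(triplet.input_caption, triplet.output_caption, seed)


example = EditTriplet(
    input_caption="photograph of a girl riding a horse",
    instruction="have her ride a dragon",
    output_caption="photograph of a girl riding a dragon",
)
job = to_generation_job(example, random.Random(0))
print(job.before_prompt, "->", job.after_prompt)
```

The instruction itself is not needed for image generation; it is kept in the record because it becomes the text input when the editing model is trained.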

With these two descriptions, the team then used Stable Diffusion and the Prompt-to-Prompt image editing method to generate about 100 candidate images, and then used CLIP to narrow them down to a pair of similar images that matched the desired change.
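The CLIP filtering step can be sketched as follows, under stated assumptions. Each candidate before/after pair is first required to stay visually similar (cosine similarity of the two image embeddings), and is then scored by a directional similarity: the cosine between the change in the image embeddings and the change in the caption embeddings. The exact criterion, both thresholds, and all function names here are assumptions for illustration, and the short vectors are toy stand-ins for real CLIP embeddings; no CLIP model is loaded.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def directional_similarity(img_before: Vector, img_after: Vector,
                           txt_before: Vector, txt_after: Vector) -> float:
    # Does the change between the two images point the same way
    # as the change between the two captions?
    img_delta = [a - b for a, b in zip(img_after, img_before)]
    txt_delta = [a - b for a, b in zip(txt_after, txt_before)]
    return cosine(img_delta, txt_delta)


def pick_best_pair(candidates: List[Tuple[Vector, Vector]],
                   txt_before: Vector, txt_after: Vector,
                   min_direction: float = 0.2,
                   min_image_sim: float = 0.5) -> Optional[int]:
    """Index of the best (before, after) candidate, or None if none passes.

    Both thresholds are hypothetical values chosen for this toy example.
    """
    best_index, best_score = None, min_direction
    for i, (img_before, img_after) in enumerate(candidates):
        if cosine(img_before, img_after) < min_image_sim:
            continue  # the two images drifted too far apart
        score = directional_similarity(img_before, img_after, txt_before, txt_after)
        if score > best_score:
            best_index, best_score = i, score
    return best_index


# Toy 4-dimensional "embeddings" standing in for real CLIP outputs.
txt_before, txt_after = [1.0, 0.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0]
before = [3.0, 0.0, 0.0, 0.0]
candidates = [
    (before, [3.0, 0.0, 0.0, 1.0]),  # changed, but not in the captioned direction
    (before, [3.0, 1.0, 0.0, 0.0]),  # small change along the caption change
    (before, [0.0, 3.0, 0.0, 0.0]),  # right direction, but a completely new image
]
print(pick_best_pair(candidates, txt_before, txt_after))  # 1
```

Keeping only the single highest-scoring candidate yields one before/after pair per caption pair; a filter could equally keep every candidate that clears both thresholds.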

The team then trained the InstructPix2Pix model on the full AI-generated dataset, which contains more than 450,000 Stable Diffusion image pairs and the corresponding GPT-3 editing instructions.

InstructPix2Pix shows impressive capabilities despite being trained only with synthetic data

Although InstructPix2Pix was trained only on synthetically generated material, the team says it generalizes to real images and to instructions written by users, and that it edits an image in a matter of seconds.

Of course, InstructPix2Pix is far from perfect. According to the researchers, the model struggles in particular with instructions that change the number of objects or that require spatial reasoning. They see human feedback as an important area of future work for improving the model.

Try InstructPix2Pix

The researchers have made their model available on Hugging Face, and the first implementations for popular Stable Diffusion GUIs such as NMKD and AUTOMATIC1111 already exist. Playground AI also appears to have integrated the model; you can try it there after registering for free.

Introducing AI-first image editing to Playground—a way to instruct an AI to synthesize spectacular yet subtle edits

Try it here: https://t.co/pRmwNfsfzg

Example: "Make it a ferrari"

— Playground AI (@playground_ai) January 24, 2023

AI image processing in Photoshop

Beyond marking what AI can currently do, advances like these are of particular long-term interest to the photography industry.

Industry leader Adobe has long used machine learning in its products. In 2021, the U.S. company added features to Photoshop called "Neural Filters", which can, for example, change the season of a landscape with a single click.

With models like InstructPix2Pix and Stable Diffusion integrations for Photoshop already available, workflows in the graphics industry could change fundamentally and quickly.