Generative AI models like Stable Diffusion can generate images - but have trouble editing them. Google shows a new method that allows more control.

With OpenAI's DALL-E 2, Midjourney, or Stable Diffusion, interested parties have a whole range of generative text-to-image models to choose from. All models produce believable images and can be controlled via prompt engineering. In many cases, therefore, the choice of offering is primarily a matter of personal preference, in some cases a matter of specific requirements that one model can meet better than another.

Apart from prompt engineering, there are other features that allow greater control over the desired result: outpainting, variations, or masking parts of an image. OpenAI's DALL-E 2 was a pioneer here with the editing function, where areas of an image can be masked and then regenerated. Similar solutions now also exist for Stable Diffusion.

Google's Prompt-to-Prompt allows text-level control

However, editing by masking has limitations, as it allows only rather rough changes in the edit - or requires an elaborate combination of extremely precise masking and various prompt changes.

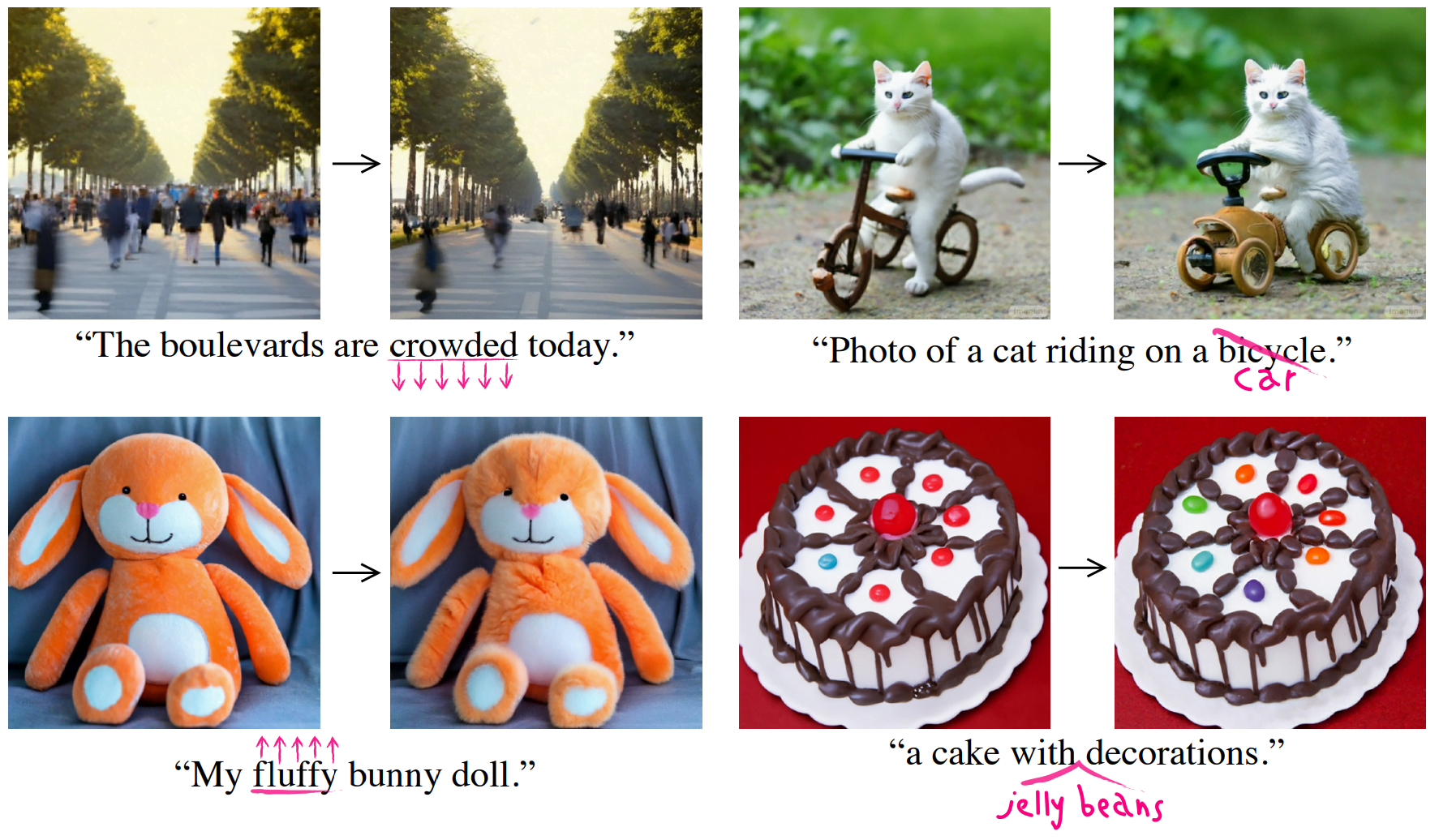

Researchers at Google show an alternative: Prompt-to-Prompt does without masking and instead allows control via changes to the original prompt. The team accesses the cross-attention maps in the generative AI model for this purpose. These represent the link between the text prompt and the generated images and contain semantic information relevant to a generation.

Manipulating these cross-attention maps can thus control the diffusion process of the model, of which the authors show several variants. One of them allows changing a single word of the text prompt while keeping the rest of the scene intact, which for example switches an object for another. A second method allows words to be added, adding objects or other visual elements to an otherwise unchanging scene. A third method can adjust the weighting of individual words, changing a feature of an image, such as the size of a group of people or the fluffiness of a teddy bear.

Prompt-to-Prompt is easy to use for Stable Diffusion

According to Google, Prompt-to-Prompt requires no finetuning or other optimizations and can be applied directly to existing models for more control. In their work, the researchers test the method with Latent Diffusion and Stable Diffusion. Prompt-to-Prompt is expected to run on graphics cards with at least 12 gigabytes of VRAM, according to Google.

This work is a first step towards providing users with simple and intuitive means to edit images and navigate through a semantic, textual, space, which exhibits incremental changes after each step, rather than producing an image from scratch after each text manipulation.

From the paper.

YouTuber Nerdy Rodent shows how Prompt-to-Prompt can be used for Stable Diffusion in his tutorial.

More information about Prompt-to-Prompt and the code is available on GitHub.